A2. Annex 2 - JCP-Consult


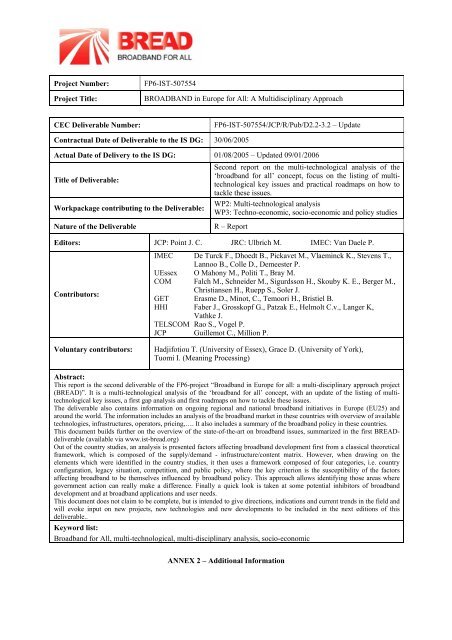

Project Number: FP6-IST-507554<br />

Project Title: BROADBAND in Europe for All: A Multidisciplinary Approach<br />

CEC Deliverable Number: FP6-IST-507554/JCP/R/Pub/D2.2-3.2 – Update<br />

Contractual Date of Deliverable to the IS DG: 30/06/2005<br />

Actual Date of Delivery to the IS DG: 01/08/2005 – Updated 09/01/2006<br />

Title of Deliverable: Second report on the multi-technological analysis of the ‘broadband for all’ concept, focus on the listing of multi-technological key issues and practical roadmaps on how to tackle these issues.<br />

Workpackage contributing to the Deliverable: WP2: Multi-technological analysis; WP3: Techno-economic, socio-economic and policy studies<br />

Nature of the Deliverable: R – Report<br />

Editors: JCP: Point J. C.; JRC: Ulbrich M.; IMEC: Van Daele P.<br />

Contributors:<br />

IMEC: De Turck F., Dhoedt B., Pickavet M., Vlaeminck K., Stevens T., Lannoo B., Colle D., Demeester P.<br />

UEssex: O'Mahony M., Politi T., Bray M.<br />

COM: Falch M., Schneider M., Sigurdsson H., Skouby K. E., Berger M., Christiansen H., Ruepp S., Soler J.<br />

GET: Erasme D., Minot C., Temoori H., Bristiel B.<br />

HHI: Faber J., Grosskopf G., Patzak E., Helmolt C.v., Langer K., Vathke J.<br />

TELSCOM: Rao S., Vogel P.<br />

JCP: Guillemot C., Million P.<br />

Voluntary contributors:<br />

Hadjifotiou T. (University of Essex), Grace D. (University of York), Tuomi I. (Meaning Processing)<br />

Abstract:<br />

This report is the second deliverable of the FP6 project “Broadband in Europe for all: a multi-disciplinary approach” (BREAD). It is a multi-technological analysis of the ‘broadband for all’ concept, with an update of the listing of multi-technological key issues, a first gap analysis and first roadmaps on how to tackle these issues.<br />

The deliverable also contains information on ongoing regional and national broadband initiatives in Europe (EU25) and around the world. This includes an analysis of the broadband market in these countries, with an overview of available technologies, infrastructures, operators and pricing, and a summary of the broadband policy in these countries. This document builds further on the overview of the state of the art on broadband issues summarized in the first BREAD deliverable (available via www.ist-bread.org).<br />

Drawing on the country studies, an analysis of the factors affecting broadband development is presented, first within a classical theoretical framework composed of the supply/demand - infrastructure/content matrix. When drawing on the elements identified in the country studies, however, the analysis uses a framework of four categories (country configuration, legacy situation, competition, and public policy), where the key criterion is the susceptibility of the factors affecting broadband to be themselves influenced by broadband policy. This approach makes it possible to identify those areas where government action can really make a difference. Finally, a quick look is taken at some potential inhibitors of broadband development and at broadband applications and user needs.<br />

This document does not claim to be complete, but is intended to give directions, indications and current trends in the field and to elicit input on new projects, new technologies and new developments to be included in the next editions of this deliverable.<br />

Keyword list:<br />

Broadband for All, multi-technological, multi-disciplinary analysis, socio-economic<br />

ANNEX 2 – Additional Information

Disclaimer<br />

FP6-IST-507554/JCP/R/Pub/D2.2-3.2–Update 09/01/06<br />

The information, documentation and figures available in this deliverable are written by the BREAD (“Broadband in Europe for all: a multi-disciplinary approach”) project consortium under EC co-financing contract IST-507554 and do not necessarily reflect the views of the European Commission.<br />

Table of Contents<br />

DISCLAIMER<br />
TABLE OF CONTENTS<br />
A2. ANNEX 2 – ADDITIONAL INFORMATION<br />
A2.1 HOME NETWORK (UPDATED 03/01/2006)<br />
A2.1.1 Introduction<br />
A2.1.2 Home Networks: Technologies / Standardisation<br />
A2.1.3 Issues and technical trends / gap analysis<br />
A2.1.4 Home Networks: Roadmap<br />
A2.2 CABLE<br />
A2.2.1 Introduction<br />
A2.2.2 Requirements<br />
A2.2.3 HFC cable network deployment situation<br />
A2.2.4 Plant capacity<br />
A2.2.5 Physical and MAC layers<br />
A2.2.6 Open access and related issues<br />
A2.2.7 IP architecture<br />
A2.2.8 Security<br />
A2.2.9 Home network<br />
A2.2.10 Potential issues and topics to develop<br />
A2.2.11 Appendix 1: Analysis of disturbances in cable upstream<br />
A2.3 FTTX UPDATED 05/01/2006<br />
A2.3.1 Introduction<br />
A2.3.2 State of the Art<br />
A2.3.3 Issues and trends<br />
A2.3.4 Roadmap<br />
A2.3.5 References<br />
A2.4 HAP UPDATED 01/06<br />
A2.4.1 Introduction<br />
A2.4.2 Platforms<br />
A2.4.3 Connections<br />
A2.4.4 CAPANINA Project<br />
A2.4.5 Roadmap<br />
A2.4.6 Other research<br />
A2.4.7 References<br />
A2.5 MOBILITY<br />
A2.5.1 Seamless Mobility: Convergence in networks and services<br />
A2.5.2 Broadband mobile convergence network<br />
A2.5.3 Extra information:<br />
A2.6 VIDEO IN ALL-IP BROADBAND NETWORKS<br />
A2.6.1 Audio Video Coding<br />
A2.6.2 Emerging transport protocols<br />
A2.6.3 Application-layer QoS mechanisms<br />
A2.6.4 Network QoS for Internet multimedia<br />
A2.6.5 MPLS and traffic engineering<br />
A2.6.6 Session and application level signalling<br />
A2.6.7 Session signalling and QoS networks: Interaction and Integration<br />
A2.6.8 Content adaptation<br />
A2.6.9 Content Delivery Networks<br />
A2.6.10 Roadmap<br />
A2.6.11 Appendix 1: Applications that would benefit from scalable AV coding<br />
A2.6.12 Appendix 2: List of relevant standardization bodies<br />
A2.6.13 Appendix 3: Overview of MPEG-21<br />
A2.6.14 Appendix 4: Main streaming media products with their characteristics<br />
A2.6.15 Appendix 5: Some content delivery networks (CDN) providers<br />
A2.7 OPTICAL METRO / CWDM<br />
A2.7.1 Introduction<br />
A2.7.2 The Metropolitan Optical Networks<br />
A2.7.3 The vision<br />
A2.7.4 Gap analysis<br />
A2.7.5 Network architecture key issues<br />
A2.7.6 Enabling technologies<br />
A2.7.7 Summary<br />
A2.7.8 Appendix 1: The OPTIMIST roadmapping exercise for Metro network<br />
A2.7.9 Appendix 2: FP6-IST-NOBEL scenario – Extract from NOBEL D11. 2004<br />
A2.7.10 Appendix 3: Standards<br />
A2.7.11 Appendix 4: Related IST projects<br />
A2.8 OPTICAL BACKBONE<br />
A2.8.1 Introduction<br />
A2.8.2 Roadmap for Optical Core Networks<br />
A2.8.3 Transmission Technology<br />
A2.8.4 Optical Networking Technology<br />
A2.8.5 Control Plane<br />
A2.8.6 Trends and issues to be developed in the course of BREAD<br />
A2.8.7 Related technical initiatives<br />
A2.9 GRID NETWORKS<br />
A2.9.1 Introduction<br />
A2.9.2 Enablers and drivers for grids<br />
A2.9.3 Distinction between peer-peer, cluster and grid networks<br />
A2.9.4 Grid Network Required Elements<br />
A2.9.5 Grid Research Trends<br />
A2.10 SECURITY<br />
A2.10.1 Introduction<br />
A2.10.2 Security protocols and mechanisms<br />
A2.10.3 Emerging Technologies<br />
A2.10.4 Mobility and network access control<br />
A2.10.5 Dependability in Broadband networks<br />
A2.10.6 Digital privacy protection<br />
A2.11 OVERALL MANAGEMENT AND CONTROL<br />
A2.11.1 Introduction<br />
A2.11.2 Management<br />
A2.11.3 Control<br />
A2.11.4 Application level signalling<br />
A2.11.5 Conclusions, comparisons and roadmaps<br />
A2.11.6 Links to other IST projects<br />

A2. Annex 2 – Additional information<br />

A2.1 HOME NETWORK (Updated 03/01/2006)<br />

A2.1.1 Introduction<br />

Home networks are based on wired and wireless technologies (Table 1: Technologies of Home-Networks). Applications are home control, communication, infotainment, and entertainment. The most challenging topics yet to be addressed in home network environments are interworking and interoperability, as well as the seamless provision of services independent of the underlying networks. Fixed home networks require cabling between the devices, using existing wires such as phone lines and power lines. Premium performance is obtained with broadband media such as twisted pair, coaxial cable or optical fibre. In contrast to wired networks, wireless systems are far easier to deploy; however, their performance depends strongly on the environmental conditions. Propagation loss, shadowing, absorption, multipath propagation due to reflections at obstacles, and Doppler spread may limit the maximum distance and transmission speed. From cordless phones to cellular handsets, consumers are now unwiring their laptops, PDAs and other electronic gadgets with narrowband short-distance solutions such as Bluetooth and IEEE 802.xx / ETSI standards. The driving forces for ongoing R&D activities are thus cellular growth and the enterprise markets for wireless local area networks and wireless personal area networks (WLAN/WPAN).<br />

| Category | Medium | Data rate |
|---|---|---|
| Cabled, copper | Telephone line | 100 Mb/s (VDSL) |
| Cabled, copper | Twisted pair | < 1 Gb/s |
| Cabled, copper | Power line | < 2...14 Mb/s (shared) |
| Cabled, fibre | MM fibre | |
| Cabled, fibre | SI MMF | |
| Wireless | Radio | |
| Wireless | Infrared | kb/s...Mb/s |

Table 1: Technologies of Home-Networks

A2.1.2 Home Networks [1]: Technologies / Standardisation<br />

High-speed Internet is spreading. Homes that used to have little communication technology in the past now have<br />

multiple computers, peripherals like printers and scanners, televisions, radios, stereos, DVD players, VCRs,<br />

cordless telephones, PDAs, and other electronic devices.<br />

Home networks link the many different electronic devices in a household by way of a local area network (LAN).<br />

The network can be point-to-point, such as connecting one computer to another, or point-to-multipoint where<br />

computers and other devices such as printers, set-top boxes, and stereos are connected to each other and the<br />

Internet. There are many different applications for home networking. They can be broken into five categories:<br />

resource sharing, communications, home controls, home scheduling, and entertainment/information.<br />

Resource Sharing<br />

Home networking allows all users in the household to access the Internet and other applications at the same<br />

time. In addition, files (not just data, but also audio and video depending on the speed of the network) can be<br />

swapped, and peripherals such as printers and scanners can be shared. There is no longer the need to have more<br />

than one Internet access point, printer, scanner, or in many cases, software packages.<br />

Communications<br />

Home networking allows easier and more efficient communication between users within the household and<br />

better communication management with outside communications. Phone, fax, and e-mail messages can be<br />

routed intelligently. Access to the Internet can be attained at multiple places in the home with the use of<br />

terminals and Webpads.<br />

Home Controls<br />

Home networking allows controls within the house, such as temperature and lighting, to be managed through the network and even remotely through the Internet. The network can also be used for home security monitoring with network cameras.<br />

Home Scheduling<br />

A home network would allow families to keep one master schedule that could be updated from different access<br />

points within the house and remotely through the Internet.<br />

Entertainment/Information<br />

Home networks enable multiple options for sharing entertainment and information in the home. Networked multi-user games can be played, as well as PC-hosted television games. Digital video networking will allow households to route video from DBS and DVDs to different set-top boxes, PCs, and other visual display devices in the home. Streaming media such as Internet radio can be sent to home stereos as well as PCs.<br />

The speed of home networks is also important to consider. Most home networking solutions have speeds of at least 1 Mbps, which is enough for most everyday data transmission but may not be enough for bandwidth-intensive applications such as full-motion video. With the development of high-speed Internet access and digital video and audio comes a need for faster networks. Several kinds of home networks can operate at speeds of 10 Mbps and up. Digital video networking, for example, requires fast data rates: DBS MPEG-2 video requires 3 Mbps and DVD requires between 3 and 8 Mbps. HDTV requires more speed than current home networks offer, but that should change as home networks get faster and as technology develops and adapts to new Internet appliances and digital media.<br />
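The bit rates quoted above translate directly into a stream budget for a given link. The following is a minimal sketch in Python, assuming an idealised link; the function name `max_streams` and the `usable_fraction` parameter (a stand-in for protocol overhead and a shared medium) are illustrative assumptions, not part of any standard.

```python
# Video bit rates quoted in the text, in Mbit/s. DVD is taken at its upper bound.
STREAM_RATES_MBPS = {"DBS MPEG-2": 3.0, "DVD (upper bound)": 8.0}


def max_streams(link_mbps: float, stream_mbps: float, usable_fraction: float = 1.0) -> int:
    """Return how many simultaneous streams fit into the usable link capacity.

    usable_fraction is a hypothetical derating factor for overhead and sharing;
    1.0 means the nominal link rate is fully available.
    """
    if link_mbps <= 0 or stream_mbps <= 0 or not 0 < usable_fraction <= 1:
        raise ValueError("rates must be positive and usable_fraction in (0, 1]")
    return int((link_mbps * usable_fraction) // stream_mbps)


if __name__ == "__main__":
    for link in (1.0, 10.0):
        for name, rate in STREAM_RATES_MBPS.items():
            print(f"{link:>5} Mbit/s link, {name}: {max_streams(link, rate)} stream(s)")
```

Under these assumptions a 1 Mbit/s network carries no video stream at all, while a 10 Mbit/s network carries three DBS MPEG-2 streams or a single worst-case DVD stream.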

In general, home networking standards can be divided into two large groups: in-home networking standards, which provide interconnectivity of devices inside the home, and home-access network standards, which provide external access and services to the home via networks such as cable TV, broadcast TV, the telephone network and satellite. Additionally, there are mobile-service networks that provide access from mobile terminals when the user is away from home. Currently there is no dominant wired home networking standard, and networks are likely to be heterogeneous. A comprehensive compilation of the standards for multimedia networking is given by the MEDIANET [2] project.<br />

A2.1.2.1 Cabled Home Network<br />

Many in-home networking standards require cabling between the devices. One option is to install new cabling in the form of galvanic twisted-pair or coaxial wires, or optical fibres. The alternative is to use existing cabling, such as power lines and phone lines.<br />

Using existing cabling in the home is very convenient for end users. For in-home networking via the phone line, HomePNA [3] has become the de-facto standard, providing up to 10 Mbit/s (240 Mbit/s is expected).<br />

[1] Future Home, http://dbs.cordis.lu/ IST-2000-28133<br />

[2] MediaNet, http://www.ist-ipmedianet.org/home.html<br />

[3] HomePNA, http://www.homepna.com/, http://www.homepna.com/HPNA-DLINK-Kit.html-ssi<br />

For power-line networking, CEBus [4] and HomePlug [5] are the most prominent standards, covering low-bandwidth control and higher-bandwidth data transfer at rates from 10 kbit/s up to 14 Mbit/s.<br />

New cabling requires additional installation effort, but has the advantage that premium-quality cabling dedicated to digital data transport at high rates can be chosen. The IEEE-1394a standard (also called Firewire and i.Link) [6] defines a serial bus that allows data transfers of up to 400 Mbit/s over a twisted-pair cable, and an extension to 3.2 Gbit/s using fibre is underway. Similarly, USB [7] defines a serial bus that allows data transfers of up to 480 Mbit/s over a twisted-pair cable, but uses a master-slave protocol instead of the peer-to-peer protocol of IEEE-1394a. Both standards support hot plug-and-play and, via centralised media access control, isochronous streaming, which are of significant importance for consumer-electronics applications. The disadvantage is that this limits the cable lengths between devices. Another major player is Ethernet, which has evolved from 10 Mbit/s Ethernet and 100 Mbit/s Fast Ethernet over twisted-pair cabling into Gigabit Ethernet, providing 1 Gbit/s over twisted-pair cabling or fibre. Ethernet notably does not support isochronous streaming, since it lacks centralised medium-access control, nor does it support device discovery (plug-and-play). It is nevertheless widely used, not least because of its low cost.<br />
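To put the nominal rates of the cabled technologies side by side, the sketch below computes an idealised transfer time for a file of a given size. It is an illustration only: the rates are the peak figures quoted in this section, real throughput is lower because of protocol overhead and medium sharing, and the 700 MB file size in the usage example is an arbitrary assumption.

```python
# Nominal peak rates quoted in this section, in Mbit/s.
NOMINAL_RATE_MBPS = {
    "HomePNA": 10,
    "HomePlug": 14,
    "Fast Ethernet": 100,
    "IEEE-1394a": 400,
    "USB": 480,
    "Gigabit Ethernet": 1000,
}


def transfer_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Ideal transfer time for size_megabytes (10^6 bytes) at rate_mbps (10^6 bit/s)."""
    if size_megabytes < 0 or rate_mbps <= 0:
        raise ValueError("size must be non-negative and rate positive")
    return size_megabytes * 8 / rate_mbps


if __name__ == "__main__":
    size_mb = 700  # hypothetical file size, chosen only for illustration
    for name, rate in NOMINAL_RATE_MBPS.items():
        print(f"{name:<17} {transfer_seconds(size_mb, rate):8.1f} s")
```

The spread is two orders of magnitude between phone-line networking and Gigabit Ethernet, which is why the choice of medium matters more for video distribution than for everyday data transfer.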

A2.1.2.2 Wireless Home Network<br />

As opposed to wired networks, wireless systems are far easier to deploy. This is due to the smaller installation<br />

effort (no new wires), and due to a lower cost of the physical infrastructure. A wireless home network is<br />

configured with an access point that acts as a transmitter and a receiver connected to the wired network at a<br />

fixed location. The access point then transmits to end users who have wireless LAN adapters with either PC<br />

cards in notebooks, ISA or PCI cards in desktops, or fully-integrated devices. A wireless home network allows<br />

real-time instant access to the network without the computer having to be near a phone jack or power outlet.<br />

Installation is easy because there is no cable to pull as with conventional Ethernet. The devices do not have to be<br />

in line-of-sight but can be in different rooms or blocked by walls and other barriers. Finally, all of these services<br />

are secure as they use encryption technologies.<br />

However, regulation by law and the associated licensing fees may seriously affect the actual cost of a wireless connection. Additionally, governmental regulations vary widely throughout the world. For the licence-free spectrum bands in particular, the issue of signal interference, which limits usable bandwidth, has to be solved. This is one of the reasons why there are so many wireless standards that it is becoming difficult to keep track. From cordless phones to cellular handsets, consumers are now unwiring their laptops, PDAs and other electronic gadgets with narrowband short-distance solutions such as Bluetooth and IEEE 802.xx / ETSI standards. The driving forces for ongoing R&D activities are cellular growth and the enterprise WLAN market. Table 2 (Wireless technologies) and Table 3 (Global wireless standards) summarize the wireless technologies and wireless standards.<br />

In some cases the applications of wireless home networking, wireless access networks, and cellular systems overlap. Within the IST Future Home [8] project three wireless technologies have been addressed: IEEE 802.11 (WLAN), HiperLAN/2 and Bluetooth (WPAN). These have been selected for the following reasons:<br />

• the technologies are complementary from the usage point of view;<br />

• they are in different phases of maturity and can also be compared against each other;<br />

• these different technologies provide the heterogeneous environment that can be used as a model of a future residential network;<br />

• they can all be used as substitutes for wired connections and are therefore suitable for an IP network environment.<br />

Cellular and cordless systems have additionally been included in this chapter on Home Networks, because the largest and most noticeable part of the telecommunications business is telephony, and the principal wireless component of telephony is mobile (i.e., cellular) telephony. In recent years the development of third-generation (3G) cellular, i.e. IMT2000, and of other wireless technologies has been a key issue, for example wireless piconetworking (Bluetooth), wireless personal area network (WPAN) systems, and wireless local area networks (WLAN). Wireless metropolitan area network (WMAN) systems (IEEE 802.16 standards, called WiMAX systems), however, are described in the chapter on Wireless Access.<br />

[4] CEBus, http://www.cebus.org/<br />

[5] HomePlug, http://www.homeplug.org<br />

[6] 1394 Trade Association, http://www.1394ta.org<br />

[7] USB, http://www.usb.org<br />

[8] Future Home, http://dbs.cordis.lu/ IST-2000-28133<br />

| Wireless category | Technologies |
|---|---|
| Cordless | DECT |
| Cellular mobile | 2G GSM, 3G IMT2000 |
| Radio systems | DAB, DVBT |
| Metropolitan Area Network, WMAN | ETSI HiperMAN, ETSI HiperACCESS, IEEE 802.16 |
| Wireless Local Area Network, WLAN | ETSI HiperLAN, MMAC, IEEE 802.11 |
| Wireless Personal Area Network, WPAN | HomeRF, IEEE 802.15, UWB, Bluetooth, ZigBee |

Table 2: Wireless technologies

| Area | IEEE | ETSI | Forum/Alliance |
|---|---|---|---|
| WAN | 802.20 | 3GPP, EDGE | |
| LAN | 802.11 | HiperLAN | Wi-Fi* |
| MAN | 802.16 | HiperMAN, HiperACCESS | WiMAX** |
| PAN | 802.15 | HiperPAN | WiMedia |

Table 3: Global wireless standards

Wi-Fi*: Wireless Fidelity<br />

WiMAX**: Worldwide Interoperability for Microwave Access<br />

A2.1.2.2.1 Wireless local area networks, WLANs<br />

WLANs use two frequency bands: the IEEE 802.11b/e/g [9] standards use the 2.4 GHz band, and the IEEE 802.11a standard uses the 5 GHz band. The 802.11b standard in particular is gaining market share. 802.11 can provide up to 54 Mbit/s over a distance of 300 metres (Wireless Ethernet [10]).<br />

| Standard | Transfer method | Frequencies | Data rates supported (Mbit/s) |
|---|---|---|---|
| 802.11 legacy | FHSS, DSSS, infrared | 2.4 GHz, IR | 1, 2 |
| 802.11b | DSSS, HR-DSSS | 2.4 GHz | 1, 2, 5.5, 11 |
| "802.11b+" (non-standard) | DSSS, HR-DSSS (PBCC) | 2.4 GHz | 1, 2, 5.5, 11, 22, 33, 44 |
| 802.11a | OFDM | 5.2, 5.8 GHz | 6, 9, 12, 18, 24, 36, 48, 54 |
| 802.11g | DSSS, HR-DSSS, OFDM | 2.4 GHz | 1, 2, 5.5, 11; 6, 9, 12, 18, 24, 36, 48, 54 |

Table 4: Overview of the IEEE 802.11 Standards [11]
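The overview of the IEEE 802.11 standards can be read as a small lookup structure. The sketch below, in Python, transcribes the standard rows of Table 4 and answers two questions: which standards reach a given rate, and what is the highest rate two standards have in common. The names `TABLE_4`, `standards_supporting` and `highest_common_rate` are illustrative; the band is simplified to a single value per standard (the infrared option of legacy 802.11 is ignored and 802.11a is recorded simply as 5 GHz), and the non-standard "802.11b+" row is omitted.

```python
# Standard rows of Table 4; band simplified to one radio band per standard (GHz).
TABLE_4 = {
    "802.11 legacy": {"band_ghz": 2.4, "rates": (1, 2)},
    "802.11b": {"band_ghz": 2.4, "rates": (1, 2, 5.5, 11)},
    "802.11a": {"band_ghz": 5, "rates": (6, 9, 12, 18, 24, 36, 48, 54)},
    "802.11g": {"band_ghz": 2.4, "rates": (1, 2, 5.5, 11, 6, 9, 12, 18, 24, 36, 48, 54)},
}


def standards_supporting(min_rate_mbps):
    """Names of standards whose highest listed rate reaches min_rate_mbps."""
    return sorted(
        name for name, row in TABLE_4.items() if max(row["rates"]) >= min_rate_mbps
    )


def highest_common_rate(standard_a, standard_b):
    """Highest rate listed for both standards, or None if they share no band or rate."""
    a, b = TABLE_4[standard_a], TABLE_4[standard_b]
    if a["band_ghz"] != b["band_ghz"]:
        return None  # different radio bands: no common rate on the air
    shared = set(a["rates"]) & set(b["rates"])
    return max(shared) if shared else None


if __name__ == "__main__":
    print(standards_supporting(20))
    print(highest_common_rate("802.11b", "802.11g"))
    print(highest_common_rate("802.11a", "802.11g"))
```

This only compares the listed rate sets and bands; whether two real devices interoperate also depends on the modulation modes they implement.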

[9] http://grouper.ieee.org/groups/802/11/<br />

[10] http://www.wirelessethernet.org/OpenSection/index.asp<br />

[11] http://en.wikipedia.org/wiki/IEEE_802.11<br />

ETSI HiperLAN/2 operates in the 5 GHz band (licence-exempt bands) using OFDM modulation and TDMA (time division multiple access). The standard provides 25 Mbit/s short-range wireless access for WLAN applications in indoor and campus-wide use. Typical indoor and outdoor coverage is 50 m and 150 m, respectively. User mobility within the local service area is supported. The HiperLAN/2 standard has now been merged with 802.11a, bringing features such as power control and QoS.<br />

HiSWANa (Japanese) is a WLAN standard in the 5GHz band and has a MAC structure similar to that of<br />

HiperLAN/2. But, unlike HiperLAN/2, HiSWANa does not offer direct-link mode which allows terminals to<br />

transmit to one another without routing through an access point. HiSWANa also uses a listen-before-talk<br />

mechanism similar to 802.11a to reduce uncoordinated interference. The HiSWANa MAC combines key<br />

features of both 802.11a and HiperLAN/2, at the expense of increased overhead.<br />

Wi-Fi Alliance [12]<br />

Wi-Fi, short for wireless fidelity, is meant to be used generically when referring to any type of 802.11 network, whether 802.11b, 802.11a, dual-band, etc. The term is promulgated by the Wi-Fi Alliance, a non-profit international association formed in 1999 to certify the interoperability of wireless local area network (WLAN) products based on the IEEE 802.11 specification. The alliance has three purposes: to promote Wi-Fi worldwide by encouraging manufacturers to use standardized 802.11 technologies in their wireless networking products; to promote and market these technologies to consumers in the home, SOHO and enterprise markets; and to test and certify Wi-Fi product interoperability. A user with a "Wi-Fi Certified" product can use any brand of access point with any other brand of client hardware that is also certified. Typically, however, any Wi-Fi product using the same radio frequency (for example, 2.4 GHz for 802.11b or 11g, 5 GHz for 802.11a) will work with any other, even if not "Wi-Fi Certified". Formerly, the term "Wi-Fi" was used only in place of the 2.4 GHz 802.11b standard, in the same way that "Ethernet" is used in place of IEEE 802.3. The Alliance expanded the generic use of the term in an attempt to stop confusion about wireless LAN interoperability.<br />

Specifically the home Wi-Fi network enables everyone within a house to access each other's computers, send<br />

files to printers and share a single Internet connection. Within a small business, a Wi-Fi network can easily<br />

improve workflow, give staff the freedom to move around and allow all the users to share network devices<br />

(computers, data files, printers, etc.) and a single Internet connection.<br />

The small office Wi-Fi network also makes it easy to add new employees and computers. There is no need to install new data cables. Just add a Wi-Fi radio to the new computer, configure it, and the new employee can be up and running in minutes.<br />

To allow access to the Internet, the Internet connection (DSL, ISDN or cable modem) connects to the Wi-Fi<br />

gateway. Several Wi-Fi laptops can then wirelessly connect to the gateway. The laptop computers can connect<br />

through a built-in, or embedded, Wi-Fi radio or through a standard slide-in PC Card radio.<br />

The desktop computers can use a variety of types of Wi-Fi radios to connect to the wireless network: a plug-in<br />

USB (Universal Serial Bus) radio, a built-in PCI Card radio or an Apple AirPort module.<br />

A single printer attached to one of the desktop computers enables all of the computers on the network to print to<br />

it. Of course, the connected computer must be turned on to enable the printer to function and communicate with<br />

the rest of the network.<br />

It is also possible to use a stand-alone Wi-Fi equipped printer, or a printer with a Wi-Fi print server.<br />

If you have a combination multifunction printer, scanner and fax machine, you could access and operate this<br />

combo device, and its various capabilities, from any computer on the network.<br />

Public Wi-Fi "HotSpots" are rapidly becoming common in coffee shops, hotels, convention centres, airports,<br />

libraries, and community areas — anyplace where people gather. In these locations, a Wi-Fi network can<br />

provide Internet access to guests and visitors. People can connect using their own Wi-Fi equipped laptop<br />

computers and portable computing devices, or by using Wi-Fi equipped desktop computers provided at the<br />

location. A single networked printer with a built-in print server can also be connected to the access point, to<br />

provide printing services to users. HotSpots operate in various ways. A small public HotSpot may provide free<br />

access to its guests or it may charge a membership, per-time or data-use connection fee.<br />

[12] http://www.wi-fi.org/<br />

Even if the venue is providing Internet connectivity as a free value added service, it asks customers to provide<br />

user and registration information before they can connect to the Internet. In many instances a wireless gateway,<br />

the central base station, can provide connectivity for all the wired and wireless networking components. Internet<br />

access, wireless connectivity and wired connections all flow through the Wi-Fi gateway. This kind of Wi-Fi<br />

network differs from a completely wireless network because, in addition to the Wi-Fi connections, the Wi-Fi<br />

gateway is simultaneously supplying wired connectivity to various devices via the extra Ethernet jacks located<br />

on the wireless gateway. These can include other desktop computers, printers and print servers and wired<br />

Ethernet hubs and routers, as well as additional Wi-Fi access points 13 .<br />

<strong>A2.</strong>1.2.2.2 Wireless personal area networks, WPANs<br />

Wireless PANs typically have a short range and are intended to set up connections between personal<br />

devices. The most widely deployed standard in this class is Bluetooth 14 . It provides 1 Mbit/s for a<br />

few connected devices in a small network called a piconet. Its range is between 10 and 100 meters, depending<br />

on the transmission power and the environmental conditions. Bluetooth operates in<br />

the 2.4 GHz ISM band. The HomeRF standard also works in the 2.4 GHz ISM band. From an<br />

initial maximum data rate of 1.6 Mbit/s, it has been extended to 10 Mbit/s 15 . HomeRF has a range of 50 meters<br />

at this speed. However, it is not interoperable with its strongest competitor, IEEE 802.11b.<br />

The IEEE 802.15 16 standard is intended to go a step further. In this context the WiMedia Alliance 17 has been<br />

established. 802.15 integrates the Bluetooth standard and harmonizes it with the IEEE 802 family, so that it is<br />

IP and Ethernet compatible. 802.15 targets both a high bit-rate solution providing 20 Mbit/s and<br />

beyond, and a low bit-rate one (IEEE 802.15.4, also known as ZigBee). Within IEEE 802.15.3, study group 3c 18<br />

was formed in March 2004. The group is developing a millimetre-wave-based alternative physical layer (PHY)<br />

for the existing 802.15.3 Wireless Personal Area Network (WPAN) standard, 802.15.3-2003. This mm-wave<br />

WPAN will operate in the new and clear 57-64 GHz unlicensed band defined by FCC 47 CFR 15.255. The 60<br />

GHz WPAN will allow high coexistence (close physical spacing) with all other microwave systems in the<br />

802.15 family of WPANs. In addition, the 60 GHz WPAN will allow very high data rate applications such as<br />

high speed internet access, streaming content download (video on demand, HDTV, home theatre, etc.), real time<br />

streaming and a wireless data bus for cable replacement. Data rates in excess of 2 Gbps will be provided.<br />

Within IEEE 802.15.3, task group 3a coordinates the activities on ultra-wideband (UWB) technology 19 . UWB is a<br />

promising high-speed, low-power wireless technology for home entertainment and personal area networks. While<br />

providing wireless distribution of TV programs, movies, games and data-intensive content, UWB is also claimed<br />

not to interfere with other wireless transmissions common in the home. In February<br />

2002, the FCC allocated 7,500 MHz of unlicensed spectrum for UWB devices for communication applications<br />

in the 3.1 GHz to 10.6 GHz frequency band. The UWB system provides a WPAN with data payload<br />

rates of 28, 55 and 110 Mbps; rates of 220, 500, 660, 1000 and 1320 Mbps are also expected.<br />

Two proposals exist for UWB: one is based on multi-band OFDM transmission and the other on direct<br />

sequence spreading (DS-UWB). Two different bands are defined: one band nominally occupying the spectrum<br />

from 3.1 to 4.85 GHz (the low band), and the second band nominally occupying the spectrum from 6.2 to 9.7<br />

GHz (the high band).<br />

The DS-UWB system employs direct sequence spreading of binary phase shift keying (BPSK) and quaternary<br />

bi-orthogonal keying (4BOK) UWB pulses. Forward error correction coding (convolutional coding) is used with<br />

a coding rate of ½ and ¾.<br />

The OFDM System consists of 13 sub bands of 528 MHz width each. There are 128 subcarriers in each sub<br />

band with QPSK modulation and convolutional coding.<br />
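
The OFDM figures quoted above can be checked with a little arithmetic. The following is a minimal sketch, not part of any UWB specification: it assumes that the 128 subcarriers tile each 528 MHz sub-band evenly and that the 13 sub-bands are contiguous, and the function names are purely illustrative.<br />

```python
# Figures taken from the text above; helper names are illustrative only.
SUB_BAND_WIDTH_MHZ = 528
SUBCARRIERS_PER_SUB_BAND = 128
NUM_SUB_BANDS = 13
FCC_UWB_ALLOCATION_MHZ = 7500  # 3.1 GHz to 10.6 GHz


def subcarrier_spacing_mhz(band_width_mhz: float, subcarriers: int) -> float:
    """Spacing between adjacent subcarriers, assuming they tile the band evenly."""
    return band_width_mhz / subcarriers


def total_span_mhz(num_bands: int, band_width_mhz: float) -> float:
    """Spectrum covered, assuming contiguous, non-overlapping sub-bands."""
    return num_bands * band_width_mhz


spacing = subcarrier_spacing_mhz(SUB_BAND_WIDTH_MHZ, SUBCARRIERS_PER_SUB_BAND)
span = total_span_mhz(NUM_SUB_BANDS, SUB_BAND_WIDTH_MHZ)

print(f"Subcarrier spacing: {spacing} MHz")
print(f"Span of all sub-bands: {span} MHz")
print(f"Fits in FCC allocation: {span <= FCC_UWB_ALLOCATION_MHZ}")
```

Under these assumptions the sub-bands together occupy less than the 7,500 MHz allocated by the FCC, which is consistent with the band plan described in the text.<br />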

13 http://www.wi-fi.org/OpenSection/index.asp<br />

14 BlueTooth, http://www.bluetooth.com<br />

15 HomeRF, http://www.pcworld.com/news/article/0,aid,64024,00.asp<br />

16 IEEE 802.15, http://grouper.ieee.org/groups/802/15/<br />

17 WiMEDIA, http://www.wimedia.org/<br />

18 IEEE 802.15.3 SG3c, http://www.ieee802.org/15/pub/SG3c.html<br />

19 Ultra Wide Band, http://www.uwbforum.org/standards/specifications.asp<br />


In cooperation with the 1394 Trade Association (TA) a protocol adaptation layer (PAL) has been developed<br />

between the wired IEEE1394 and the IEEE 802.15.3 MAC. The PAL also adapts the IEEE P1394.1 bridging<br />

specification to wireless use. The result is a ‘wireless FireWire’ capability, which can be implemented with any<br />

standard or non-standard physical layer, including Ultra Wideband PHYs. The PAL permits IEEE 1394 devices<br />

and protocols to be used in a wireless environment at speeds up to 480 Mbit/s, while allowing<br />

compatibility with existing wired 1394 devices. The standard will move consumers one significant step closer<br />

to controlling home networks, HDTVs, and other advanced electronics systems wirelessly, just as they now use<br />

remote controls to change TV channels or audio output 20 .<br />

WiMedia Alliance<br />

The WiMedia Alliance is a not-for-profit open industry association formed to promote wireless personal-area<br />

network (WPAN) connectivity and interoperability for multiple industry-based protocols. The WiMedia<br />

Alliance develops and adopts standards-based specifications for connecting wireless multimedia devices,<br />

including application, transport, and control profiles; test suites; and a certification program to accelerate widespread<br />

consumer adoption of "wire-free" imaging and multimedia solutions. Related specifications and groups include IEEE 802.15,<br />

IEEE 1394, the WiMedia Alliance’s Convergence Architecture (WiMCA) and the Multiband OFDM Alliance (MBOA). The WiMedia Alliance<br />

charter is to develop a specification based on the IEEE 802.15.3 standard with a strong focus on an ultra-wide<br />

band physical layer (802.15.3a). The Alliance will establish a certification and logo program and promote the<br />

WiMedia brand 21 . Alliance activities include coordinating with other standards bodies and promoting the allocation<br />

of UWB spectrum at international regulatory bodies. The Alliance is committed to intelligently leveraging as<br />

many existing technologies as possible with the end-goal of developing an easy-to-understand consumer system<br />

for interoperable wireless multimedia devices. The WiMedia Alliance serves the consumer electronics, PC and<br />

mobile communications markets. Products specific to these markets as well as emerging convergence products<br />

will benefit from simple wireless connectivity.<br />

WiMedia-enabled products will meet the demanding requirements of portable consumer imaging and<br />

multimedia applications and support peer-to-peer connectivity and isochronous as well as asynchronous data.<br />

WiMedia technology will be optimised for low-cost, small-form factor, and quality of service (QoS) awareness<br />

and will enable multimedia applications that are not optimised by existing wireless standards.<br />

<strong>A2.</strong>1.2.2.3 Third generation mobile communication systems (cellular)<br />

For the development of 3G the ITU established the IMT2000 (International Mobile Telecommunications at<br />

2000 MHz) standard. 3G networks will provide mobile multimedia, personal services, the convergence of<br />

digitalisation, mobility, the Internet, and new technologies based on global standards. The international<br />

standardisation activities for 3G are concentrated regionally in the following bodies: the European<br />

Telecommunications Standards Institute (ETSI) Special Mobile Group (SMG) in Europe, Research Institute of<br />

Telecommunications Transmission (RITT) in China, Association of Radio Industry and Businesses (ARIB) and<br />

Telecommunication Technology Committee (TTC) in Japan, Telecommunications Technologies Association<br />

(TTA) in Korea, and Telecommunications Industry Association (TIA) and T1P1 in the United States. In order to<br />

harmonise and standardise in detail the similar ETSI, ARIB, TTC, TTA, T1 WCDMA, and related TDD<br />

proposals, the 3rd Generation Partnership Project 22 (3GPP) was established.<br />

In general, IMT-2000 consists of four systems and two main access technologies, summarised in Table 5.<br />

The systems are<br />

UMTS, CDMA2000, DECT and UWC-136 (EDGE), while the technologies are TDMA and CDMA, i.e.<br />

time- and code-division multiple access. UMTS (Universal Mobile Telecommunications System) UTRA-FDD and<br />

UTRA-TDD are the European versions of IMT-2000.<br />

A typical chip rate of 3.84 Mcps is used for the 5-MHz band allocation. Data rates of 384 kbit/s (FDD mode)<br />

and more are available, and for stationary hot-spot services a 2 Mbit/s mode (TDD) is provided. UMTS is a<br />

packet-switched technology.<br />
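
To illustrate how the fixed chip rate relates to the channel symbol rate in a CDMA system, the sketch below divides the 3.84 Mcps chip rate by a spreading factor. The list of spreading factors (powers of two from 4 to 256) is an assumption made for illustration; the user data rate additionally depends on modulation, channel coding and overhead, which are not modelled here.<br />

```python
CHIP_RATE_CPS = 3_840_000  # 3.84 Mcps, as quoted in the text

# Assumed example spreading factors (chips per symbol); illustrative only.
EXAMPLE_SPREADING_FACTORS = (4, 8, 16, 32, 64, 128, 256)


def symbol_rate_ksps(spreading_factor: int, chip_rate_cps: int = CHIP_RATE_CPS) -> float:
    """Channel symbol rate in ksymbol/s for a given spreading factor."""
    if spreading_factor <= 0:
        raise ValueError("spreading factor must be positive")
    return chip_rate_cps / spreading_factor / 1000


for sf in EXAMPLE_SPREADING_FACTORS:
    print(f"SF {sf:3d}: {symbol_rate_ksps(sf):7.1f} ksymbol/s")
```

A lower spreading factor gives a higher symbol rate at the cost of less processing gain, which is the basic trade-off behind the different data rate modes.<br />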

20 http://www.1394ta.org/Press/2003Press/december/12.08.a.htm<br />

21 http://www.caba.org/standard/wimedia.html<br />

22 http://www.3gpp.org/<br />


Wideband CDMA (W-CDMA), supported by groups in Japan (ARIB) and Europe, and backward-compatible<br />

with GSM, has been selected for the UMTS Terrestrial Radio Access (UTRA) frequency division duplex<br />

(FDD).<br />

Table 5: IMT2000 radio interfaces and access techniques, Source: Chr. Menzel, SIEMENS AG<br />

The IMT2000 frequency ranges are shown in Table 6 (the exact spectrum available remains country-specific):<br />

Region 1 (e.g. Europe and Africa)<br />

Frequency division duplex (FDD): 1920-1980 MHz (uplink), 2110-2170 MHz (downlink)<br />

Time division duplex (TDD): 1900-1920 MHz and 2010-2025 MHz (uplink and downlink)<br />

Region 2 (e.g. America)<br />

Frequency division duplex (FDD): 1850-1910 MHz (uplink), 1930-1990 MHz (downlink)<br />

Time division duplex (TDD): 1850-1910 MHz, 1930-1990 MHz and 1910-1930 MHz (uplink and downlink)<br />

Table 6: IMT2000 Frequency allocations (Source: Overview of 3GPP Release 99, ETSI Mobile Competence<br />

Centre Version xx/07/04 © Copyright ETSI 2004)<br />
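
The paired FDD bands of Table 6 imply a fixed duplex spacing between uplink and downlink. The following sketch derives that spacing from the band edges listed above and maps an uplink frequency to its paired downlink frequency. It assumes a constant offset across the whole band; the dictionary layout and function names are illustrative and not taken from any 3GPP document.<br />

```python
# FDD band edges in MHz, as listed in Table 6.
FDD_BANDS_MHZ = {
    "Region 1": {"uplink": (1920, 1980), "downlink": (2110, 2170)},
    "Region 2": {"uplink": (1850, 1910), "downlink": (1930, 1990)},
}


def duplex_spacing_mhz(region: str) -> int:
    """Offset between the paired uplink and downlink bands of a region."""
    up_lo, up_hi = FDD_BANDS_MHZ[region]["uplink"]
    dl_lo, dl_hi = FDD_BANDS_MHZ[region]["downlink"]
    if (up_hi - up_lo) != (dl_hi - dl_lo):
        raise ValueError("uplink and downlink bands differ in width")
    return dl_lo - up_lo


def paired_downlink_mhz(region: str, uplink_mhz: float) -> float:
    """Downlink frequency paired with an uplink frequency (constant offset assumed)."""
    up_lo, up_hi = FDD_BANDS_MHZ[region]["uplink"]
    if not up_lo <= uplink_mhz <= up_hi:
        raise ValueError("uplink frequency outside the FDD uplink band")
    return uplink_mhz + duplex_spacing_mhz(region)


for region in FDD_BANDS_MHZ:
    print(f"{region}: duplex spacing {duplex_spacing_mhz(region)} MHz")
```

The TDD bands are not included because uplink and downlink share the same frequencies there and are separated in time rather than in frequency.<br />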

<strong>A2.</strong>1.2.2.4 Relation of UMTS/3G to other wireless technologies<br />

For comparison, some typical data of UMTS and Wi-Fi/WiMAX are shown in Table 7. With<br />

respect to future “beyond-3G” systems, the recent evolution and successful deployment of WLANs has created a<br />

demand to integrate WLANs with 3G cellular networks. Data rates provided by the WLAN standards are far<br />

above the targeted 144 kbps of General Packet Radio Services (GPRS) and 384 kbps to 2 Mbps of the UMTS<br />

cellular systems, making WLANs attractive as an add-on service to the usual 3G<br />

cellular systems.<br />
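
The gap between these data rates can be made concrete by estimating how long a file transfer takes at each nominal rate. This is a rough sketch only: the 5 MB file size is a hypothetical example, the 54 Mbit/s figure is the IEEE 802.11 maximum listed in Table 7, and protocol overhead, contention and radio conditions are ignored, so real transfers would take longer.<br />

```python
# Nominal peak rates in bit/s. GPRS and UMTS figures come from the text;
# the Wi-Fi figure is the IEEE 802.11 maximum listed in Table 7.
NOMINAL_RATES_BPS = {
    "GPRS": 144_000,
    "UMTS (FDD)": 384_000,
    "UMTS (TDD hot spot)": 2_000_000,
    "Wi-Fi (IEEE 802.11)": 54_000_000,
}

FILE_SIZE_BYTES = 5_000_000  # hypothetical 5 MB file, chosen for illustration


def transfer_time_s(size_bytes: int, rate_bps: float) -> float:
    """Idealised transfer time in seconds, ignoring all overhead."""
    if rate_bps <= 0:
        raise ValueError("rate must be positive")
    return size_bytes * 8 / rate_bps


for name, rate in NOMINAL_RATES_BPS.items():
    print(f"{name:22s} {transfer_time_s(FILE_SIZE_BYTES, rate):8.1f} s")
```

Even as an idealised estimate, the difference of roughly two orders of magnitude between UMTS FDD and WLAN explains the interest in using WLANs to complement 3G coverage in hot spots.<br />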

The key goal of this integration is to develop heterogeneous mobile data networks capable of supporting ubiquitous<br />

data services with very high data rates in strategic locations. The effort to develop such heterogeneous networks<br />

is linked with many technical challenges including seamless vertical handovers across WLAN and 3G radio<br />

technologies, security, 3G-based authentication, unified accounting & billing, WLAN sharing (by several 3G<br />

networks), consistent QoS and service provisioning, etc.<br />

According to the report of the IST-project MUSE 23 (6th FP 507295) MA 2.4, it is recognised that in general a<br />

convergence of the fixed and mobile access architectures is necessary by using 3GPP concepts. By 2007, first<br />

trials of convergent networks based on 3GPP and next generation network approaches will be performed<br />

between fixed and mobile operators. Trials with IEEE 802.1x focused on DSL radio interworking are also<br />

possible. The main drivers are a cost effective sharing of network resources and common platforms, as well as<br />

the offering of new converged services. By 2010, a gradual integration of fixed and mobile networks,<br />

internetworking and commercial deployments depending on user demand are foreseen.<br />

Technology: 3G | Wi-Fi | WiMAX | Mobile-Fi<br />

Standard: UMTS | IEEE 802.11 | IEEE 802.16 | IEEE 802.20<br />

Maximum speed (Mbit/s): 0.384 (FDD), 2 (TDD) | 54 | 10-100 | 16<br />

Operations/Operators: Cell phone companies | Individuals, WISPs* | Individuals, WISPs | WISPs<br />

Coverage area: Micro-/macro cell, several km | 100 m | several km, up to 40 km | several km<br />

Spectrum: near 2 GHz | 2.4 GHz, near 5 GHz | 2-11 GHz, 10-66 GHz | 3.5 GHz<br />

Mobility: high (FDD), low (TDD) | yes | no mobility; mobile: 802.16e | high<br />

Table 7: Comparison of UMTS, Wi-Fi/WiMAX, and Mobile-Fi, *WISP: Wireless Internet Service Provider<br />

<strong>A2.</strong>1.2.3 Middleware<br />

In computing, middleware consists of software agents acting as an intermediary between different application<br />

components. It is used most often to support complex, distributed applications. The software agents involved<br />

may be one or many.<br />

The ObjectWeb consortium gives the following definition of middleware: "In a distributed computing system,<br />

middleware is defined as the software layer that lies between the operating system and the applications on each<br />

site of the system."<br />

Middleware is now used to describe database management systems, web servers, application servers, content<br />

management systems, and similar tools that support the application development and delivery process.<br />

Middleware is especially integral to modern information technology based on XML, SOAP, Web services, and service-oriented<br />

architecture.<br />

Middleware is the enabling technology of Enterprise application integration. (Source: Wikipedia)<br />

23 http://www.ist-muse.org/<br />

<strong>A2.</strong>1.2.4 Connectivity<br />

By recognizing the need to separate connectivity from applications we have the opportunity to unleash the<br />

power of the marketplace that has served so very well in computing and in the Internet. [Bob Frankston, 2002-<br />

01-29]<br />

Source: www.satn.org<br />

History<br />

Like any other system, our understanding of telecommunications has evolved and changed. In engineering the<br />

phone system, there were a myriad of technical problems to be solved in order to be able to carry voice<br />

conversations over long distances or even around the world. The signal had to be delivered with precise timing<br />

with every component of the network adjusted just right. Because the equipment was so expensive there was<br />

great emphasis on precise planning for capacity.<br />

Similarly, television was an amazing feat of engineering in the 1930's. It took very precise engineering to<br />

synchronize the video beam in the kinescope (the camera) with the image shown in the receiver. Many technical<br />

tricks were used including interlacing so that successive scans filled alternating lines to produce a smoother<br />

image. People then took advantage of the accidental properties, such as adding closed captioning by using the<br />

"vertical blanking interval" (the time it took to move the beam from the bottom back to the top of the screen).<br />

The rise of the Internet in the 1990's (though the process actually started decades earlier) has demonstrated that<br />

we can now treat both telephony and television as streams of bits over a packet network. In the network itself all<br />

packets are treated the same with no special handling for audio or video streams. The network doesn't even have<br />

the notion of a circuit since successive packets needn't go to the same destination.<br />

Connectivity<br />

The pragmatic definition: Connectivity is the unbiased transport of packets between two end points. This is also<br />

the essential definition of "IP" (Internet Protocol).<br />

There is a strong boundary between the IP layer and the applications built upon it. TCP, for example, is an<br />

application protocol. In the term "TCP/IP" the slash emphasizes the separation of the two.<br />

The virtuous cycle<br />

Since no application's packets get special treatment, the IP layer created a new commodity, connectivity, and set<br />

in motion the virtuous cycle of low prices generating new applications. These applications generated new<br />

demand. The new capacity created to meet this demand drove down the unit price but generated higher<br />

aggregate revenue to the connectivity providers.<br />

It is still difficult for many people to grasp the power of the virtuous cycle set in motion by an effective<br />

marketplace structure. In the 1970's the military paid millions of dollars for computers that were far less<br />

powerful than the machines we use for children's video games.<br />

It also means that now telephony and television can be treated as streams of packets built upon the connectivity<br />

layer. There is a caveat in that we need sufficient capacity in our networks to carry this traffic. Early efforts to<br />

send audio and video over the Internet were limited by the capacity of the network but it is now becoming<br />

common and accepted to listen to live events over the Internet and, unlike radio, there is no predefined limit on<br />

the quality.<br />

We now have home networks running at 100 megabits per second bought along with pencils at the local<br />

stationery store. And soon, a billion bits per second will be common. We already have Internet backbones that<br />

support a trillion bits per second per strand of fiber.<br />

<strong>A2.</strong>1.2.5 Initiatives<br />

Internet Engineering Task Force (IETF) 24 , IPv6 Forum 25 , Universal Plug and Play Forum 26 , Open Services<br />

Gateway Initiative (OSGi), 4DHomeNet 27 .<br />

24 IETF, http://www.ietf.org/<br />

25 ipv6forum, http://www.ipv6forum.com/<br />


4DHomeNet offers 4DAgent and a remote management system that supports OSGi Service Platform Release<br />

2. The system shows how home network operators can remotely manage their OSGi-based distributed<br />

residential gateways. 4DHomeNet also shows how OSGi Frameworks and DVB-MHP can work together<br />

providing a user-friendly interface via a TV set.<br />

Broadband Wireless Association 28<br />

The BWA offers its members an independent voice that is heard by regulators and licence authorities primarily<br />

throughout Europe but with strong links to North America and the Pacific Rim. It also offers essential technical<br />

and market information in its promotion and facilitation of the broadband wireless industry. Members of the<br />

Association are from all parts of the wireless industry including operators, vendors, research groups and<br />

consultants. The association conducts a number of activities, which may result in direct benefits for its members.<br />

TDD Coalition 29<br />

The TDD Coalition is a consortium of manufacturers and operators promoting the use of TDD duplexing<br />

technology in broadband wireless networks.<br />

OFDM Forum 30<br />

The OFDM Forum is a voluntary association of hardware manufacturers, software firms and other users of<br />

orthogonal frequency division multiplexing (OFDM) technology in wireless applications. The OFDM Forum<br />

was created to foster a single, compatible OFDM standard, needed to implement cost-effective, high-speed<br />

wireless networks on a variety of devices. OFDM is a cornerstone technology for the next generation of high-speed<br />

wireless data products and services for both corporate and consumer use. With the introduction of the<br />

IEEE 802.11a, ETSI BRAN, and multimedia applications, the wireless world is ready for products based on<br />

OFDM technology.<br />

Wireless Communication Association 31<br />

The Wireless Communications Association International (WCA, founded in 1988) is the non-profit trade and<br />

professional association for the wireless broadband industry with member companies on six continents<br />

representing the bulk of the sector's leading carriers, vendors and consultants. The WCA's mission is to advance<br />

the interests of the wireless carriers that provide high-speed data, Internet, voice and video services on<br />

broadband spectrum through land-based systems using reception/transmit devices in all broadband spectrum<br />

bands. The WCA is an established leader in government relations, technology standards and industry event<br />

organization. Its scope covers general fixed wireless access (including free space optics). The members provide services<br />

or products in spectrum bands such as UHF, 2.1, 2.3, 2.5, 12, 18, 23, and 28 GHz.<br />

MMAC-PC 32<br />

Japan: Multimedia Mobile Access Communication Systems Promotion Council (founded 1996). The objective<br />

of the Council is to realize MMAC as soon as possible through investigations of system specifications,<br />

demonstration experiments, information exchange and popularisation activities, and thereby contribute to the<br />

efficient use of radio frequency spectrum.<br />

Wireless World Research Forum 33 (WWRF)<br />

WWRF is a global organisation, which was founded in<br />

August 2001. Members of the Forum are: manufacturers, network operators/service providers, R&D centres,<br />

universities, and small and medium enterprises. WWRF provides a global platform for discussion of results and<br />

exchange of views to initiate global cooperation towards systems beyond 3G.<br />

26 plug and play forum: http://www.upnp.org/<br />

27 4DHomeNet, www.4dhome.net<br />

28 BWA, http://www.broadband-wireless.org/home.htm<br />

29 TDD coalition, http://www.tddcoalition.org/<br />

30 OFDM Forum, http://www.ofdm-forum.com/index.asp?ID=92<br />

31 Wireless Communication Association, http://www.wcai.com/<br />

32 MMAC-PC, http://www.arib.or.jp/mmac/e/about.htm<br />

33 WWRF, http://www.wireless-world-research.org/<br />

<strong>A2.</strong>1.2.6 R&D Activities / Projects<br />

<strong>A2.</strong>1.2.6.1 MMAC 34<br />

Within the framework of the Japanese Multimedia Mobile Access Communication Systems (MMAC) project,<br />

multimedia information is to be transmitted at ultra high speeds and with high quality "anytime and anywhere". Details<br />

of the MMAC system specifications are:<br />

High Speed Wireless Access (outdoor, indoor): Mobile communication system which can transmit at up to 30<br />

Mbit/s using the SHF and other bands (3-60 GHz). It can be used for mobile video, telephone conversations.<br />

Ultra High Speed Wireless LAN (indoor): Wireless LAN which can transmit at up to 156 Mbit/s using the<br />

millimeter wave radio band (30-300 GHz). It can be used for high quality TV conferences.<br />

5GHz Band Mobile Access (outdoor, indoor): ATM type Wireless Access and Ethernet type Wireless LAN<br />

using the 5GHz band. Each system can transmit at up to 20-25Mbit/s for multimedia information.<br />

Wireless Home-Link (indoor): Wireless Home-Link which can transmit at up to 100Mbit/s using the SHF and<br />

other frequency bands (3-60GHz). It can be used to transmit multimedia information between PCs and audio-visual<br />

equipment.<br />

<strong>A2.</strong>1.2.6.2 House_n 35<br />

House_n is a multi-disciplinary project led by researchers at the Massachusetts Institute of Technology, USA. This<br />

project also covers advanced communication technologies, e.g. Rondoni, J.C., Context-Aware Experience<br />

Sampling for the Design and Study of Ubiquitous Technologies, M.Eng. thesis 36 , Electrical Engineering and<br />

Computer Science, Massachusetts Institute of Technology, September 2003: “The paradigm of desktop<br />

computing is beginning to shift in favour of highly distributed and embedded computer systems that are<br />

accessible from anywhere at anytime. Applications of these systems, such as advanced contextually-aware<br />

personal assistants, have enormous potential not only to abet their users, but also to revolutionize the way people<br />

and computers interact.”<br />

<strong>A2.</strong>1.2.6.3 European projects<br />

(Projects which are interesting for activities “wireless 3G and beyond” and Home-Networks)<br />

WINNER 37 Wireless World Initiative New Radio, Integrated Project (38 partners), IST-2003-507581, Action<br />

Line: Mobile and wireless systems beyond 3G,<br />

The key objective of the WINNER project is to develop a totally new concept in radio access. This is built on<br />

the recognition that developing disparate systems for different purposes (cellular, WLAN, short-range access<br />

etc.) will no longer be sufficient in the future converged Wireless World. This concept will be realised in the<br />

ubiquitous radio system concept. The vision of a ubiquitous radio system concept is providing wireless access<br />

for a wide range of services and applications across all environments, from short-range to wide-area, with one<br />

single adaptive system concept for all envisaged radio environments. It will efficiently adapt to multiple<br />

scenarios by using different modes of a common technology basis. The concept will comprise the optimised<br />

combination of the best component technologies, based on an analysis of the most promising technologies and<br />

concepts available or proposed within the research community. The initial development of technologies and<br />

their combination in the system concept will be further advanced towards future system realisation. Compared to<br />

current and evolving mobile and wireless systems, the WINNER system concept will provide significant<br />

improvements in peak data rate, latency, mobile speed, spectrum efficiency, coverage, cost per bit and supported<br />

environments taking into account specified Quality-of-Service requirements. The concept will provide the<br />

wireless access underpinning the knowledge society and the eEurope initiative, enabling the "ambient<br />

intelligence" vision. To achieve this impact, the concept will be derived by a systematic approach. Advanced<br />

radio technologies will be investigated with respect to predicted user requirements and challenging scenarios.<br />

34 MMAC, http://www.arib.or.jp/mmac/e/what.htm<br />

35 House_n, http://architecture.mit.edu/house_n/)<br />

36 Thesis, http://architecture.mit.edu/house_n/web/publications/publications.htm<br />

37 Winner, https://www.ist-winner.org/<br />


The project will contribute to the global research, regulatory and standardisation process. Given the consortium<br />

pedigree, containing major players across the whole domain, such contributions will have a major impact on the<br />

future directions of the Wireless World.<br />

FUTURE HOME 38 , IST 2000-28133 (closed)<br />

The Future Home project aims to create a solid, secure, user friendly home networking concept with an open,<br />

wireless networking specification. The project introduces the use of IPv6 and Mobile IP protocols in the wireless<br />

home network. It specifies and implements prototypes of wireless home network elements and service points. It<br />

develops new services that use the capabilities of the network and verifies the feasibility of the concept in a user trial. The<br />

networking concept defines a wireless home networking platform (HNSP) with network protocols and network<br />

elements. It defines the wireless technologies and network management methods for supporting user friendliness<br />

and easy installation procedures as well as management of the wireless resources. The wireless technologies are<br />

Bluetooth, WLAN, and HiperLAN/2.<br />

BROADWAY 39 The way to broadband access at 60GHz, IST-2001-32686<br />

BROADWAY aims to propose a hybrid dual frequency system based on a tight integration of HIPERLAN/2<br />

OFDM high spectrum efficiency technology at 5GHz and an innovative fully ad-hoc extension of it at 60GHz<br />

named HIPERSPOT. This concept extends and complements existing 5GHz broadband wireless LAN systems<br />

in the 60GHz range for providing a new solution to very dense urban deployments and hot spot coverage. This<br />

system is to guarantee nomadic terminal mobility in combination with higher capacity (achieving data rates<br />

exceeding 100Mbps). This tight integration between both types of system (5/60GHz) will result in wider<br />

acceptance and lower cost of both systems through massive silicon reuse. This new radio architecture will by<br />

construction inherently provide backward compatibility with current 5GHz WLANs (ETSI BRAN<br />

HIPERLAN/2). BroadWay is obviously part of the 4G scenario, as it complements the wide area infrastructure<br />

by providing a new hybrid air interface technology working at 5 GHz and at 60 GHz. This air interface is<br />

expected to be particularly innovative as it addresses the new concept of convergence between wireless local<br />

area network and wireless personal area network systems.<br />

NEWCOM 40 , Network of Excellence in Wireless Communications,<br />

Action Line: Mobile and Wireless Systems beyond 3G, FP6-507325<br />

NEWCOM (more than 60 partners) aims at creating a European network that links in a cooperative way a large<br />

number of leading research groups addressing the strategic objective "Mobile and wireless systems beyond 3G".<br />

NEWCOM will implement an elaborate plan of initiatives which revolve around the key notion and strategic<br />

choice of a Virtual Knowledge Centre: NEWCOM will effectively act as a distributed university, organised in a<br />

matrix fashion: the columns will represent the seven NEWCOM (Disciplinary) departments, while the rows will<br />

represent NEWCOM projects.<br />

Department 1 Analysis and Design of Algorithms for Signal Processing at Large in Wireless Systems<br />

Department 2 Radio Channel Modelling for Design Optimisation and Performance Assessment of Next<br />

Generation Communication Systems<br />

Department 3 Design, modelling and experimental characterisation of RF and microwave devices and<br />

subsystems<br />

Department 4 Analysis, Design and Implementation of Digital Architectures and Circuits<br />

Department 5 Source Coding and Reliable Delivery of Multimedia Contents<br />

Department 6 Protocols and Architectures, and Traffic Modelling for (Reconfigurable / Adaptive) Wireless<br />

Networks<br />

Department 7 QoS Provision in Wireless Networks: Radio Resource Management, Mobility, and Security<br />

Project A Ad Hoc and Sensor Networks<br />

Project B Ultra-wide Band Communication Systems<br />

Project C Functional Design Aspects of Future Generation Wireless Systems<br />

Project D Reconfigurable radio for interoperable transceivers<br />

Project E Cross Layer Optimisation<br />

38 FUTURE HOME, http://future-home.org<br />

39 Broadway: http://www.ist-broadway.org/description.html<br />

40 NEWCOM, http://dbs.cordis.lu/fep-cgi/srchidadb?ACTION=D&CALLER=PROJ_IST&QM_EP_RCN_A=71453<br />

MediaNet 41<br />

Action Line: Networked Audio Visual Systems and Home Platforms, FP6-507452<br />

The project (IP with more than 30 partners) aims at developing technologies, infrastructure and service solutions<br />

enabling the easy exchange of digital content and audio-video content between creators, providers, customers<br />

and citizens. MediaNet will identify and develop a set of representative and appealing end-to-end applications,<br />

key enabling technologies, a reference architecture and its key interfaces for an easy and smooth exchange of<br />

content all along the media supply chain. By developing and maintaining a shared vision, the project aims to<br />

assure all stakeholders that all subparts of the media chain work together effectively and seamlessly.<br />

The project covers three complementary domains: media networking, multimedia services, and content<br />

engineering. The project will develop new management service platforms for broadband access networks and<br />

open home networking, and storage solutions. Wireless communication solutions for audio-video content, new<br />

end-to-end service provisioning over shared public and private infrastructures, mixing video broadcast over<br />

broadband access, content on demand, and interactive online applications will be proposed, as well as personal<br />

multimedia communications supported by portable terminals and person-to-person communication services over<br />

IP.<br />

A common open and shared delivery platform will be created covering the broadband access and the home<br />

domains, where all devices and services will interoperate, combining advanced multimedia content, services,<br />

and communications in public and residential environments. MediaNet, which is centered at the intersection of<br />

the audio-video, PC and telecom industries, actively participates in the development of such next-generation<br />

connected digital applications and devices by addressing some of the key technical issues:<br />

• the development of an open multi-vendor/multi-service business reference architecture and a technical<br />

roadmap;<br />

• broadband access and home networking to support multiple overlay end-to-end applications by third<br />

parties;<br />

• shared services, infrastructures, and equipment while assuring investment protection, interoperability and<br />

competition;<br />

• Digital Rights Management (DRM) and end-to-end content protection solutions;<br />

• N-Services, e-Service platforms, Digital Video Broadcasting over IP, Multimedia Home Platform,<br />

gateways, wireless solutions for audio/video streaming, open distributed storage, Multimedia<br />

communications over IP services and terminals, MPEG4/AVC encoding and decoding circuits, HQ A/V<br />

streaming over IP.<br />

MOCCA 42 The Mobile Cooperation and Coordination Action, FP6-2004-IST-2<br />

Action Line: Programme Level Accompanying Measures<br />

The MOCCA coordination action will facilitate collaboration between projects addressing mobile and wireless<br />

issues, within the European Research Area (ERA), between projects in the ERA and research programmes in<br />

Asia and the US, and between researchers and projects in the ERA and their counterparts in the developing<br />

regions of the world. It will address this collaboration in the context of the research and development of future<br />

mobile and wireless systems, including the services and applications they serve. MOCCA will facilitate<br />

European and international collaboration regarding research on future wireless systems and their applications. It<br />

will pave the way towards harmonised international standards for future mobile and wireless systems so that the<br />

systems meet the needs of users worldwide. The MOCCA approach is open to all. All interested ERA projects<br />

will be invited to participate in the activities organised by the project. Inter-continental collaboration with all<br />

major mobile and wireless research programmes and standardisation fora will be supported. MOCCA results<br />

will lead to the development of future applications, services and wireless networks, which meet the needs of<br />

users worldwide, building on Europe's strength in the mobile sector. In the long term, MOCCA results will<br />

improve the impact of the research results of the ERA wireless related projects on global standardisation<br />

activities and in the global market.<br />

WIND-FLEX 43 , Wireless Indoor Flexible High Bitrate Modem Architecture, IST-1999-10025 (closed)<br />

41 http://www.ist-ipmedianet.org/home.html<br />

42 http://mocca.objectweb.org/<br />

43 WINDFLEX, http://labreti.ing.uniroma1.it/windflex/<br />

A high bit-rate flexible and configurable modem architecture is investigated, which works in single-hop, ad hoc<br />

networks and provides wireless access to the Internet in an indoor environment where slow mobility is required. The main<br />

emphasis is on OSI layers 1 and 2. The best possible performance with a reasonable complexity is attained by<br />

using a jointly optimised adaptive system which includes the multiple access method, diversity, modulation and<br />

coding, and equalization and decoding.<br />

The system is not optimised in advance; instead it will be adaptive and configurable at run time. This is a step<br />

towards an SDR (software-defined radio), which is presently too far in the future due to technological problems.<br />

Bit rates from 64 kbit/s up to 100 Mbit/s are considered in the 17 GHz frequency band. The modulation was<br />

OFDM with 128 subcarriers and a channel bandwidth of 50 MHz; the modulation schemes were BPSK, QPSK, 16QAM and<br />

64QAM. The bit rate is variable depending on the user needs and channel conditions. Flexibility is attained by<br />

using a multicarrier modulation method, even though single-carrier methods will also be considered. The best<br />

possible methods are used including joint diversity, modulation and coding (such as space-time coding) in the<br />

transmitter and joint equalization, decoding and channel estimation (such as per-survivor processing) in the<br />

receiver. The work is done by high-quality research groups that use compatible simulation tools, so that<br />

almost full (off-line) simulation is possible. The driving force is research, not the existing standards.<br />
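
As a rough plausibility check on the WIND-FLEX figures quoted above, the following Python sketch derives the subcarrier spacing and an idealised upper bound on the raw bit rate for each modulation scheme. It assumes, beyond what the project description states, that all 128 subcarriers carry data, that the subcarriers fill the 50 MHz channel evenly, and that there is no guard interval, pilot overhead or channel coding. The function and constant names are illustrative only.

```python
# Idealised OFDM arithmetic for the parameters quoted in the text.
# Assumptions (not from the project description): every subcarrier carries
# data, no guard interval, no pilots, no channel coding.

BANDWIDTH_HZ = 50e6      # channel bandwidth quoted in the text
N_SUBCARRIERS = 128      # number of OFDM subcarriers quoted in the text

# Bits carried by one symbol of each modulation scheme listed in the text.
BITS_PER_SYMBOL = {"BPSK": 1, "QPSK": 2, "16QAM": 4, "64QAM": 6}


def subcarrier_spacing_hz(bandwidth_hz=BANDWIDTH_HZ, n_subcarriers=N_SUBCARRIERS):
    """Spacing between adjacent subcarriers, assuming they fill the channel."""
    return bandwidth_hz / n_subcarriers


def raw_bit_rate_bps(modulation, bandwidth_hz=BANDWIDTH_HZ, n_subcarriers=N_SUBCARRIERS):
    """Upper bound on the uncoded bit rate for one modulation scheme."""
    # One OFDM symbol lasts 1/spacing seconds, so the symbol rate equals the spacing.
    symbols_per_second = subcarrier_spacing_hz(bandwidth_hz, n_subcarriers)
    return n_subcarriers * BITS_PER_SYMBOL[modulation] * symbols_per_second


if __name__ == "__main__":
    print(f"subcarrier spacing: {subcarrier_spacing_hz() / 1e3:.3f} kHz")
    for name in BITS_PER_SYMBOL:
        print(f"{name}: {raw_bit_rate_bps(name) / 1e6:.0f} Mbit/s (idealised)")
```

Under these assumptions the bound ranges from 50 Mbit/s (BPSK) to 300 Mbit/s (64QAM). The 100 Mbit/s maximum quoted above is lower, which would be consistent with coding and guard-interval overhead, although the source does not give that breakdown.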

NGN INITIATIVE 44 Next Generation Networks Initiative, IST-2000-26418 (closed)<br />

The NGN Initiative's mission is to establish the infrastructure to operate the first open environment in which research<br />

on the whole range of Next Generation Networks (NGN) topics can be discussed, consensus achieved and<br />

collective outputs disseminated to the appropriate international standards bodies, fora, and other organisations.<br />

Because these topics are of worldwide interest, some of the Internet-related topics addressed here will inevitably also be<br />

covered in the US Next Generation Internet (NGI 45 ) programme. The initiative also provides inputs to the EU FP6 programme.<br />

WWRI 46 Wireless World Research Initiative, IST-2001-37680 (closed)<br />

The WWRI was an accompanying measure under the IST-programme in the Fifth Framework Programme. The<br />

project started in June 2002 for 10 months. Key players in the wireless sector initiated the WWRI project to<br />

provide a launch pad to the wireless community (industry and academia) for a balanced cooperative research<br />

programme for the Wireless World. The work done in WWRI was useful for the preparation of Integrated<br />

Projects for the 6th EU Framework Programme.<br />

A2.1.2.6.4 National projects<br />

WIGWAM is part of the Central Innovation Program "Mobile Internet" which is funded by the German Ministry<br />

of Education and Research (BMBF). The objective of WIGWAM is the design of a complete system for<br />

wireless communication with a maximum transmission data rate of 1 Gbit/s.<br />

The targeted spectrum is the 5 GHz band and the extension bands 17, 24, and 60 GHz. Depending on the<br />

mobility of the user, the data rate should be scalable.<br />

The goal is a "1 Gbit/s component" of a heterogeneous future mobile communication system. All aspects of such<br />

a system will be investigated, from the hardware platform to the protocols, which are subject to very strong<br />

requirements given the extremely high data rate of 1 Gbit/s.<br />

The main application area is the transmission of multimedia content in so-called hot-spots (see figure below), in<br />

home scenarios, and in large offices where an enormous data rate back-off is necessary, e.g. to supply the user<br />

with short-term high data rates, or to enable a true plug-and-play without any frequency planning (particularly<br />

important in home scenarios). In order to be able to include such a high data rate air-interface into a future<br />

heterogeneous mobile communications system, high-mobility applications are also covered.<br />

A2.1.3 Issues and technical trends / gap analysis<br />

A2.1.3.1 Technical Trends<br />

A2.1.3.1.1 Cabled Home Network<br />

44 NGNI, http://www.ngni.org/overview.htm<br />

45 Next Generation Internet, http://www.ngi.de/<br />

46 http://www.ist-wwri.org/project.html<br />

While optical-fibre-based 10 Gigabit Ethernet is already in operation, copper-cable-based 10 Gigabit Ethernet is<br />

on its way: in September 2004 the IEEE completed its 10GBASE-T draft (802.3an Draft 1.0), which will most probably<br />

be accepted in mid 2006. Copper-cable-based 10 Gbit/s Ethernet is expected to lower the<br />

cost of the interface considerably compared to the optical-fibre-based version. Distances of 15 m will be supported.<br />

A2.1.3.1.2 Wireless Home Network<br />

In the UMTS cellular network HSDPA (High Speed Downlink Packet Access) and HSUPA (High Speed Uplink<br />

Packet Access) are the most recent enhancements. While HSDPA has been standardized in 3GPP Release 5,<br />

HSUPA is not yet fixed.<br />

HSDPA is a packet-based data service in the W-CDMA downlink with data transmission up to 8-10 Mbps over<br />

a 5 MHz bandwidth. HSDPA implementations include Adaptive Modulation and Coding up to 16QAM, Hybrid<br />

Automatic Repeat Request (HARQ), fast cell search, and advanced receiver design. Multiple-Input Multiple-Output<br />

(MIMO) systems are a work item in the Release 6 specifications, which will support even higher data transmission<br />

rates up to 20 Mbps.<br />
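
To put the HSDPA figures above in perspective, the short Python sketch below converts the quoted peak rates into spectral efficiency (bit/s per Hz). It assumes that the 5 MHz channel bandwidth is the right denominator for every case, including the MIMO figure, which the text does not state explicitly. The names used are illustrative only.

```python
# Spectral efficiency implied by the peak rates quoted in the text.
# Assumption (not stated in the text): the MIMO rate also refers to a
# single 5 MHz channel.

CHANNEL_BANDWIDTH_HZ = 5e6

# Peak data rates quoted in the text, in bit/s.
PEAK_RATES_BPS = {
    "HSDPA, lower figure": 8e6,
    "HSDPA, upper figure": 10e6,
    "HSDPA with MIMO": 20e6,
}


def spectral_efficiency(bit_rate_bps, bandwidth_hz=CHANNEL_BANDWIDTH_HZ):
    """Return the bit rate carried per hertz of channel bandwidth."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return bit_rate_bps / bandwidth_hz


if __name__ == "__main__":
    for label, rate in PEAK_RATES_BPS.items():
        print(f"{label}: {spectral_efficiency(rate):.1f} bit/s/Hz")
```

Under this assumption the quoted rates correspond to roughly 1.6 to 2.0 bit/s/Hz without MIMO and 4.0 bit/s/Hz with MIMO.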