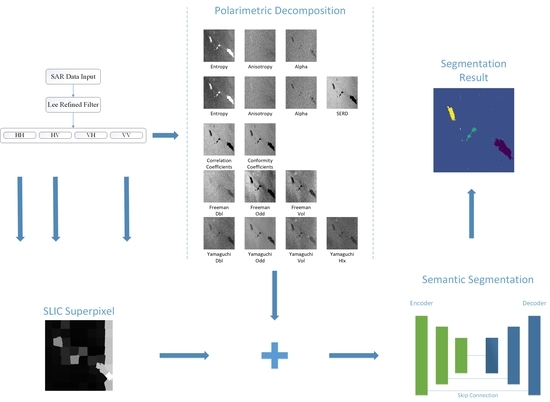

Figure 1.

Flow chart of our oil spill detection method.

Figure 2.

Structure of the neural network in our segmentation method. Blue and green blocks denote the encoder, which consists of multiple convolution layers using depthwise separable, dilated and standard convolution kernels. Purple-red blocks constitute the decoder, which outputs a classification map of the same size as the original image.
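
Depthwise separable convolution splits a standard k × k convolution into a per-channel (depthwise) k × k filter and a 1 × 1 (pointwise) filter that mixes channels. The sketch below counts the weights of both variants to show why the split is cheaper. The function names and the channel counts in the example are hypothetical, since the channel counts are not listed in Table 1, and bias terms are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k standard convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 3 x 3 kernel, 32 input channels, 64 output channels.
    print(standard_conv_params(3, 32, 64))        # 18432
    print(depthwise_separable_params(3, 32, 64))  # 2336
```

For this hypothetical layer the separable version needs roughly one eighth of the weights.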

Figure 3.

Polarized parameter extraction. We extracted 13 polarized parameters in total, divided into five groups as indicated by the boxes in the figure; each group was input into the neural network for classification.
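
The grouping can be written down explicitly. The sketch below, whose string labels are illustrative, checks that the five groups cover 13 distinct parameters (entropy, anisotropy and alpha are shared by the first two groups) and counts the input channels per group, assuming each parameter is stacked as one input channel. That assumption fits Table 10, where groups with the same number of parameters use the same amount of memory.

```python
# Parameter groups as shown in Figures 3 and 7 (labels are illustrative).
PARAMETER_GROUPS = {
    "H/A/Alpha": ["entropy", "anisotropy", "alpha"],
    "H/A/SERD/Alpha": ["entropy", "anisotropy", "alpha", "SERD"],
    "Scattering coefficients": ["correlation", "conformity"],
    "Freeman": ["freeman_double", "freeman_surface", "freeman_volume"],
    "Yamaguchi": ["yamaguchi_double", "yamaguchi_helix",
                  "yamaguchi_surface", "yamaguchi_volume"],
}


def input_channels(group: str) -> int:
    """Channels fed to the network for one group, assuming one channel per parameter."""
    return len(PARAMETER_GROUPS[group])


# Entropy, anisotropy and alpha appear in both of the first two groups.
unique_parameters = {p for params in PARAMETER_GROUPS.values() for p in params}

if __name__ == "__main__":
    print(len(PARAMETER_GROUPS))   # 5
    print(len(unique_parameters))  # 13
    for name in PARAMETER_GROUPS:
        print(name, input_channels(name))
```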

Figure 4.

Convolution kernels: (a) a standard kernel, which has a receptive field of , and (b) a dilated kernel with dilation rate = 2, whose receptive field is .
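
Inserting d − 1 gaps between neighbouring kernel taps enlarges the region a kernel covers without adding weights: a k × k kernel with dilation rate d spans k + (k − 1)(d − 1) pixels per axis. The sketch below computes that extent and the receptive field of a stack of stride-1 layers; the 3 × 3 kernels used in the tests are hypothetical examples, not values taken from this figure.

```python
def dilated_kernel_extent(k: int, d: int) -> int:
    """Pixels spanned along one axis by a k-tap kernel with dilation rate d.

    The d - 1 gaps between each pair of neighbouring taps stretch the kernel
    from k to k + (k - 1) * (d - 1) pixels.
    """
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(layers) -> int:
    """Receptive field of stride-1 layers given as (kernel, dilation) pairs.

    Each stride-1 layer grows the receptive field by (extent - 1) pixels.
    """
    rf = 1
    for k, d in layers:
        rf += dilated_kernel_extent(k, d) - 1
    return rf


if __name__ == "__main__":
    print(dilated_kernel_extent(3, 1))                # 3
    print(dilated_kernel_extent(3, 2))                # 5
    print(stacked_receptive_field([(3, 1), (3, 2)]))  # 7
```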

Figure 5.

Convolution process of a transposed convolution layer.
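
A transposed convolution upsamples by letting each input value scatter a scaled copy of the kernel into the output. The copies are spaced `stride` samples apart and overlaps are summed. The 1-D, single-channel sketch below is illustrative only, since the decoder layers are 2-D and multi-channel. Its output length follows the usual relation (n − 1)·stride − 2·padding + k, which matches, for example, PyTorch's `ConvTranspose1d` with dilation 1 and no output padding.

```python
def transposed_conv1d(x, kernel, stride=1, padding=0):
    """Naive 1-D transposed convolution (single channel, no bias).

    Input sample i adds x[i] * kernel into the output starting at position
    i * stride; overlapping contributions are summed. `padding` then trims
    that many samples from each end, giving length (n - 1) * stride - 2 * padding + k.
    """
    n, k = len(x), len(kernel)
    full = [0.0] * ((n - 1) * stride + k)
    for i, xi in enumerate(x):
        for j, w in enumerate(kernel):
            full[i * stride + j] += xi * w
    return full[padding:len(full) - padding]


if __name__ == "__main__":
    # Two inputs, a 3-tap kernel of ones and stride 2: copies overlap at index 2.
    print(transposed_conv1d([1, 2], [1, 1, 1], stride=2))  # [1.0, 1.0, 3.0, 2.0, 2.0]
```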

Figure 6.

Three oil spill datasets used in the experiments. Left: original images; right: images processed by the Refined Lee filter. (a1,a2) Image 1, acquired by Radarsat-2; (b1,b2) Image 2 (PR11588), acquired by the Spaceborne Imaging Radar-C/X-Band Synthetic Aperture Radar (SIR-C/X-SAR); (c1,c2) Image 3 (PR44327), acquired by SIR-C/X-SAR.

Figure 7.

All polarized features extracted from the Radarsat-2 data. (a1–a3) H/A/Alpha decomposition: a1 entropy, a2 anisotropy, a3 alpha. (b1–b4) H/A/Alpha decomposition and Single-Bounce Eigenvalue Relative Difference (SERD): b1 entropy, b2 anisotropy, b3 alpha, b4 SERD. (c1,c2) Scattering coefficients calculated from the scattering matrix: c1 co-polarized correlation coefficient, c2 conformity coefficient. (d1–d3) Freeman 3-component decomposition: d1 double-bounce scattering, d2 rough-surface scattering, d3 volume scattering. (e1–e4) Yamaguchi 4-component decomposition: e1 double-bounce scattering, e2 helix scattering, e3 rough-surface scattering, e4 volume scattering.
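
The H/A/Alpha parameters follow from the eigen-decomposition of the 3 × 3 coherency matrix under the standard Cloude-Pottier definitions: p_i = λ_i / Σλ, entropy H = −Σ p_i log₃ p_i, anisotropy A = (λ₂ − λ₃)/(λ₂ + λ₃), and mean alpha ᾱ = Σ p_i α_i. The sketch below assumes the eigenvalues and the alpha angle of each eigenvector are already computed; it does not cover SERD or the Freeman and Yamaguchi decompositions.

```python
import math


def h_a_alpha(eigenvalues, alphas_deg):
    """Entropy, anisotropy and mean alpha from a coherency-matrix eigen-decomposition.

    eigenvalues: the three non-negative eigenvalues, in any order.
    alphas_deg:  alpha angle (degrees) of the eigenvector matching each eigenvalue.
    """
    # Sort so that lambda1 >= lambda2 >= lambda3, keeping each alpha with its eigenvalue.
    pairs = sorted(zip(eigenvalues, alphas_deg), reverse=True)
    lams = [lam for lam, _ in pairs]
    total = sum(lams)
    probs = [lam / total for lam in lams]

    # Log base 3 keeps H in [0, 1]; zero probabilities contribute nothing.
    entropy = -sum(p * math.log(p, 3) for p in probs if p > 0)

    l2, l3 = lams[1], lams[2]
    anisotropy = (l2 - l3) / (l2 + l3) if (l2 + l3) > 0 else 0.0

    mean_alpha = sum(p * alpha for p, (_, alpha) in zip(probs, pairs))
    return entropy, anisotropy, mean_alpha


if __name__ == "__main__":
    # Equal eigenvalues: fully random scattering, so H = 1 and A = 0.
    print(h_a_alpha([1.0, 1.0, 1.0], [0.0, 45.0, 90.0]))
```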

Figure 8.

Simple Linear Iterative Clustering (SLIC) superpixel segmentation results. (a) Image 1, (b) Image 2 and (c) Image 3.
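
SLIC seeds cluster centres on a regular grid with spacing S = √(N/K) for N pixels and K superpixels, then assigns each pixel to the nearby centre that minimizes D = √(d_c² + (d_s/S)² m²). Here d_c is the feature distance, d_s is the spatial distance and m is a compactness weight. The sketch below implements only these two formulas. The features and compactness value used in our experiments are not stated in these captions, so the inputs are placeholders.

```python
import math


def grid_interval(num_pixels, num_superpixels):
    """Approximate spacing S between the initial SLIC cluster centres."""
    return math.sqrt(num_pixels / num_superpixels)


def slic_distance(feat_a, feat_b, pos_a, pos_b, S, m):
    """SLIC distance D = sqrt(d_c^2 + (d_s / S)^2 * m^2).

    feat_a, feat_b: per-pixel feature vectors (CIELAB colour in the original
                    SLIC; other per-pixel features could be substituted).
    pos_a, pos_b:   (row, col) pixel coordinates.
    S:              grid interval from grid_interval().
    m:              compactness weight; larger values give more compact superpixels.
    """
    d_c = math.dist(feat_a, feat_b)  # feature distance
    d_s = math.dist(pos_a, pos_b)    # spatial distance in pixels
    return math.sqrt(d_c ** 2 + (d_s / S) ** 2 * m ** 2)


if __name__ == "__main__":
    S = grid_interval(10_000, 100)  # hypothetical 100 x 100 image, 100 superpixels
    print(S)                        # 10.0
    print(slic_distance([0.5], [0.5], (0, 0), (0, 10), S, m=10.0))  # 10.0
```

In practice a library routine such as scikit-image's `skimage.segmentation.slic`, whose `n_segments` and `compactness` arguments play the roles of K and m, would run the full iterative clustering.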

Figure 9.

Results of verifying dark-spot areas with the polarized parameters. In each group, panels 1–3 come from Image 1 (1: emulsion, 2: biogenic look-alike, 3: oil spill area); panels 4 and 5 show biogenic look-alike and oil spill areas of Image 2 and Image 3. (a1–a5) Ground truth, (b1–b5) H/A/Alpha, (c1–c5) H/A/SERD/Alpha, (d1–d5) Scattering Coefficients, (e1–e5) Freeman 3-Component Decomposition, (f1–f5) Yamaguchi 4-Component Decomposition.

Figure 10.

Results of verifying dark-spot areas with the polarized parameters combined with SLIC superpixel segmentation. In each group, panels 1–3 come from Image 1 (1: emulsion, 2: biogenic look-alike, 3: oil spill area); panels 4 and 5 show biogenic look-alike and oil spill areas of Image 2 and Image 3. (a1–a5) Ground truth, (b1–b5) H/A/Alpha, (c1–c5) H/A/SERD/Alpha, (d1–d5) Scattering Coefficients, (e1–e5) Freeman 3-Component Decomposition, (f1–f5) Yamaguchi 4-Component Decomposition.
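
The captions do not spell out how the SLIC result is combined with the CNN output. One common scheme, assumed here purely for illustration, is a majority vote: every pixel in a superpixel receives the class predicted most often inside that superpixel, which removes isolated misclassified pixels. The function below is a hypothetical sketch of that scheme on flattened label arrays.

```python
from collections import Counter, defaultdict


def superpixel_majority_vote(pixel_labels, superpixel_ids):
    """Replace each pixel's class with the most frequent class in its superpixel.

    pixel_labels:   per-pixel class predictions (flattened).
    superpixel_ids: per-pixel superpixel index (same length).
    Ties go to the class encountered first within the superpixel.
    """
    members = defaultdict(list)
    for label, sp in zip(pixel_labels, superpixel_ids):
        members[sp].append(label)
    winner = {sp: Counter(labels).most_common(1)[0][0]
              for sp, labels in members.items()}
    return [winner[sp] for sp in superpixel_ids]


if __name__ == "__main__":
    labels = ["OS", "OS", "LA", "CS", "CS", "SH"]
    superpixels = [0, 0, 0, 1, 1, 1]
    print(superpixel_majority_vote(labels, superpixels))
    # ['OS', 'OS', 'OS', 'CS', 'CS', 'CS']
```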

Figure 11.

SLIC superpixel segmentation results with different superpixel numbers. (a) 150, (b) 200, (c) 250, (d) 300, (e) 350, (f) 400.

Figure 12.

Full-scene classification results using the Yamaguchi 4-component parameters. Left: results without SLIC superpixels; right: results with SLIC superpixels. (a1,a2) Image 1, (b1,b2) Image 2, (c1,c2) Image 3.

Table 1.

Detailed parameters of the segmentation network.

| Layer | Filter | Kernel Size | Strides |
|---|---|---|---|
| Conv 1 | depthwise | | 1 |
| Conv 2 | Conv | | 2 |
| Conv 3 | depthwise | | 1 |
| Conv 4 | Conv | | 2 |
| Conv 5 | dilated | | 1 |
| Conv 6 | Conv | | 2 |
| Conv 7 | Conv | | 2 |
| Conv 8 | Conv | | 1 |
| Conv 9 | Conv | | 3 |
| Conv 10 | Conv | | 1 |
| Res 1 | Conv | | 4 |
| Res 2 | Conv | | 4 |
| Deconv 1 | transposed | | 1 |
| Deconv 2 | transposed | | 8 |
| Deconv 2_1 | transposed | | 4 |
| Deconv 3 | transposed | | 2 |
| Deconv 3_1 | transposed | | 2 |
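
The strides in Table 1 determine how far the encoder shrinks the input. The sketch below traces the feature-map size through Conv 1 to Conv 10, assuming these layers are applied in sequence with "same" padding; the padding and the wiring of the Res and Deconv layers are not given in the table, so both are assumptions. Under this assumption the chain downsamples by 2 × 2 × 2 × 2 × 3 = 48, a factor the decoder has to recover to return a map of the original size.

```python
import math

# Strides of Conv 1 to Conv 10 as listed in Table 1.
ENCODER_STRIDES = [
    ("Conv 1", 1), ("Conv 2", 2), ("Conv 3", 1), ("Conv 4", 2), ("Conv 5", 1),
    ("Conv 6", 2), ("Conv 7", 2), ("Conv 8", 1), ("Conv 9", 3), ("Conv 10", 1),
]


def trace_sizes(size, layers=ENCODER_STRIDES):
    """Side length of the feature map after each layer, assuming 'same' padding,
    under which a stride-s layer maps n pixels to ceil(n / s)."""
    sizes = []
    for name, stride in layers:
        size = math.ceil(size / stride)
        sizes.append((name, size))
    return sizes


# Overall downsampling factor of the chain: 2 * 2 * 2 * 2 * 3 = 48.
TOTAL_STRIDE = math.prod(stride for _, stride in ENCODER_STRIDES)

if __name__ == "__main__":
    for name, side in trace_sizes(96):  # hypothetical 96 x 96 input patch
        print(name, side)
```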

Table 2.

Details of Synthetic Aperture Radar (SAR) image acquisition.

| Image ID | 137348 | PR11588 | PR44327 |
|---|---|---|---|
| Radar Sensor | Radarsat-2 | SIR-C/X-SAR | SIR-C/X-SAR |
| SAR Band | C | C | C |
| Pixel Spacing (m) | | | |
| Radar Center Frequency (Hz) | | | |
| Center Incidence Angle (deg) | 35.287144 | 23.600 | 45.878 |

Table 3.

Characteristics of polarized parameters in experiments.

| Parameter | Clean Sea | Look-Alike | Emulsion | Oil Spill | Ship |
|---|---|---|---|---|---|
| Entropy | low | high | higher | higher | higher |
| Anisotropy | low | low | low | low | low |
| Alpha | low | lower | low | low | low |
| SERD | high | low | lower | lower | lower |
| Correlation Coefficient | high | low | lower | lower | lower |
| Conformity Coefficient | high | low | lower | lower | lower |
| Freeman Double-Bounce | high | low | low | lower | higher |
| Freeman Rough-Surface | high | low | low | low | higher |
| Freeman Volume | high | lower | lower | low | higher |
| Yamaguchi Double-Bounce | high | low | low | lower | higher |
| Yamaguchi Helix | low | lower | lower | lower | high |
| Yamaguchi Rough-Surface | high | low | lower | lower | higher |
| Yamaguchi Volume | high | lower | lower | low | higher |
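
Table 3 lists qualitative trends for the two scattering coefficients. For reference, the definitions commonly used in the oil spill literature, assumed here because the captions do not give them, are the co-polarized correlation coefficient ρ = |⟨S_HH S_VV*⟩| / √(⟨|S_HH|²⟩⟨|S_VV|²⟩) and the conformity coefficient μ = 2(Re⟨S_HH S_VV*⟩ − ⟨|S_HV|²⟩) / (⟨|S_HH|²⟩ + 2⟨|S_HV|²⟩ + ⟨|S_VV|²⟩), where ⟨·⟩ is an average over a local window. For clean sea, where HH and VV returns are strongly correlated and cross-polarized power is small, both are high, in line with the table. The sketch below computes them from window samples of the scattering matrix elements.

```python
import math


def _mean(values):
    """Arithmetic mean of an iterable (works for real or complex values)."""
    values = list(values)
    return sum(values) / len(values)


def copol_correlation(s_hh, s_vv):
    """|<S_HH S_VV*>| / sqrt(<|S_HH|^2> <|S_VV|^2>) over a window of samples."""
    cross = _mean(hh * vv.conjugate() for hh, vv in zip(s_hh, s_vv))
    p_hh = _mean(abs(hh) ** 2 for hh in s_hh)
    p_vv = _mean(abs(vv) ** 2 for vv in s_vv)
    return abs(cross) / math.sqrt(p_hh * p_vv)


def conformity(s_hh, s_hv, s_vv):
    """2 (Re<S_HH S_VV*> - <|S_HV|^2>) / (<|S_HH|^2> + 2 <|S_HV|^2> + <|S_VV|^2>)."""
    cross = _mean(hh * vv.conjugate() for hh, vv in zip(s_hh, s_vv)).real
    p_hh = _mean(abs(hh) ** 2 for hh in s_hh)
    p_hv = _mean(abs(hv) ** 2 for hv in s_hv)
    p_vv = _mean(abs(vv) ** 2 for vv in s_vv)
    return 2 * (cross - p_hv) / (p_hh + 2 * p_hv + p_vv)


if __name__ == "__main__":
    # Identical HH and VV, no cross-pol power: both coefficients equal 1.
    hh = [1 + 1j, 2 + 0j]
    print(copol_correlation(hh, hh))
    print(conformity(hh, [0j, 0j], hh))
```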

Table 4.

Number of samples in each category (CS: clean sea; EM: emulsion; LA: look-alike; OS: oil spill; SH: ship).

| Areas | CS | EM | LA | OS | SH |
|---|---|---|---|---|---|
| Training set | 80 | 75 | 82 | 88 | 31 |
| Test set | 27 | 25 | 28 | 30 | 12 |

Table 5.

Mean Intersection over Union (MIoU) for each class and each group of polarized parameters.

| Parameters | CS (without SLIC) | EM (without SLIC) | LA (without SLIC) | OS (without SLIC) | SH (without SLIC) | CS (with SLIC) | EM (with SLIC) | LA (with SLIC) | OS (with SLIC) | SH (with SLIC) |
|---|---|---|---|---|---|---|---|---|---|---|
| H/A/Alpha | 94.0% | 70.8% | 84.2% | 85.7% | 10.5% | 95.6% | 88.3% | 89.7% | 93.4% | 39.7% |
| H/A/SERD/Alpha | 94.5% | 80.7% | 84.9% | 88.3% | 11.7% | 95.8% | 91.0% | 91.7% | 95.1% | 43.1% |
| Scattering coefficients | 94.1% | 27.3% | 82.3% | 80.2% | 6.8% | 94.7% | 85.8% | 84.2% | 90.1% | 41.5% |
| Freeman | 95.8% | 80.7% | 82.4% | 90.6% | 60.7% | 96.3% | 91.1% | 91.5% | 95.1% | 48.3% |
| Yamaguchi | 96.1% | 81.8% | 85.4% | 94.0% | 75.0% | 96.9% | 94.1% | 94.6% | 96.8% | 70.2% |
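
The standard per-class IoU, assumed here to be the metric behind Tables 5 to 8, is TP / (TP + FP + FN) for each class, and MIoU is the average over classes. The sketch below computes both from a confusion matrix; the 2 × 2 matrix in the example is made up for illustration.

```python
import math


def iou_per_class(confusion):
    """IoU_c = TP / (TP + FP + FN) for each class of a square confusion matrix.

    confusion[i][j] counts pixels whose true class is i and predicted class is j.
    A class absent from both ground truth and prediction gets NaN.
    """
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # pixels of class c that were missed
        fp = sum(confusion[r][c] for r in range(n)) - tp  # other pixels labelled as c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else math.nan)
    return ious


def mean_iou(confusion):
    """Average of the per-class IoUs, skipping NaN entries."""
    valid = [v for v in iou_per_class(confusion) if not math.isnan(v)]
    return sum(valid) / len(valid)


if __name__ == "__main__":
    cm = [[8, 2],
          [1, 9]]
    print(iou_per_class(cm))  # IoUs of 8/11 and 9/12
    print(mean_iou(cm))
```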

Table 6.

Total MIoU results of each group of polarimetric parameters.

| Parameters | H/A/Alpha | H/A/SERD/Alpha | Scattering coefficients | Freeman | Yamaguchi |
|---|---|---|---|---|---|
| Without SLIC Superpixel | 69.0% | 72.0% | 58.1% | 82.0% | 86.5% |
| With SLIC Superpixel | 81.3% | 83.3% | 79.3% | 84.5% | 90.5% |

Table 7.

Average MIoU of each class over the five parameter groups.

| Areas | CS | EM | LA | OS | SH |
|---|---|---|---|---|---|
| Without SLIC Superpixel | 94.9% | 68.2% | 83.8% | 87.8% | 32.9% |
| With SLIC Superpixel | 95.9% | 90.1% | 90.3% | 94.1% | 48.6% |
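
Tables 6 and 7 appear to be plain averages of Table 5: Table 6 averages each row over the five classes, and Table 7 averages each column over the five parameter groups. The sketch below recomputes both from the Table 5 values; the results agree with the reported figures to within about 0.1 percentage points.

```python
# Per-class values (%) from Table 5, in the order CS, EM, LA, OS, SH.
WITHOUT_SLIC = {
    "H/A/Alpha": [94.0, 70.8, 84.2, 85.7, 10.5],
    "H/A/SERD/Alpha": [94.5, 80.7, 84.9, 88.3, 11.7],
    "Scattering coefficients": [94.1, 27.3, 82.3, 80.2, 6.8],
    "Freeman": [95.8, 80.7, 82.4, 90.6, 60.7],
    "Yamaguchi": [96.1, 81.8, 85.4, 94.0, 75.0],
}
WITH_SLIC = {
    "H/A/Alpha": [95.6, 88.3, 89.7, 93.4, 39.7],
    "H/A/SERD/Alpha": [95.8, 91.0, 91.7, 95.1, 43.1],
    "Scattering coefficients": [94.7, 85.8, 84.2, 90.1, 41.5],
    "Freeman": [96.3, 91.1, 91.5, 95.1, 48.3],
    "Yamaguchi": [96.9, 94.1, 94.6, 96.8, 70.2],
}


def group_totals(table):
    """Row means: one total MIoU per parameter group (compare Table 6)."""
    return {group: sum(vals) / len(vals) for group, vals in table.items()}


def class_averages(table):
    """Column means: one average per class over the groups (compare Table 7)."""
    rows = list(table.values())
    return [sum(col) / len(rows) for col in zip(*rows)]


if __name__ == "__main__":
    for name, table in (("without SLIC", WITHOUT_SLIC), ("with SLIC", WITH_SLIC)):
        totals = {g: round(v, 2) for g, v in group_totals(table).items()}
        print(name, totals, [round(v, 2) for v in class_averages(table)])
```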

Table 8.

Total MIoU of the Yamaguchi parameters combined with different numbers of SLIC superpixels.

| Superpixels | 150 | 200 | 250 | 300 | 350 | 400 |
|---|---|---|---|---|---|---|
| Total MIoU | 87.1% | 88.5% | 91.0% | 90.3% | 90.5% | 86.6% |

Table 9.

Calculation time of each process (seconds).

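| Process | Image 1 | Image 2 | Image 3 |
|---|---|---|---|
| Pixel Number in Experiments | | | |
| Computer Configuration | i5-8250U CPU, 8 GB | i5-8250U CPU, 8 GB | i5-8250U CPU, 8 GB |
| Superpixel Segmentation (s) | 1742 | 576 | 482 |
| CNN Classification with SLIC (s): H/A/Alpha | 1594 | 135 | 243 |
| CNN Classification with SLIC (s): H/A/Alpha/SERD | 2107 | 186 | 359 |
| CNN Classification with SLIC (s): Scattering Coefficients | 1023 | 102 | 159 |
| CNN Classification with SLIC (s): Freeman | 1602 | 133 | 245 |
| CNN Classification with SLIC (s): Yamaguchi | 2115 | 192 | 361 |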

Table 10.

Memory usage of the CNN.

| Parameters | Without SLIC | With SLIC |
|---|---|---|
| H/A/Alpha | 59.2 M | 91.3 M |
| H/A/Alpha/SERD | 91.3 M | 130 M |
| Scattering Coefficients | 33.9 M | 59.2 M |
| Freeman | 59.2 M | 91.3 M |
| Yamaguchi | 91.3 M | 130 M |