Hey Alexa, play music!

PANEL: Smart Speakers - Changing the face of Music Consumption

Pivotal Music Conference, Birmingham - 27th September 2019

What are Smart Speakers?

A smart speaker is typically a wireless speaker and voice command device. It lets users give spoken commands to virtual assistants, activated by 'wake words' such as "Hey Siri", "OK Google" or "Hey Google", "Alexa" and "Hey Cortana". Prices start from as little as £30. A smart speaker with a touchscreen is known as a smart display. Whilst it's still early days, algorithms that profile your behaviour from social networks and other platforms linked to the device can influence how results are prioritised. It is painfully apparent that getting the most personalised service from such a device, which is so reliant on machine learning, AI and algorithmic decision making, depends heavily on a good data supply and on how much data you give these services about your profile. As a commercial business area this is often referred to as 'Voice'.

Voice - Market Leader Amazon

At Pivotal, I was joined by Lewis Newson (Digital Marketing, Cooking Vinyl), representing the supply of music from rights holders; Michael Cassidy (Chief Innovation Officer, FUGA), as the connector to digital service providers (DSPs) such as Amazon; and Ivy Taylor (Voice Product Manager), representing Amazon Music UK.

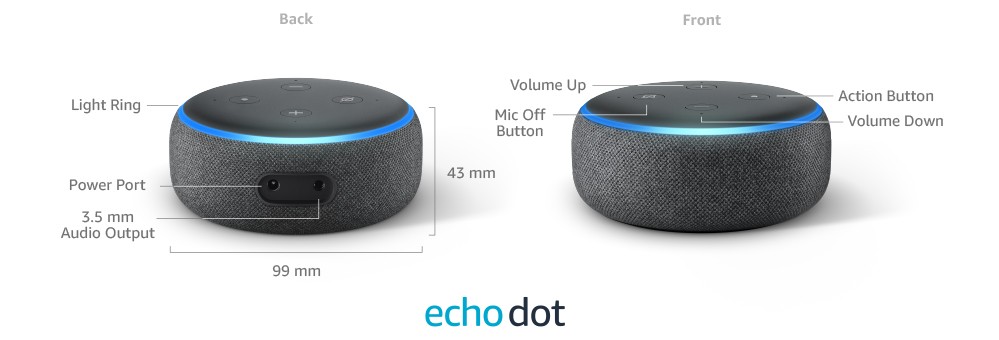

Earlier this year the BPI, the British music industry body, issued a report into smart speakers, written by Stuart Dredge of Music Ally on behalf of the BPI and ERA. In 2019 the US reached a total of 76 million smart speakers, 6 million of which were sold over the summer. Despite Apple's aggressive price cut on the HomePod, Amazon remains the market leader: 70% of all units are Amazon Echo devices.

Last week, 30 technology companies including Microsoft, Spotify and Sonos signed up to an initiative intended to enable seamless and secure integration of multiple voice services on a single product. Notably, competitors Google and Apple did not join. Spotify's Chief R&D Officer, Gustav Söderström, said in the press release: “We are excited to join the Voice Interoperability Initiative, which will give our listeners a more seamless experience across whichever voice assistant they choose...”, demonstrating the significant potential that subscription platforms expect from Voice products.

CASUAL LISTENING

34% of Echo and Google Home owners spend 4+ hours a day listening to music, vs. 24% of the general population. 39% of smart speaker owners say the time they spend listening to their smart speaker is replacing time spent with AM/FM radio (source: BPI). 48% of smart speaker owners have a premium subscription to a music-streaming service.

What was clear from the BPI report and the Pivotal smart speaker panel is that current smart speaker listening behaviour is typically casual or 'lean back'. Whilst users may be spending hours listening, typical requests are broad and disengaged. They're not scrutinising what they are listening to; it's background music. Users do not use voice for sophisticated or deep catalogue music searches. The implication is that, because this behaviour could increase reliance on subscription playlists and replace radio, artists and labels will increasingly need to pitch music to broad-brush, mood-based playlists such as a 'Sleep' playlist on platforms like Spotify and Amazon Prime.

Panellist Ivy Taylor began working for Amazon as a Digital Music Content Manager, curating playlists for the launch of Prime Music and owning the content strategy of Stations. Prior to Amazon, Ivy worked as a music consultant, creating in-store audio strategies and bespoke playlists for brands, and supervising music for marketing campaigns. In her current role she works to improve the music experience on Alexa and has launched new Voice features such as ‘Activities’, ‘Play More Like This’ and ‘Music Alarms’. Ivy seemed open to receiving music suggestions from labels and gave no hint of particular agendas or favoured genres. Instead she gave some further insight into changes in user behaviour. Amazon monitor the frequency and style of requests to better understand how users interact with the devices. This has revealed that users are becoming more conversational with Amazon's virtual assistant, Alexa, and that requests are becoming more mood- or era-based.

What struck me (as a voice cynic) is that it could be useful to be able to hum a tune or sing part of a lyric to the assistant when you can't remember the song title, something that feels so human. Rather than relying on narrow, convoluted traditional routes to radio, rights holders willing to enrich their data could see a win here: more niche music that doesn't get general radio play anyway could capitalise on the success it has already found on Spotify.

ENGAGED LISTENING

Ivy explained a little more about the Amazon features for those who want to engage with specific artists. Amazon Skills group interviews and catalogue by a single artist together. Through Amazon Follow, an artist may be invited to share a personal message about an upcoming release; the message goes out in e-mail notifications and is available on the artist's Follow Updates timeline. If you are invited to share and choose not to participate, Amazon may still promote or market your upcoming release to your followers. Given that this is invitation-only (presumably at beta stage), it still feels as if there is too much potential for key players to capitalise on the goodwill of individuals at Amazon, much like radio plugging.

EMERGING MARKETS

To scout out opportunities it's useful to know where voice is growing globally. Voice makes absolute sense for people who find keyboards inhibit their communication because their language is not easily typed on a keyboard. Lewis Newson flagged that there has been some interesting learning in India. In 2018 a survey showed that 82% of Indian respondents used voice-activated technology. Umesh Sachdev, co-founder of Uniphore, a natural language processing startup, says, “Voice is quicker compared to typing text: 150 words spoken compared to 40 words typed per minute.” For those who frequently seek help from strangers at post offices and ATMs to fill in forms or withdraw money, voice is becoming a game changer. This opens up a new market of people who don't feel confident typing and can instead speak their music choices.

PROBLEMS WITH PRIORITISATION AND BIAS

Regulation on prioritisation and data has now been tested in court, and the issue is going to test the wider politics of machine learning and AI. Country singer Martina McBride spoke out in September 2019 about the gender bias she found in songs recommended by Spotify.

In a separate interview with Billboard, McBride describes her meeting with Spotify. “I felt from the meeting that it was a lack of awareness that they weren't even aware that happens when you type in country music and start a playlist. It's an algorithm, of course... That’s what really upset me the most. I felt like we'd been erased. I felt like the entire female gender and voice had been erased. It just broke my heart.”

SUPPLY CHAIN

What if you, as a rights holder, want Alexa to prioritise the Trentemøller extended remix? What if you can't stand extended edits and only want the radio edits? And what happens if your favourite version of a song is not prioritised? Where different artists have songs with the same title, or a song exists in several cover versions, how will Alexa know which one you mean?

Similar questions from the audience at the Pivotal panel highlighted a key concern for independent music, on which the panel could not offer any clear advice. This is likely to be a key area for services such as FUGA to address, making sure that the data delivered to DSPs improves the quality of search results.

We're a long way from asking Alexa for specific remixes, versions or edits. But the winners of Voice may well be subscription services, so it's down to users to sharpen up their profiles and to music suppliers to enrich their data with mood, lyrics and other criteria. The metadata supply chain is typically driven by labels and the recording rights community using the DDEX (Digital Data Exchange) data standard. FUGA is one of the companies that do this for music makers; others include Feedmusic, TuneCore, Beryl Media, ReverbNation, Syntax Creative, The Orchard, INgrooves, Revelator, X5 and CD Baby. Michael Cassidy works with a team of engineers and product owners, developing new platform services for FUGA clients, and is at the forefront of advancing FUGA’s best-in-breed technology. He is particularly interested in how new technologies including artificial intelligence, speech recognition, blockchain and VR are changing the way the music industry connects creators with listeners.

Michael was keen to flag that to put music on Apple Music, suppliers must include lyrics in order to enable searches. He acknowledged that it is difficult to get enriched data from music suppliers, but that enriched data is what will give them wins in voice. DDEX is introducing a new standard, MEAD (Media Enrichment and Description), which is big news as it sets standards for richer media data that will make songs easier to match to requests. Significantly, this includes “focus tracks”, which are crucial for voice-activated services (i.e. which sound recording or video should be played when a consumer asks for, say, “the latest George Ezra track”, which may well differ from Ezra’s most recently released song because of advertising campaigns or other events). This will put pressure on labels with large rosters to administer these extra values, but without this effort those songs are going to get lost.
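To make the idea of a focus track more concrete, here is a minimal Python sketch of how a voice service might resolve a request like "the latest George Ezra track". It assumes a hypothetical, heavily simplified record with an `is_focus_track` flag; the `Recording` class, its fields and `resolve_latest_track` are illustrative names, not part of the DDEX or MEAD schemas, and real DSPs will use their own, far richer logic.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Recording:
    # Hypothetical, simplified catalogue record (not the DDEX/MEAD schema).
    artist: str
    title: str
    release_date: date
    is_focus_track: bool = False  # assumed supplier-set flag


def resolve_latest_track(catalogue: list[Recording], artist: str) -> Optional[Recording]:
    """Pick what to play for 'the latest <artist> track'.

    A supplier-flagged focus track wins; otherwise fall back to the
    most recently released recording by that artist.
    """
    by_artist = [r for r in catalogue if r.artist.casefold() == artist.casefold()]
    if not by_artist:
        return None  # nothing by this artist in the catalogue
    focus = [r for r in by_artist if r.is_focus_track]
    # Prefer flagged focus tracks; among candidates, take the newest.
    pool = focus if focus else by_artist
    return max(pool, key=lambda r: r.release_date)
```

If a supplier flags an older single as the focus track (say, because it is being pushed in an ad campaign), the sketch returns it ahead of a newer release; if nothing is flagged, it can only fall back to release date, which is exactly the gap enriched metadata is meant to close.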

THE INVISIBLES

On Friday, Amazon launched a series of new products. Some of us find having a listening device in our living room, or in our pocket via our mobile, hugely sinister, with serious security implications. However, as we become accustomed to talking to our devices, it's clear that voice has huge potential to make hands-free living easier: when you're feeding a baby or nursing it to sleep, when you want to avoid touching an oven with hands covered in raw meat, or when you're using music in sports training. Among the launches were beta, invite-only hardware devices that build Alexa receivers into everyday objects. These 'invisibles' include Alexa-enabled glasses, a ring and even a combination convection oven, microwave, air fryer and food warmer. As Ivy flagged to the audience, the most immediate advance is Amazon Echo Buds, but for those of us interested in advances in voice and audio-visual media there are interesting product launches all the time, particularly in conferencing, where Facebook has a product called Portal.

CONCLUSIONS

It's still early days and, inevitably, once people get used to talking to voice recognition services their expectations will become more sophisticated. I'm curious about the potential to own a set of conversational criteria. Could a brand or sponsor partner with a music supplier to own 'cheer me up'?

It's clear that people are using and adopting voice and that this is not a fad. It will change consumer behaviour and put pressure on brands and advertisers to find new ways of exploiting this medium, hopefully to the benefit of music. However, it's going to be a long time before metadata supply chain theories become sufficiently mainstream to enable a reliable flow of enriched data. My hope is that sync teams will be able to work more closely with the data supply chain, sharing the enriched data that we collect day to day to sell music into sync spaces.

This has been core to my business strategy at Domino, and it finally feels as if cross-departmental conversations are opening up. If the sync team can feed song history into the data flowing to DSPs, it will be possible for Voice users to say 'Alexa, play the theme song to Peaky Blinders' or 'Alexa, play the song on the (insert brand) ad'. It will be interesting to see how that becomes part of the negotiation for sync deals.

It's important that every music company pools the data that fleshes out the relevance of its music to mood and reflects the conversations people are having with their virtual assistants. Voice assistants follow you round your home, when you're walking the dog, when you're driving to the supermarket... The potential synergy between smart tools and your own personal data could benefit music by finally mapping out true data about how people use music in different spaces, and by creating more personalised experiences that deliver a more authentic interplay on a personal level, which could bring long-term gains for artists.

Ever the optimist, my hope is that once people begin to rely more on these interfaces, it might be possible to interrupt their normal behaviour and lead them to alternatives they may never have considered but that suit their profile, based on a more sophisticated supply chain of enriched music data and an understanding of psychology. The bit that will get interesting is when AI is able to mimic serendipity.

For the time being, we're still a little bit stuck at 'Hey Alexa, play music'.

CEO of Music For Sport

Nice article Lynden. But I'm wondering if anybody is interested in the quality of the audio. It's pretty awful from these devices from an audiophile's point of view: highly compressed files and small speakers. Who has the interests at heart of those of us who want to hear music as the producers and composers intended it?