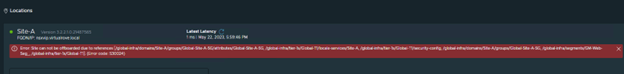

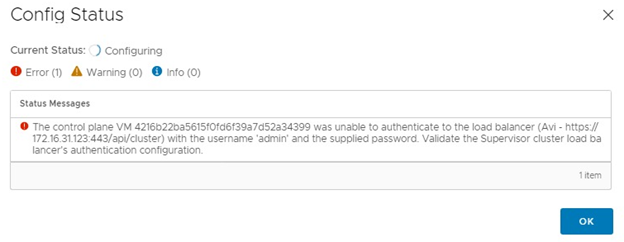

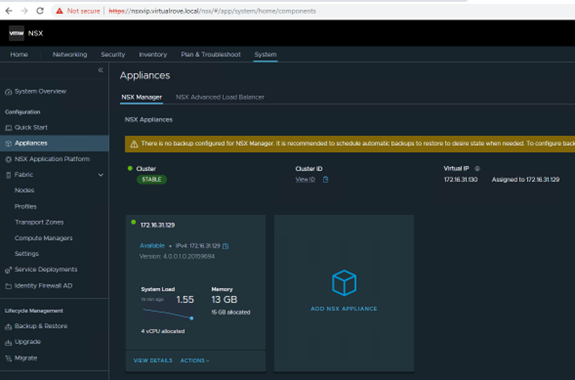

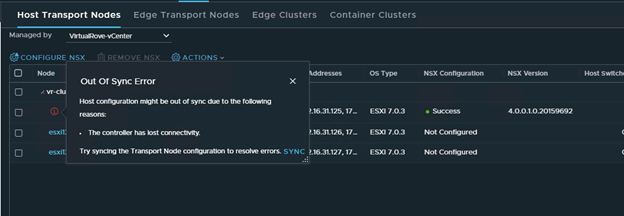

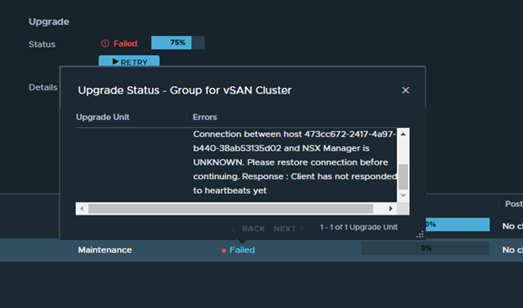

I got the following error while upgrading NSX from v3.2.2 to v4.1.

Pre-upgrade checks failed for HOST: Connection between host 473cc672-2417-4a97-b440-38ab53135d02 and NSX Controller is UNKNOWN. Response : [Lcom.vmware.nsxapi.fabricnode.dto.ControlConnStatusDto;@edbaf5b Connection between host 473cc672-2417-4a97-b440-38ab53135d02 and NSX Manager is UNKNOWN. Please restore connection before continuing. Response : Client has not responded to heartbeats yet

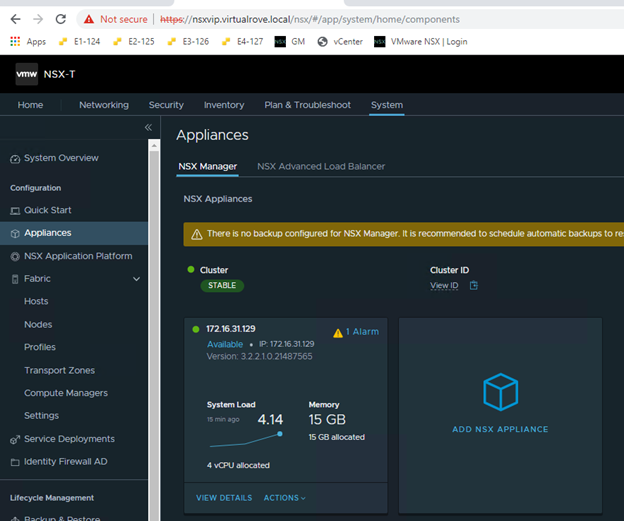

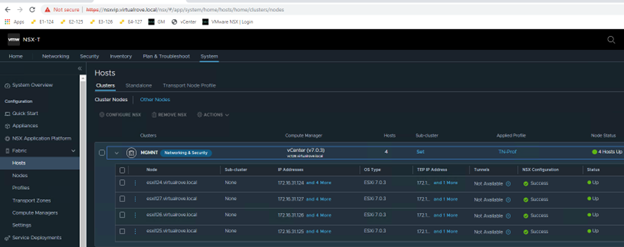

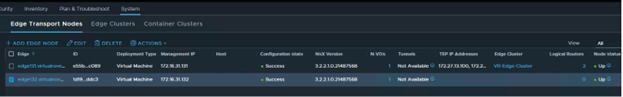

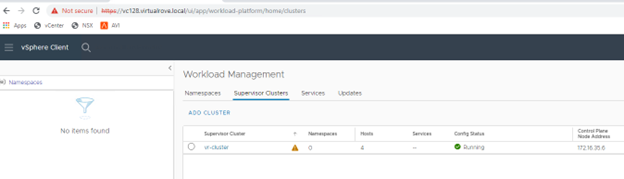

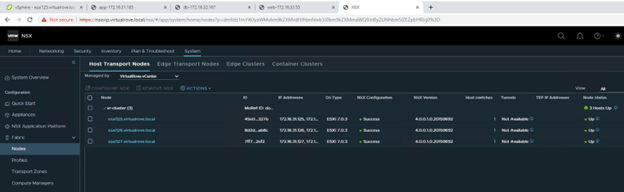

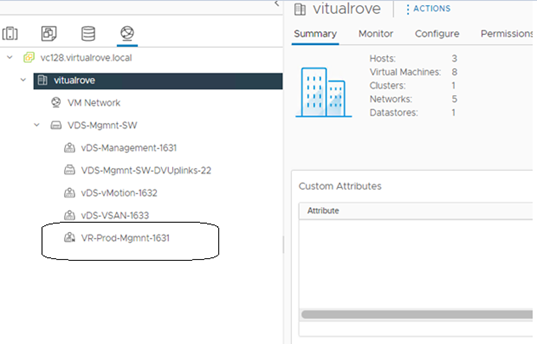

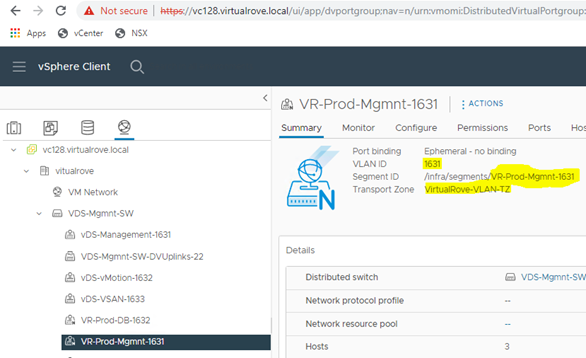

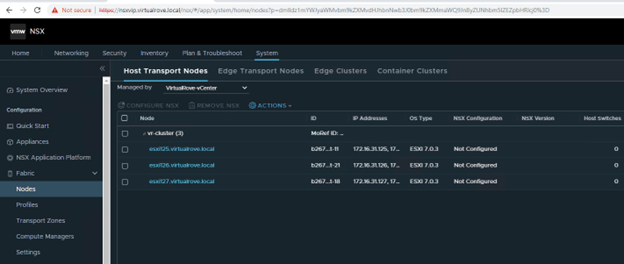

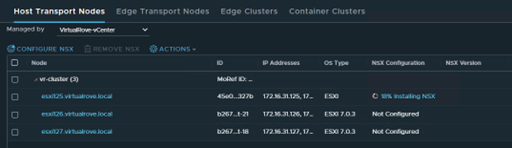

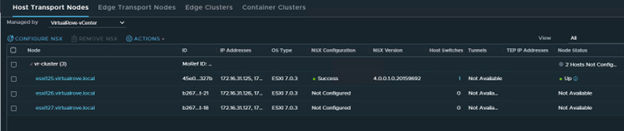

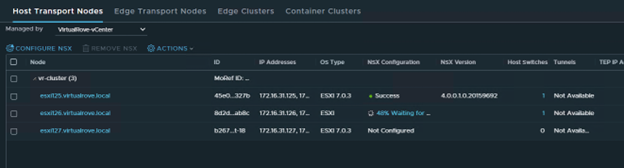

We only have 3 hosts in the cluster. For some reason, the host groups were showing a 4th host, “esxi164”, which does not exist in the vCenter inventory.

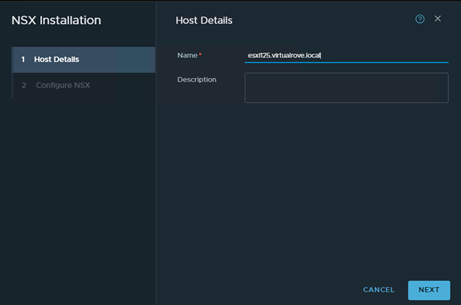

Click on the host group to check the details.

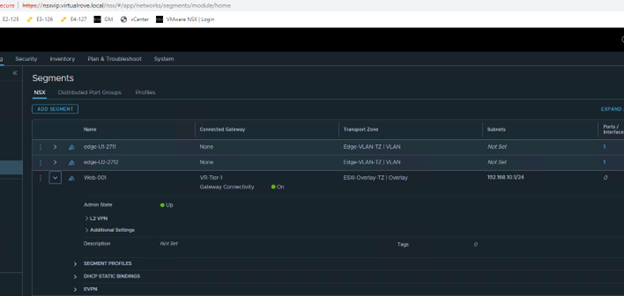

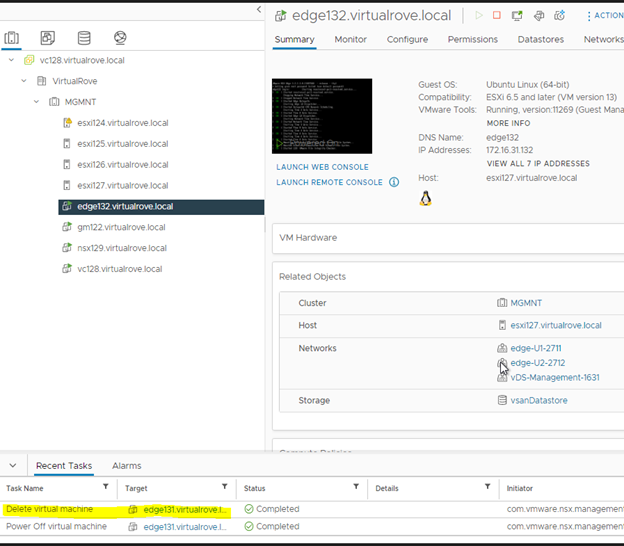

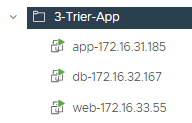

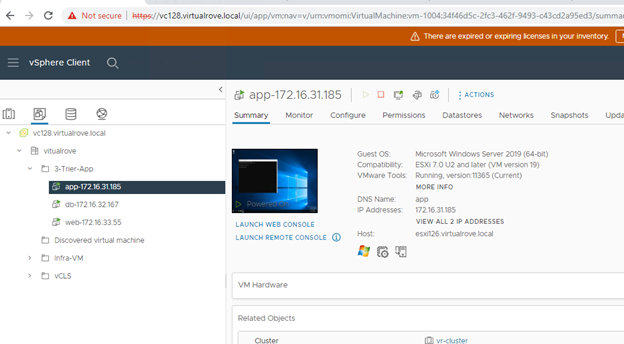

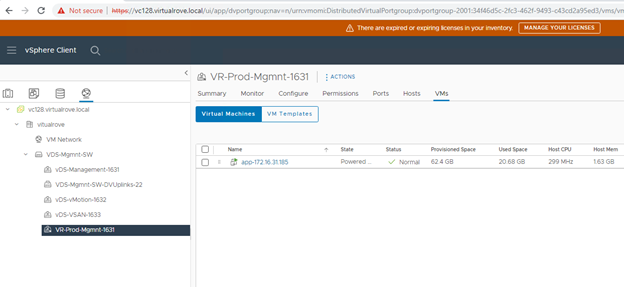

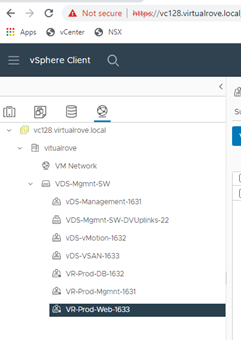

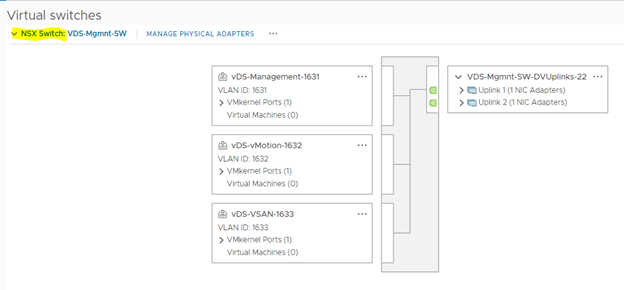

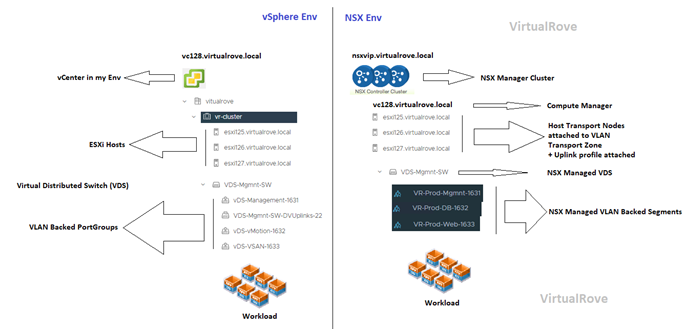

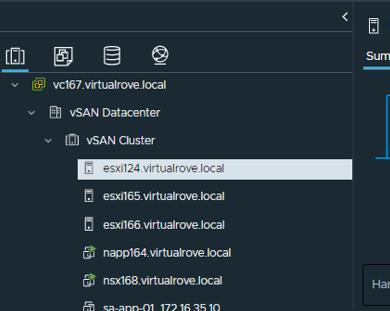

Here is my vCenter inventory:

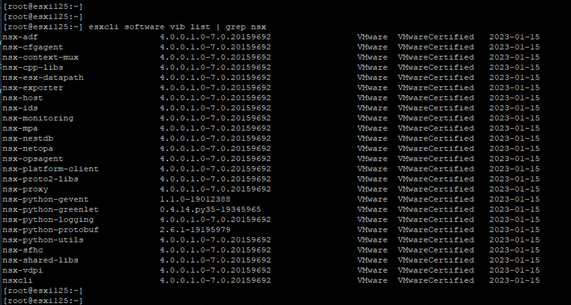

The host in question (esxi164.virtualrove.local) was one of the old hosts in the cluster. It was removed from the cluster a long time ago. However, it is somehow still showing up in the NSX upgrade inventory.

And as the error message says, NSX Manager was unable to locate this host to upgrade it.

“Connection between host 473cc672-2417-4a97-b440-38ab53135d02 and NSX Manager is UNKNOWN.”

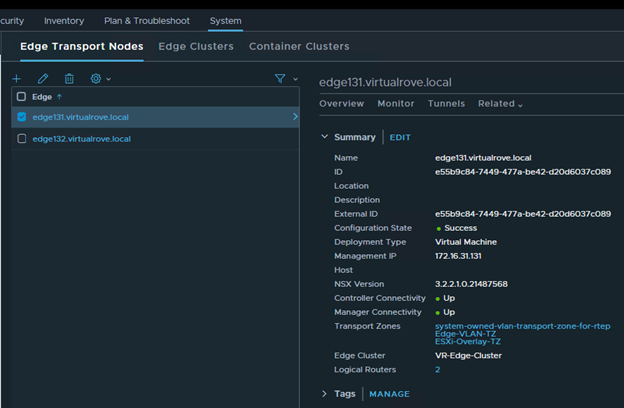

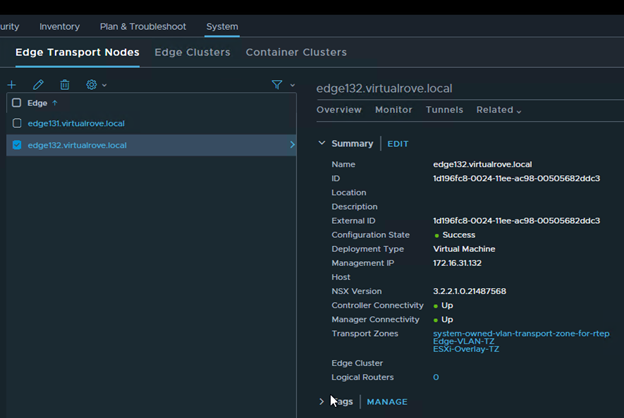

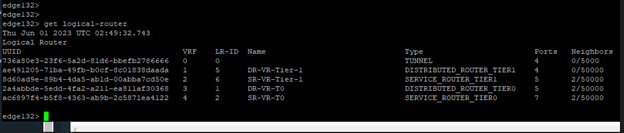

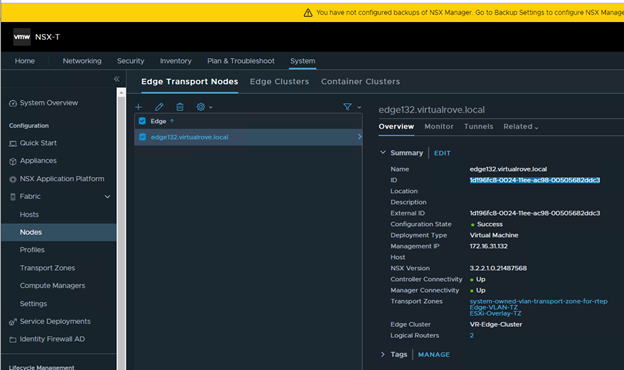

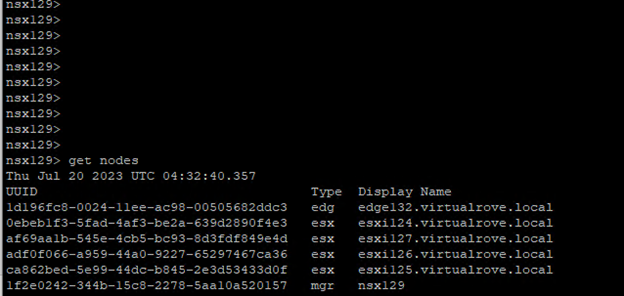

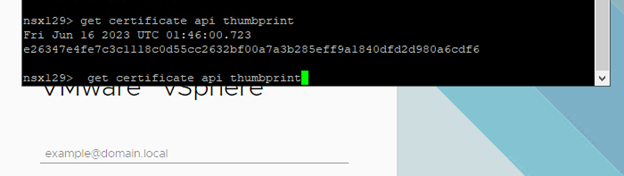

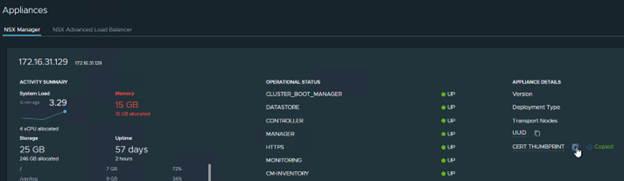

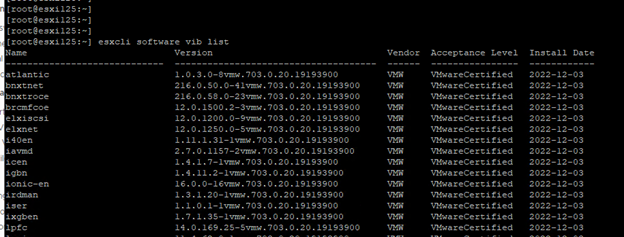

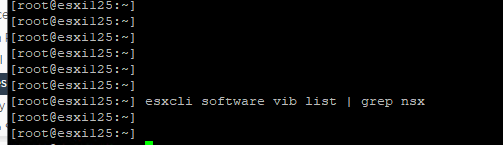

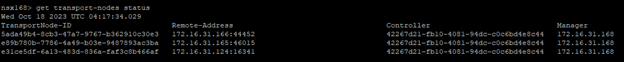

The UUID mentioned in the error message had to belong to the missing host (esxi164.virtualrove.local), because it did not match the UUID of any host transport node in the cluster. You can run the following command on one of the NSX Managers to get the UUIDs of the nodes.

get transport-nodes status

Or you can click on the transport node (TN) in the NSX UI to check its UUID.
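If you have several nodes to compare, the matching can also be done with a few lines of Python. This is only a minimal sketch: it pulls the UUID out of the pre-upgrade error text and reports any UUID that is not in a list you supply. The two entries in `known_uuids` are made-up placeholders, not values from my environment; replace them with the UUIDs you collected from the CLI or the UI.

```python
import re

# Matches the canonical 8-4-4-4-12 hexadecimal UUID layout.
UUID_PATTERN = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def uuids_in_message(message):
    """Return the distinct UUIDs found in a message, in order of appearance."""
    seen = []
    for match in UUID_PATTERN.findall(message):
        uuid = match.lower()
        if uuid not in seen:
            seen.append(uuid)
    return seen


def unknown_uuids(message, known_uuids):
    """Return UUIDs from the message that are not in the known list."""
    known = {u.lower() for u in known_uuids}
    return [u for u in uuids_in_message(message) if u not in known]


# Error text as reported by the pre-upgrade check (shortened).
error = (
    "Pre-upgrade checks failed for HOST: Connection between host "
    "473cc672-2417-4a97-b440-38ab53135d02 and NSX Controller is UNKNOWN. "
    "Connection between host 473cc672-2417-4a97-b440-38ab53135d02 "
    "and NSX Manager is UNKNOWN."
)

# Placeholder UUIDs (hypothetical), standing in for the real transport nodes.
known_uuids = [
    "11111111-1111-1111-1111-111111111111",
    "22222222-2222-2222-2222-222222222222",
]

print(unknown_uuids(error, known_uuids))
```

Any UUID this prints is one that the error refers to but that none of your listed transport nodes owns, which is what pointed me to the stale host.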

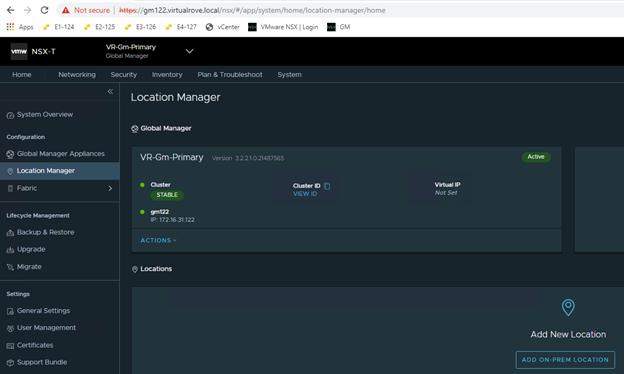

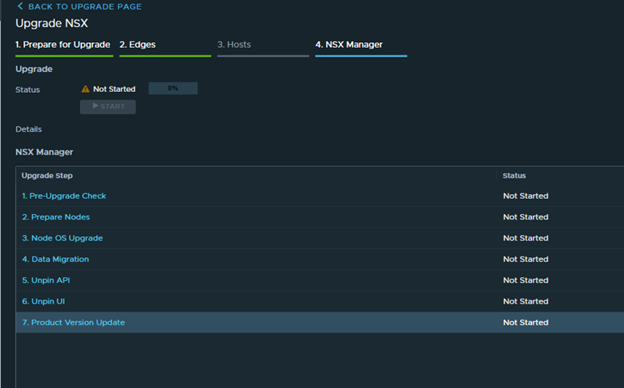

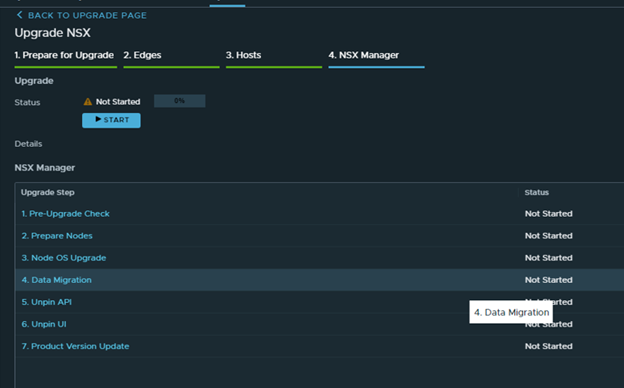

If you click Next on the upgrade page, it will not let you proceed to upgrade the NSX Managers.

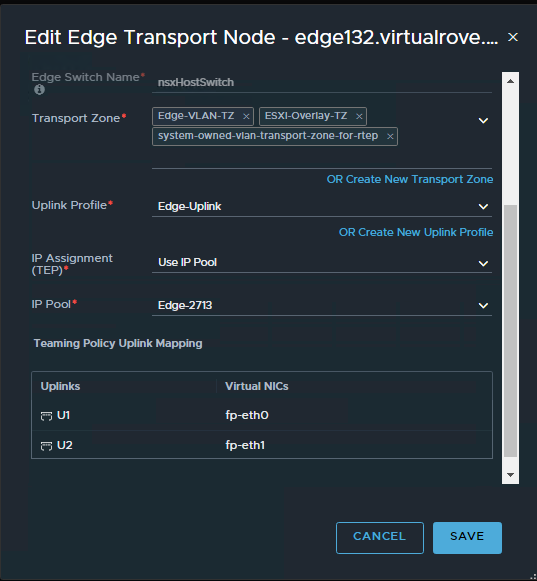

So, the likely cause of this issue is that the old host entry still exists somewhere in the NSX inventory, and NSX is trying to locate that host to upgrade it.

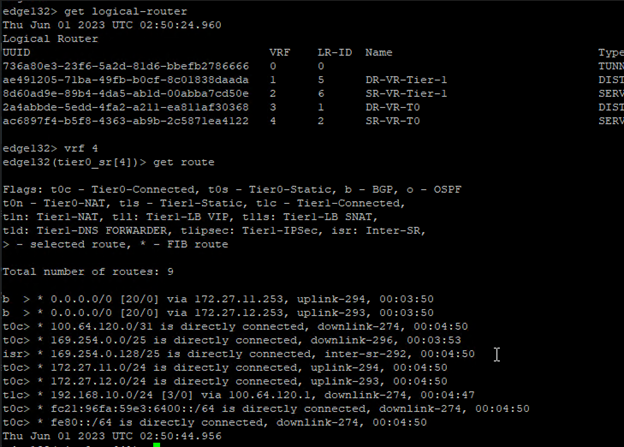

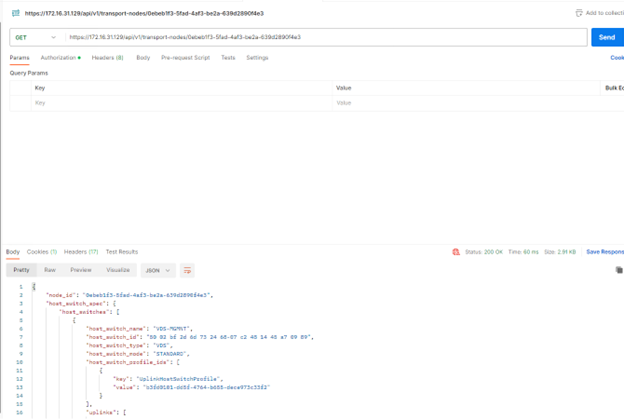

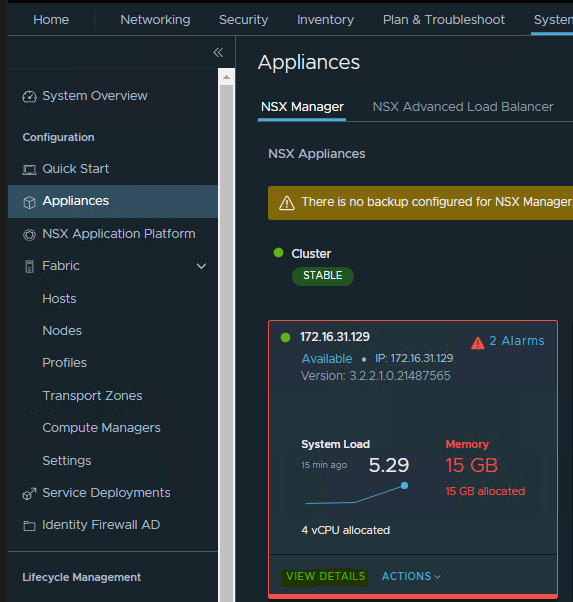

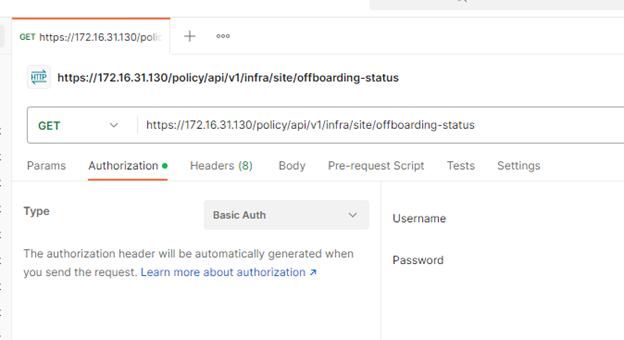

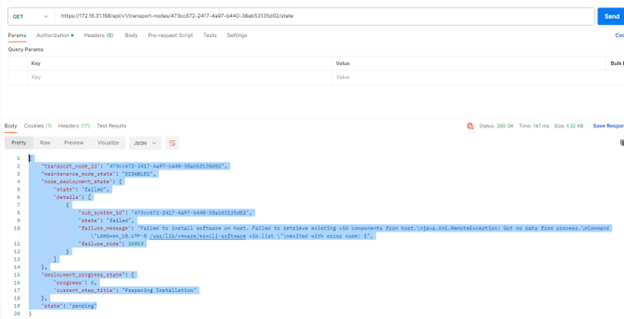

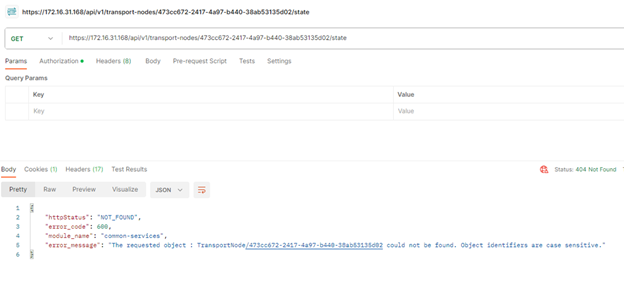

There is an API call to check the state of the host.

GET https://{{MPIP}}/api/v1/transport-nodes/<Transport-Node-UUID>/state

Replace MPIP (the NSX Manager IP) and the transport node UUID to match your environment.

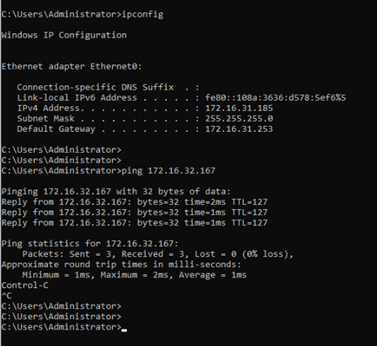

GET https://172.16.31.168/api/v1/transport-nodes/473cc672-2417-4a97-b440-38ab53135d02/state
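I used an API client for this call, but the same request can be scripted. Below is a rough Python sketch using only the standard library. It assumes basic authentication against the NSX Manager API; the function names and the `admin` / `example-password` credentials are placeholders I made up for illustration. The `verify_tls=False` option is there only because lab managers often use self-signed certificates, so do not use it in production.

```python
import base64
import json
import ssl
import urllib.request


def state_url(manager_ip, node_uuid):
    """Build the transport node state URL used in this post."""
    return f"https://{manager_ip}/api/v1/transport-nodes/{node_uuid}/state"


def build_state_request(manager_ip, node_uuid, username, password):
    """Prepare (but do not send) the GET request with a basic auth header."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        state_url(manager_ip, node_uuid),
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


def fetch_state(request, verify_tls=True):
    """Send the request and return the parsed JSON body."""
    context = ssl.create_default_context()
    if not verify_tls:
        # Lab-only shortcut for self-signed certificates (assumption).
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return json.load(response)


# Placeholder credentials (hypothetical); nothing is sent until fetch_state runs.
request = build_state_request(
    "172.16.31.168",
    "473cc672-2417-4a97-b440-38ab53135d02",
    "admin",
    "example-password",
)
print(request.get_method(), request.full_url)
```

Calling `fetch_state(request, verify_tls=False)` from a machine that can reach the manager should return the same JSON that the API client shows.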

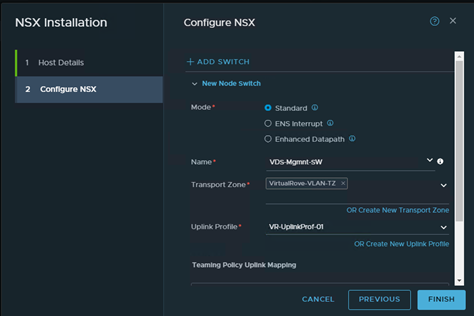

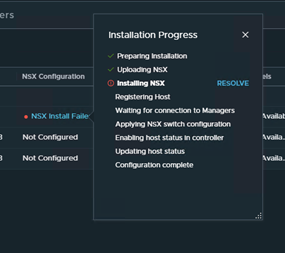

As we can see from the output, the host is stuck at “current_step_title: Preparing Installation”. It looks like something went wrong while the host was being removed from the NSX environment, and its state is still marked as “state: pending” in the NSX Manager database.

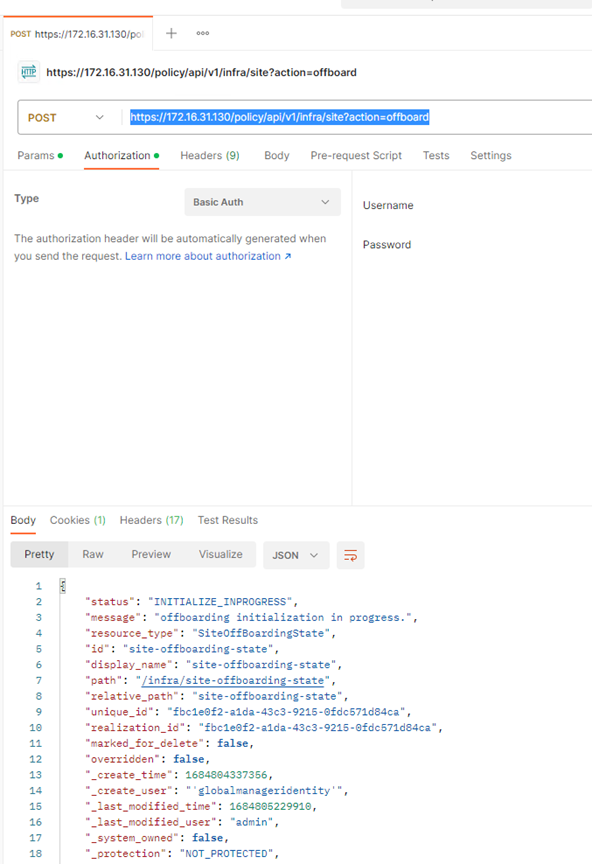

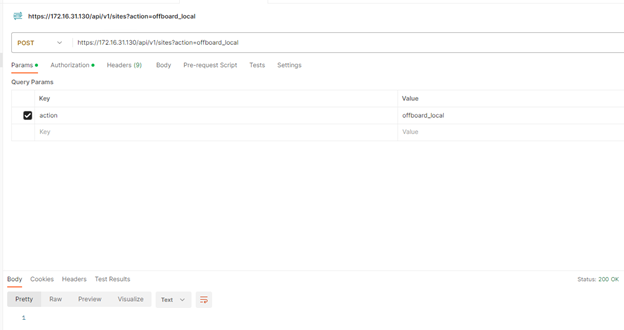

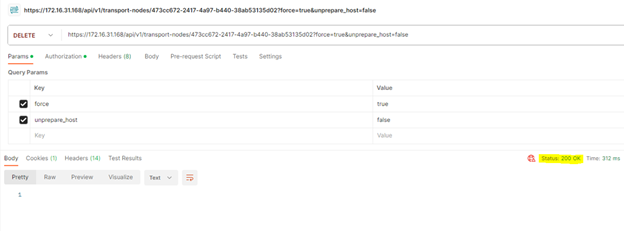

Let's delete the host entry with an API call:

DELETE https://172.16.31.168/api/v1/transport-nodes/473cc672-2417-4a97-b440-38ab53135d02?force=true&unprepare_host=false

Status: 200 OK
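For anyone who prefers to script the cleanup, here is a hedged Python sketch of the same DELETE call. It only reproduces the URL and the two query parameters shown above; the function names and the `auth_header` value are my own placeholders. Double-check the UUID before sending anything, because the call removes the transport node entry from NSX.

```python
import urllib.parse
import urllib.request


def build_delete_request(manager_ip, node_uuid, auth_header):
    """Prepare (but do not send) the forced delete of a transport node entry."""
    # Same query string as the call in this post: force the removal and
    # do not try to unprepare a host that no longer exists.
    query = urllib.parse.urlencode({"force": "true", "unprepare_host": "false"})
    url = f"https://{manager_ip}/api/v1/transport-nodes/{node_uuid}?{query}"
    return urllib.request.Request(
        url,
        headers={"Authorization": auth_header},
        method="DELETE",
    )


def send(request, context=None):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, context=context) as response:
        return response.status


# "Basic <token>" is a placeholder; build a real header from your credentials.
delete_request = build_delete_request(
    "172.16.31.168",
    "473cc672-2417-4a97-b440-38ab53135d02",
    "Basic <token>",
)
print(delete_request.get_method(), delete_request.full_url)
```

Printing the request first is deliberate: it lets you confirm the target UUID before `send(delete_request)` is ever called.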

Run the GET API call again to confirm:

It no longer returns any information.
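If you script the cleanup, the confirmation step can be automated too. The sketch below treats either an empty body or an HTTP 404 as "the entry is gone". The 404 behaviour is an assumption on my part (I only saw that no information was returned), and `node_is_gone` and `fake_not_found` are hypothetical names used for illustration. The `fetch` argument is any zero-argument callable that performs the GET and returns the parsed JSON.

```python
import io
import urllib.error


def node_is_gone(fetch):
    """Return True when the state lookup yields nothing for the node.

    Assumption: a deleted node answers with HTTP 404 or an empty body.
    Any other HTTP error is re-raised so that it is not mistaken for success.
    """
    try:
        body = fetch()
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return True
        raise
    return not body


def fake_not_found():
    """Stand-in for a real GET, used only to demonstrate the 404 path."""
    raise urllib.error.HTTPError(
        "https://nsx.example.invalid/api/v1/transport-nodes/x/state",
        404,
        "Not Found",
        None,
        io.BytesIO(),
    )


print(node_is_gone(fake_not_found))
print(node_is_gone(lambda: {"state": "pending"}))
```

In real use you would pass a callable that wraps your own GET request in place of `fake_not_found`.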

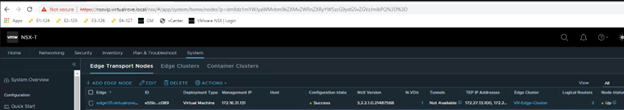

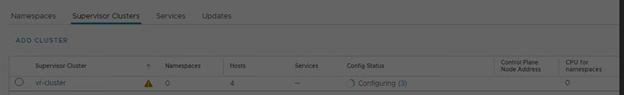

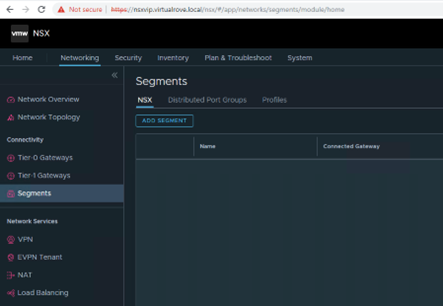

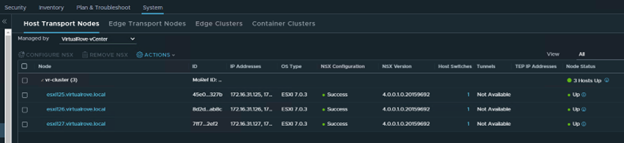

Time to check the upgrade console in NSX.

The group which was showing 1 host with an error no longer exists.

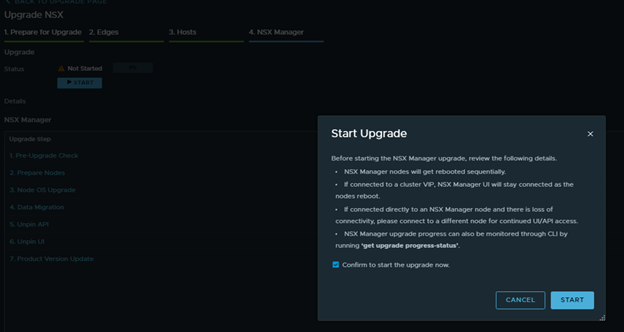

I was able to get to the next step to upgrade NSX managers.

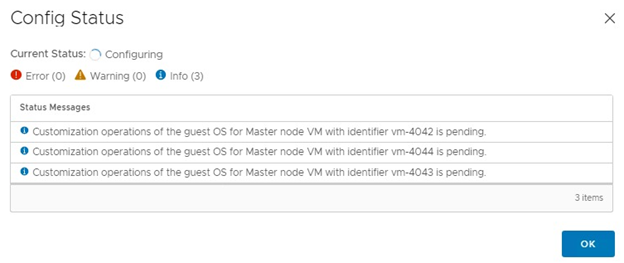

Confirm and start.

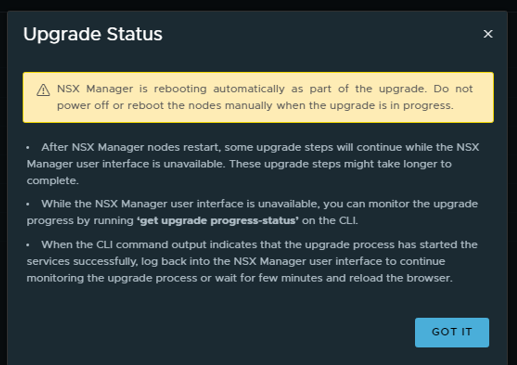

Upgrade status.

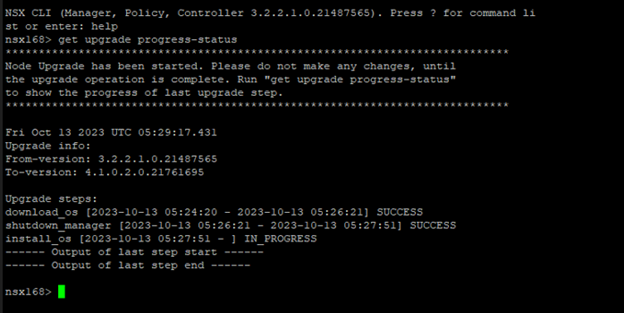

As stated in the message above, I ran “get upgrade progress-status” in the CLI.

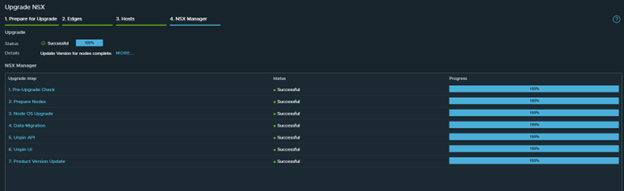

The NSX upgrade to v4.1 completed successfully.

That’s all for this blog. I hope the information here is helpful. See you in the next blog post. Thank you for visiting.
