The TransAsia Airways Flight GE235 accident, in which the pilot flying, confused after an uncommanded auto-feather of the No. 2 engine, reduced power on the operative No. 1 engine, brings back an old danger we thought had been overcome.

Photo © Michael Karch. ATR 72-212A (500)

During its investigation, the Aviation Safety Council (ASC) of Taipei, Taiwan, reviewed the available literature and studies on Propulsion System Malfunction and Inappropriate Crew Response (PSM+ICR) and published this review in its final report.

Propulsion System Malfunction and Inappropriate Crew Response (1)

1. Overview of PSM+ICR Study

Following an accident in the U.S. in December 1994 (Note from the blogger: American Eagle Flight 3379, uncontrolled collision with terrain, Morrisville, North Carolina, December 13th, 1994), the U.S. Federal Aviation Administration (FAA) requested the Aerospace Industries Association (AIA) to conduct a review of serious incidents and accidents that involved an engine failure or perceived engine failure and an ‘inappropriate’ crew response. The AIA conducted the review in association with the European Association of Aerospace Industries (AECMA) and produced its report in November 1998. (Note from the blogger: This report is included in a Flight Safety Foundation digest that contains multiple reports. The subject report concludes on page 193 of the linked document: Sallee, G. P. & Gibbons, D. M. (1999). Propulsion system malfunction plus inappropriate crew response (PSM + ICR). Flight Safety Digest, 18(11-12), 1-193.)

The review examined all accidents and serious incidents worldwide which involved ‘Propulsion System Malfunction + Inappropriate Crew Response (PSM+ICR)’. Those events were defined as ‘where the pilot(s) did not appropriately handle a single benign engine or propulsion system malfunction’. Inappropriate responses included incorrect response, lack of response, or unexpected and unanticipated response. The review focused on events involving western-built commercial turbofan and turboprop aircraft in the transport category. The review conclusions included the following:

- The rate of occurrences per airplane departure for PSM+ICR accidents had remained essentially constant for many years. Those types of accidents were still occurring despite the significant improvement in propulsion system reliability over the preceding 20 years, suggesting that the rate of inappropriate crew response to propulsion system malfunctions had increased.

- As of 1998, the number of accidents involving PSM+ICR was about three per year in revenue service flights, with an additional two per year associated with flight crew training involving simulated engine-out conditions.

- Although the vast majority of propulsion system malfunctions were recognized and handled appropriately, there was sufficient evidence to suggest that many pilots have difficulty identifying certain propulsion system malfunctions and reacting appropriately.

- With specific reference to turboprop aircraft, pilots were failing to properly control the airplane after a propulsion system malfunction that should have been within their capabilities to handle.

- The research team was unable to find any adequate training materials on the subject of modern propulsion system malfunction recognition.

- There were no existing regulatory requirements to train pilots on propulsion system malfunction recognition.

- While current training programs concentrated appropriately on pilot handling of engine failure (single engine loss of thrust and resulting thrust asymmetry) at the most critical point in flight, they did not address the malfunction characteristics (auditory and visual cues) most likely to result in an inappropriate crew response.

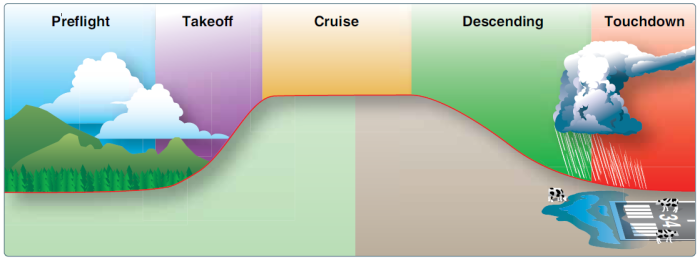

Turboprop Aircraft

Of the 75 turboprop occurrences with sufficient data for analysis, about 80% involved revenue flights. PSM+ICR events in turboprop operations were occurring at 6 ± 3 events per year. About half of the accidents involving turboprop aircraft in the transport category occurred during the takeoff phase of flight. About 63% of the accidents involved a loss of control, with most of those occurring after a propulsion system malfunction during takeoff. Seventy percent of the ‘power plant malfunction during takeoff’ events led to a loss of control, either immediately or on the subsequent approach to land.

Propulsion system failures resulting in an uncommanded total power loss were the most common technical events. ‘Shut down by crew’ events included those where either a malfunction of the engine occurred and the crew shut down the engine, or where one engine malfunctioned and the other (wrong) engine was shut down. Fifty percent of the ‘shut down by crew’ events involved the crew shutting down the wrong engine, half of which occurred on training flights.

Failure Cues

The report’s occurrence data indicated that flight crews did not recognize the propulsion system malfunction from the symptoms, cues, and/or indications. The symptoms and cues were, on occasion, misdiagnosed, resulting in inappropriate action. In many of the events with inappropriate actions, the symptoms and cues were totally outside of the pilot’s operational and training experience base.

The report stated that to recognize power plant malfunctions, the entry condition symptoms and cues need to be presented during flight crew training as realistically as possible. When these symptoms and cues cannot be presented accurately, training via some other means should be considered. The need to accomplish failure recognition emerges from the analysis of accidents and incidents that were initiated by single power plant failures which should have been, but were not, recognized and responded to in an appropriate manner.

While training for engine failure or malfunction recognition varied, it often involved the pilot’s reaction to a single piece of data (one instrument or a single engine parameter), as opposed to assessing several data sources to gain information about the total propulsion system. Operators reported that little or no training was given on how to identify a propulsion system failure or malfunction.

There was little data to identify which cues, other than system alerts and annunciators, the crews used or failed to use in identifying the propulsion system malfunctions. In addition, in cases where the crews did not recognize the power plant malfunction or which power plant was malfunctioning, the report was unable to determine whether they had been miscued by aircraft systems, displays, other indications, or each other.

Effect of Auto-feather Systems

The influence of auto-feather systems on the outcome of the events was also examined. The ‘loss of control during takeoff’ events were specifically addressed, since this was the type of problem and flight phase for which auto-feather systems were designed to aid the pilot. In 15 of the events, auto-feather was fitted and armed (and was therefore assumed to have operated). In five of the events, an auto-feather system was not fitted, and in the remaining six, the auto-feather status was not known. Therefore, in at least 15 of 26 events, the presence of auto-feather failed to prevent the loss of control. This suggests that, whereas auto-feather is undoubtedly a benefit, control of the airplane is being lost for reasons other than excessive propeller drag.

Training Issues

In early generation jet and turboprop aircraft, flight engineers were assigned the duties of recognizing and handling propulsion system anomalies. Flight engineers received specific training on these duties under the requirements of CFR Part 63 – Certification: Flight Crew Members Other than Pilots, Volume 2, Appendix 13. Because an individual progressed from flight engineer through co-pilot to pilot, all pilots trained under this practice received power plant malfunction recognition training. The majority of pilots from earlier generations were likely to see several engine failures during their careers, and failures were common enough to be a primary topic of discussion. It was not clear how current generation pilots learned to recognize and handle propulsion system malfunctions.

At the time of the report, pilot training and checking associated with propulsion system malfunctions concentrated on emergency checklist items, which on most aircraft were typically limited to an engine fire, in-flight shutdown and re-light, and low oil pressure. In addition, the training and checking covered the handling task following engine failure at or close to V1. Pilots generally were not exposed in their training to the wide range of propulsion system malfunctions that can occur. No evidence was found of specific pilot training material on the subject of propulsion system malfunction recognition on modern engines.

A broad range of propulsion system malfunctions can occur, each with its own associated symptoms. If the pilot community is, in general, exposed only to a very limited portion of that envelope, it is probable that many of the malfunctions that occur in service will be outside the experience of the flight crew. It was the view of the research group that a foundation in propulsion system malfunction recognition was necessary during basic pilot training and type conversion. This should be reinforced during recurrent training with exposure to the extremes of propulsion system malfunction (e.g., the loudest, most rapid, most subtle). This, at least, should ensure that the malfunction was not outside the pilot’s experience, as was often the case.

The report also emphasized that “Although it is important to quickly identify and diagnose certain emergencies, the industry needs to effect cockpit/aircrew changes to decrease the likelihood of a too-eager crew member in shutting down the wrong engine”. The report further noted that negative transfer had been observed, because initial or ab-initio training was normally carried out in aircraft without auto-feather systems, where major attention was placed on the need for rapid feathering of the propeller(s) in the event of engine failure. On most modern turboprop commercial transport airplanes, which are fitted with auto-feather systems, this training can lead to over-concentration on the propeller condition at the expense of the most important task: flying the airplane.

Furthermore, both negative training and transfer were most likely to occur at times of high stress, fear, and surprise, such as may occur in the event of a propulsion system malfunction at or near the ground.

Loss of control may be due to a lack of piloting skills or it may be that preceding inappropriate actions had rendered the aircraft uncontrollable regardless of skill. The recommended solutions (even within training) would be quite different for these two general circumstances. In the first instance, it is a matter of instilling through practice the implementation of appropriate actions without even having to think about what to do in terms of control actions. In the second instance, there is a serious need for procedural practice. Workload, physical and mental, can be very high during an engine failure event.

Training Recommendations

The report made a number of recommendations to improve pilot training. With specific reference to turboprop pilot training, the report recommended:

- Industry should provide training guidelines on how to recognize and diagnose the engine problem by using all available data, in order to provide the complete information state of the propulsion system.

- Industry-standardized training for asymmetric flight.

- Review stall recovery training for pilots during takeoff and go-around with a focus on preventing confusion during low-speed flight with an engine failure.

American Eagle Flight 3379 uncontrolled collision with terrain. Morrisville, North Carolina December 13th, 1994 Photo from GenDisasters.com

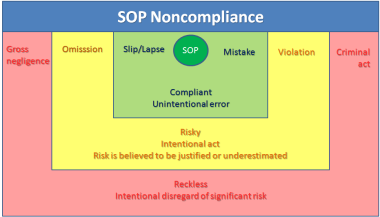

Error types

Errors in integrating and interpreting the data produced by propulsion system malfunctions were the most prevalent, and the most varied in substance, of all error types across events. This might be expected given the task pilots face in propulsion system malfunction (PSM) events: integrating and interpreting data both between or among engines and over time in order to determine what is happening and where (i.e., in which component). The error data clearly indicated that additional training, both event-specific and on system interactions, is required.

Data integration

The same failure to integrate relevant data resulted in instances where action was taken on the wrong engine. These failures to integrate data occurred both when engine indications were changing rapidly (that is, more saliently) and when they were changing more slowly over time.

Erroneous assumptions

The second category of errors related to interpretation involved erroneous assumptions about the relationship between or among aircraft systems and/or the misidentification of specific cues during the integration/interpretation process. Errors related to erroneous assumptions should be amenable to reduction, if not elimination, through the types of training recommended by the workshop. Errors due to misidentification of cues need to be evaluated carefully for the potential for design solutions.

Misinterpretation of cues

A third significant category of errors leading to inappropriate crew responses under “interpret” was that of misinterpretation of the pattern of data (cues) available to the crew for understanding what was happening and where in order to take appropriate action. Errors of this type may be directly linked to failures to properly integrate cue data because of incomplete or inaccurate mental models at the system and aircraft levels, as well as misidentification of cues. A number of the events included in this subcategory involved the misinterpretation of the pattern of cues because of the similarity of cue patterns between malfunctions with very different sources.

Crew communication

A fourth error category involved the failure to obtain relevant data from crew members. The failure to integrate input from crew members into the pattern of cues was considered important for developing recommendations regarding crew coordination. It also highlighted the fact that inputs to the process of developing a complete picture of relevant cues for understanding what is happening and where can, and often must, come from other crew members as well as from an individual’s cue-seeking activity. This error type was different from “not attending to inputs from crew members”, which would be classified as a detection error.

System knowledge

Knowledge of system operation under non-normal conditions was inadequate or incomplete and produced erroneous or incomplete mental models of system performance under non-normal conditions. The inappropriate crew responses were based on errors produced by faulty mental models at either the system or aircraft level.

Improper strategy and/or procedure and execution errors

The selection of an inappropriate strategy or procedure featured prominently in the events and included deviations from best practice and choosing to reduce power on one or both engines below a safe operating altitude. Execution errors included errors made in the processing and/or interpretation of data or those made in the selection of the action to be taken.

2. U.S. Army ‘Wrong Engine’ Shutdown Study

The United States (U.S.) Army conducted a study to see whether pilots’ reactions to single-engine emergencies in dual-engine helicopters were a systemic problem and whether the risks of such actions could be reduced. The goal was to examine errors that led pilots to shut down the wrong engine during such emergencies (‘The Wrong Engine Study’: Wildzunas, R.M., Levine, R.R., Garner, W., and Braman, G.D. (1999). Error analysis of UH-60 single-engine emergency procedures (USAARL Report No. 99-05). Fort Rucker, AL: U.S. Army Aeromedical Research Laboratory).

The research involved the use of surveys and simulator testing. Over 70% of survey respondents believed there was the potential for shutting down the wrong engine, and 40% confirmed that they had confused the power control levers (PCLs) during actual or simulated emergency situations. In addition, 50% of those who recounted confusion confirmed they had shut down the “good engine” or moved the good engine’s PCL. When asked what they felt had caused them to move the wrong PCL, 50% indicated that their action was based on an incorrect diagnosis of the problem. Other reasons included the design of the PCL, the design of the aircraft, use of night vision goggles (NVG), inadequate training, negative habit transfer, rushing the procedure and inadequate written procedures. When asked how to prevent pilots from selecting the wrong engine, 75% recommended training solutions and 25% engineering solutions.

The simulator testing (n=47) found that 15% of the participants reacted incorrectly to the selected engine emergency, and 25% of the erroneous reactions resulted in dual-engine power loss and simulated fatalities. Analysis of reactions to the engine emergencies identified difficulties with the initial diagnosis of the problem (47%) and errors in the action taken (32%). Other errors included failure to detect system changes, failure to select a reasonable goal based on the emergency (get home versus land immediately), and failure to perform the designated procedure. Responses ranged from immediately recognizing and correcting the error to shutting down the “good” engine, resulting in loss of the helicopter. Although malfunctions that require single-engine emergency procedures were relatively rare, the study indicated that there was a one in six likelihood that, in these types of emergencies, the crew would respond incorrectly.

The pattern of cognitive errors was very similar to the PSM+ICR error data. The functions contributing to the greatest number of errors were diagnostic (interpretation) and action (execution). The largest difference was in the major contribution of strategy/procedure errors in the PSM+ICR database, whereas there were comparatively few goal, strategy, and procedure errors in the U.S. Army simulator study. The survey data indicated that pilots felt that improper diagnosis and lack of training were major factors affecting their actions on the wrong engine. This supported the findings of the PSM+ICR report that included the need for enhanced training to improve crew performance in determining what is happening and where.

3. Additional Human Factors considerations

3.1 Diagnostic skills

Diagnostic skills are recognized as having important implications for operators of complex socio-technical systems, such as aviation (Wiggins, M. W. (2015). Cues in diagnostic reasoning. In M. W. Wiggins and T. Loveday (Eds.), Diagnostic expertise in organizational environments (pp. 1-13). Aldershot, UK: Ashgate.).

The development of advanced technologies and their associated interfaces and displays has highlighted the importance of cue acquisition and utilization to accurately and efficiently determine the status of a system before responding appropriately to that situation. Moreover, cue-based processing research has significant implications for designing diagnostic support systems, interfaces, and training (Wiggins, M. W. (2012). The role of cue utilization and adaptive interface design in the management of skilled performance in operations control. Theoretical Issues in Ergonomics Science, 15, 1-10).

In addition, miscuing (the activation of an inappropriate association in memory by a salient feature, which delays or prevents the accurate recognition of an object or event) and/or poorly differentiated cues have been implicated in several major aircraft accidents, including Helios Airways Flight 522 and Air France Flight 447 (Loveday, T. (2015). Designing for diagnostic cues. In M.W. Wiggins and T. Loveday (Eds.), Diagnostic expertise in organizational environments (pp. 49-60). Aldershot, UK: Ashgate.).

It has also been argued that cue-based associations comprise the initial phase of situational awareness (O’Hare, D. (2015). Situational awareness and diagnosis. In M. W. Wiggins and T. Loveday (Eds.), Diagnostic expertise in organizational environments (pp. 13-26). Aldershot, UK: Ashgate).

Furthermore, it has been demonstrated that individuals and teams with higher levels of cue utilization have superior diagnostic skills and are better equipped to respond to non-normal system states (Loveday, T., Wiggins, M. W., & Searle, B. J. (2013). Cue utilization and broad indicators of workplace expertise. Journal of Cognitive Engineering and Decision-Making, 8, 98-113).

The ‘PSM+ICR’ study identified recurring problems with crews’ diagnosis of propulsion system malfunctions, in part because the cues, indications, and/or symptoms associated with the malfunctions were outside of the pilots’ previous training and experience. Consistent with the U.S. Army study, this often led to confusion and inappropriate responses, including shutting down the operative engine.

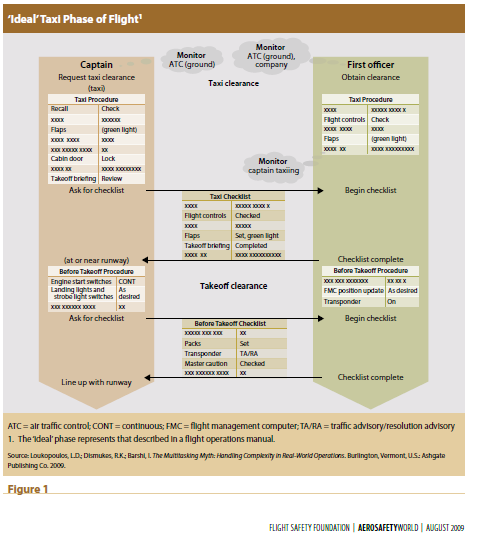

3.2 Situational Awareness

Situational awareness (SA) is a state of knowledge which is achieved through various situation assessment processes (Endsley, M.R. (2004). Situation awareness: Progress and directions. In S. Banbury & S. Tremblay (Eds.), A cognitive approach to situation awareness: Theory and application (pp. 317–341). Aldershot, UK: Ashgate Publishing).

This internal model is believed to be the basis of decision-making, planning, and problem solving. Information in the world must be perceived, interpreted, analyzed for significance, and integrated with previous knowledge, to facilitate a predictive understanding of a system’s state. SA is having an accurate understanding of what is happening around you and what is likely to happen in the near future. Team SA is the degree to which every team member possesses the SA required for their responsibilities (Endsley, M. R. & Jones, W. M. (2001). A model of inter- and intrateam situation awareness: Implications for design, training and measurement. In M. McNeese, E. Salas & M. Endsley (Eds.), New trends in cooperative activities: Understanding system dynamics in complex environments. Santa Monica, CA: Human Factors and Ergonomics Society).

The three stages of SA formation have traditionally included:

- Perception of environmental elements (important and relevant items in the environment must be perceived and recognized; this includes elements within the aircraft, such as system status and warning lights, and elements external to the aircraft, such as other aircraft and obstacles);

- The comprehension of their meaning; and

- The projection of their status following a change in a variable (with sufficient comprehension of the system and appropriate understanding of its behavior, an individual can predict, at least in the near term, how the system will behave. Such understanding is important for identifying appropriate actions and their consequences).

Dominguez et al. (1994) proposed that SA comprised the following four elements: (Dominguez, C. (1994). Can SA be defined? In M. Vidulich, C. Dominguez, E. Vogel, & G. McMillan, Situation awareness: Papers and annotated bibliography (pp. 5-16). Wright-Patterson AFB, OH: Armstrong Laboratory. Flight Safety Foundation. (2009)

- Extracting information from the environment;

- Integrating this information with relevant internal knowledge to create a mental picture of the current situation;

- Using this picture to direct further perceptual exploration in a continual perceptual cycle; and

- Anticipating future

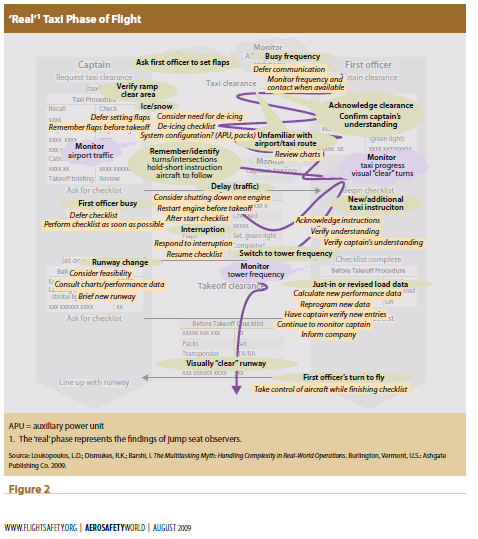

Many factors can induce a loss of situational awareness. Errors can occur at each level of the process. (Crew resource management. Operator’s guide to human factors in aviation. Alexandria, VA: Author. Also, see Skybrary Situational Awareness article

A loss of situational awareness could occur when there was a failure at any one of these stages resulting in the pilot and/or crew not having an accurate mental representation of the situation.

The following list shows a series of factors related to loss of situational awareness, and the conditions contributing to those errors:

- Data are not observed, either because they are difficult to observe or because the observer’s scanning is deficient due to:

  - Attention narrowing

  - Passive, complacent behavior

  - High workload

  - Distractions and interruptions

  - Visual illusions

- Information is misperceived. Expecting to observe something and focusing attention on that belief can cause people to see what they expect rather than what is actually happening.

- Use of a poor or incomplete mental model due to:

  - Deficient observations

  - Poor knowledge/experience

- Use of a wrong or inappropriate mental model, over-reliance on the mental model, and failure to recognize that the mental model needs to change.

Human operators may interpret the nature of the problem incorrectly, which leads to inappropriate decisions because they are solving the wrong problem (an SA error) or operators may establish an accurate picture of the situation, but choose an inappropriate course of action (error of intention).

Endsley (1999) reported that perceptual issues accounted for around 80% of SA errors, while comprehension and projection issues accounted for 17% and 3% of SA errors, respectively. That the distribution of errors was skewed toward perceptual issues likely reflected that errors at Levels 2 and 3 can lead to behaviors (e.g., misdirection of attentional resources) that produce Level 1 errors (Endsley, M. R. (1999). Situation awareness in aviation systems. In J. A. Wise, V. D. Hopkin, & D. J. Garland (Eds.), Handbook of aviation human factors (pp. 257-275). Mahwah, NJ: Lawrence Erlbaum.).

St. John and Smallman (2008) noted that SA is negatively affected by interruptions and multi-tasking (St. John, M. S., & Smallman, H. S. (2008). Staying up to speed: Four design principles for maintaining and recovering situation awareness. Journal of Cognitive Engineering and Decision Making, 2, 118-139).

One of the difficulties of maintaining SA is recovering from a reallocation of cognitive resources as tasks and responsibilities change in a dynamic environment. In many situations, interruptions and multi-tasking introduce conditions for change blindness or for problems with cue acquisition, understanding, and utilization. Change blindness is the striking failure to see large changes that would normally be noticed easily (see Simons, D. J., & Rensink, R. A. (2005). Change blindness: Past, present, and future. Trends in Cognitive Sciences, 9, 16-20).

For a pilot, situational awareness means having a mental picture of the existing inter-relationship of location, flight conditions, configuration and energy state of the aircraft as well as any other factors that could be about to affect its safety such as proximate terrain, obstructions, airspace, and weather systems. The potential consequences of inadequate situational awareness include CFIT, loss of control, airspace infringement, loss of separation, or an encounter with wake vortex turbulence.

There is a substantial amount of aviation-related situational awareness research. Much of this research supports the following concepts for mitigating loss of situational awareness. These include the need to be fully briefed in order to completely understand the particular task at hand. That briefing should also include a risk management or threat and error management assessment. Another important mitigation strategy is distraction management. It is important to minimize distraction; however, if a distraction has occurred during a particular task, it is important to ‘back up’ a few steps and check whether the intended sequence has been followed.

3.3 Stress

Stress can be defined as a process by which certain environmental demands evoke an appraisal process in which perceived demand exceeds resources and results in undesirable physiological, psychological, behavioral or social outcomes. This means that if a person perceives that he or she is not able to cope with a stressor, it can lead to negative stress reactions. Stress can have many effects on a pilot’s performance. These include cognitive issues such as narrowed attention, decreased search activity, longer reaction time to peripheral cues, decreased vigilance, and increased errors in performing operational procedures.

Stress management techniques include simulator training to develop proficiency in handling non-normal flight situations that are not encountered often and the anticipation and briefing of possible scenarios and threats that could arise during the flight even if they are unlikely to occur (e.g. engine failure). These techniques help prime a crew to respond effectively should an emergency arise.

Why did the pilots shut down the wrong engine? (4)

Besides the scientific literature reviewed by the Taiwanese investigation authority, in September 2011 Safety Science published a study that sought to demonstrate that Schema Theory (as incorporated in the Perceptual Cycle framework) offers a compelling causal account of human error. Schema Theory offers a systems perspective, focusing on human activity in context to explain why apparently erroneous actions occurred even though they may have appeared appropriate at the time. This is illustrated in a case study of the pilots’ actions preceding the 1989 Kegworth accident (Lessons learned from British Midland Flight 92, Boeing B-737-400, January 8, 1989), which offers a very interesting approach to human error in aviation. (Katherine L. Plant, Neville A. Stanton, Why did the pilots shut down the wrong engine? Explaining errors in context using Schema Theory and the Perceptual Cycle Model. Transportation Research Group, School of Civil Engineering and the Environment, University of Southampton, Highfield, Southampton SO17 1BJ, United Kingdom)

What is a Schema?

“For the purposes of this paper, a Schema will be considered as an organized mental pattern of thoughts or behaviors to help organize world knowledge (Neisser, 1976). The concept of Schemata is an attempt to explain how we represent aspects of the world in mental representations and use these representations to guide future behaviors. They provide instruction to our cognition and organize the mass of information we have to deal with (Chalmers, 2003). Our knowledge about everything can be considered as networks of information that become activated as we experience things and function according to Schematic principles (Mandler, 1984). It is not analytic knowledge that is required for effective decision making in a naturalistic setting of a complex socio-technical system, such as a flight deck, but instead intuition. This intuition can be in the form of metaphors or storytelling that allow the perceiver to draw parallels, make inferences and consolidate experiences. It is this area of intuition that Schematic processing will be influential.

When a person carries out a task, Schemata affect and direct how they perceive information in the world, how this information is stored and then activated to provide them with past experiences and the knowledge about the actions required for a specific task (Mandler, 1984).”

The Kegworth Disaster (1989, UK)

“At 1845 on the 8th of January 1989 a Boeing 737-400 landed at London Heathrow Airport after completing its first shuttle from Belfast Aldergrove Airport. At 1952 the plane left Heathrow to return to Belfast with eight crew and 118 passengers. As the aircraft was climbing through 28,300 ft the outer panel of a blade in the fan of the No. 1 (left) engine detached. This gave rise to a series of compressor stalls in the No. 1 engine, which resulted in the airframe shuddering, smoke and fumes entering the cabin and flight deck and fluctuations of the No. 1 engine parameters. The crew made the decision to divert to East Midlands Airport. Believing that the No. 2 (right) engine had suffered damage, the crew throttled it back. The shuddering ceased as soon as the No. 2 engine was throttled back, which persuaded the crew that they had correctly dealt with the emergency, so they continued to shut it down. The No. 1 engine operated apparently normally after the initial period of vibration, during the subsequent descent, however, the No. 1 engine failed, causing the aircraft to strike the ground 2 nm from the runway. The ground impact occurred on the embankment of the M1 motorway. Forty-seven passengers died and 74 of the remaining 79 passengers and crew suffered serious injury. (The synopsis was adapted from the official Air Accident Investigation Branch report, AAIB, 1990.)”

Schematic analysis of the Kegworth crash

“As highlighted in the synopsis of the Kegworth accident, many human errors contributed to the crash. This section accounts for the actions of the pilots in the Kegworth accident from a Schema perspective, using the five key contributory factors presented in the AAIB report as the structure (italics denote information paraphrased from the report).

Fundamental error: Shut down the wrong engine due to inappropriate diagnosis of smoke origin. The pilots believed that smoke entering the flight deck was coming forward from the passenger cabin. Their appreciation of the air conditioning system contributed to the pilots’ belief that this meant a fault with the right engine (instead of the damaged left one).

For the purpose of this paper, the Schematic representation of the air conditioning system will be dealt with in detail, though for completeness it is worth noting that the AAIB report states that the Captain believed the First Officer had seen positive engine instrument indications and therefore accepted the First Officer’s assessment of the situation. The decision made by the pilots about which engine was damaged was partly based on their assumed knowledge of the air conditioning system (Individual). This is a classic example of how people rely on their Schemata and mental representations. The Captain’s appreciation of the air conditioning system was correct for other types of aircraft in which he had acquired substantial flying experience. This experience would have resulted in the Captain having and utilising a Schema based on his knowledge that a problem with the right engine would mean smoke in the passenger cabin, which could blow forward onto the flight deck due to the configuration of the air conditioning system (world), resulting in the wrong engine being shut down (action). In a previous generation of aircraft (i.e. series 300 rather than series 400) this would be an entirely accurate mental representation; however, without the time and experience to develop an appropriate Schema, the Captain used a ‘default’ Schema based on previous similar situations. Reason (1990) states that errors are due to the human tendency towards the familiar, similar and expected, because people favor using Schemata that are routine to them.

Similarly, when conducting task analyses for Navy tactical decision makers, Morrison et al. (1997) found that 87% of information transactions associated with situation assessment involved feature-matching strategies, i.e. matching the observed event to those previously experienced. The underlying principle of Schema Theory is the use of previous experience to develop a set of expectations, even if they subsequently turn out to be wrong, as was the case here: the air conditioning system in the 400 series did not work in the way the pilots expected. In Norman’s (1981) view of Schema triggering components, the smoke on the flight deck, thought to have come forward from the passenger cabin, was enough to trigger the Schema for this situation. This, therefore, led to an erroneous classification of the situation. Thus the action was intended and correct for the assumed situation (right engine damage) but not for the actual situation (left engine damage). In the Perceptual Cycle Model, Schemata are anticipations and are the medium by which the past affects the future, i.e. information already acquired determines what will be picked up (Hanson and Hanson, 1996). This process is clearly evident in this example.

The error of shutting down the wrong engine seems so fundamental, but it is only with the benefit of hindsight that the authors know more than the pilots involved did at the time. The question that the ‘new view’ of error would want to ask is why the contradictions that are so easy to see now were not interpreted at the time, i.e. why did the assessments and actions make sense to the pilots at the time? (Dekker, 2006). Operators are often victims of Schema fixation, which prevents them from changing their representation and detecting the error.

It must also be noted that the emergency nature of the situation would have influenced the crew’s decision-making abilities. It is far more comfortable and reassuring to impose structure on a situation and deal with a known rather than an unknown problem, which often results in unnecessary haste and failure to question a decision (Berman and Dismukes, 2006).

The following five contributory factors were identified in the AAIB report as the key reasons why the fundamental error of shutting down the wrong engine occurred.

Contributory Factor 1: Situation (combination of engine vibration, noise, and smell of fire) was outside the crew’s training and experience.

The error literature describes inaccurate or incomplete Schemata as ‘buggy’ (Groeger, 1997). It can be argued that the crew in the Kegworth accident had ‘buggy’ Schemata for the situation they were in: they had not been in that situation before and therefore had not built up an accurate mental representation of what was going on and how to deal with it. The crew did, however, have extensive knowledge of the previous generation of the aircraft type, which in this case appeared to influence their decision making.

Operators in complex systems are in a state of both mindfulness and ambivalence to allow for awareness-based detection. In other words, operators are faced with situations that are partly novel (in this case, fan blade rupture in the left engine causing smoke and vibration outside of the crew’s training and experience) and partly familiar (in this case, the crew knew from their experience of other aircraft types that smoke entering the flight deck from the cabin was likely to mean a problem with the right engine). This dual state of belief and doubt, however, is hard to achieve, especially in time-critical situations.

The Schematic influences acting on the pilots in the Kegworth accident can be further distinguished between the summary of the experience, in this case what the vibration, noise and smell meant to the pilots (left engine damage), and the actions that occur at a moment in time, in this case throttling back and shutting down the wrong engine. It is impossible to personally experience every eventuality for a complex system such as a flight deck; that is why training, for example case-based learning (O’Hare et al., 2010), is so critical, because it allows people to develop Schemata that can be utilised when required in a real-life situation. The incident of the fan blade rupture and its associated symptoms (fumes, vibration, etc.) was a rare event and not included in training, nor had the crew any first-hand experience of it, and therefore they did not have the Schema available to deal with it adequately.

Contributory Factor 2: Premature reaction to problem, contrary to training. The speed at which the pilots acted was contrary to their training and the Operator’s procedures.

Human action takes place at different cognitive levels, distinguishing between conscious, attention-requiring actions and unconscious actions that do not require the presence of thought. Automatic activation is thus likely to lead to automatic action responses, hence the premature reaction to the problem. At the time, the Captain thought he had made the correct assessment of the situation and had no reason to suspect he had made any erroneous decisions. Problems with data interpretation are exacerbated when people either explain away a symptom or take immediate action to counteract it (as occurred here) and later forget to integrate data that may be available (see Contributory Factor 3).

Controlled processes (i.e. willed and guided attention) are only activated when either a task is too difficult (a novel situation) or errors are made. At this stage the pilots were not aware that they were facing a novel situation, i.e. fan blade rupture rather than the more routine, trained-for engine fire, nor were they aware that their decision was erroneous. Therefore a Schematic perspective of the attention processes the pilots were engaged in would suggest that these were automatic, and thus relatively instantaneous, based on their assessment of the situation, which can account for the premature reaction.

Contributory Factor 3: Lack of equipment monitoring and assimilation of instrument indications. The engine parameters appeared to be stable, even though the vibration continued to show on the Flight Data Recorder (FDR) and was felt by passengers; it was not, however, perceived by the pilots. Additionally, the crew looking at the engine instruments did not get an indication of which engine was faulty. Furthermore, the engine vibration gauges that were part of the Engine Instrument System (EIS) were not included in the Captain’s visual scan, as in previous models of aircraft they were considered unreliable.

One of the defining features of Schemata is their emphasis on the role of past experience in guiding the way people perceive and act in the world. After the Kegworth accident, the Captain stated that he rarely included the engine vibration gauges in his visual scans, as he believed them to be unreliable and prone to false readings; this belief was based on his experience of these instruments in other aircraft. The crew expected the engine vibration gauges to be unreliable based on their past experiences; therefore, their ‘scanning Schema’ would not have included these instruments once it was activated. Expectancy can override any external cues to the contrary. The active Schemata available to the Captain were not based on the current model of the aircraft, as he had only 23 h of flying experience in it. The prevailing and enduring view of the unreliability of the engine vibration gauges was formed from over 13,000 h of flying experience with other aircraft types. As a result, the Captain was left with a faulty Schematic representation for the scanning process.

The engine instrument contributory factor highlights how systemic factors can play a vital role in triggering error. Although the new engine vibration displays (digital rather than mechanical pointers) were technically more reliable, 64% of British Midland Airways pilots thought the new display system was not effective at drawing attention to changes in engine parameters, and 74% preferred the old mechanical pointers (AAIB, 1990). It is likely that such views were discussed in crew rooms and that colleagues’ opinions would have been an influencing factor. Additionally, it would appear that training failed to demonstrate how the new engine vibration gauges were more accurate. Training on the new EIS was included in the 1-day course that explained the differences between the Series 300 and 400 aircraft. There was no flight simulator equipped with the new EIS at the time; therefore, the first time a pilot was likely to see abnormal indications on the instruments, such as the engine vibration gauges, was in flight with a failing engine (AAIB, 1990). The crew therefore had no real experience of the EIS and its individual gauges and would not have developed a relevant Schema for it; the Schemata they did have were not accurate for the situation.

Missing events, known as negative cues, are usually a stumbling block for novices because the experience possessed by experts allows them to form and use expectancies. When these expectancies are misleading, however, the expert may still fall foul of missing events, in this case not assimilating the information from the engine vibration gauges.

The AAIB report (1990) recommended that the Civil Aviation Authority (CAA) review training procedures to ensure crews are provided with EIS display familiarisation in a simulator to acquire the necessary visual and interpretive skills; in other words, to develop Schemata for a range of failures and their representation on an EIS, such as on the engine vibration gauges. These systemic influences would have played a part in creating the Schemata the pilots relied on and therefore influenced their actions. Short courses and user manuals for converting to the Series 400 were unlikely to prevail over the combination of the Captain’s experiences, flying hours and expectations in the immediate term. Reactions to the engine vibration gauges are modified by general experience; old views are liable to prevail unless the technical knowledge of pilots is effectively revised. Because Schemata reside in long-term memory, modifying them is only likely to occur over relatively long time scales rather than the shorter time scales associated with dynamic task performance.

When feedback is discrepant from an operator’s expectation, this loss of control requires extensive revision of the mental representation and diagnostic actions, which can often be ill afforded in time-critical situations. Therefore, in the Kegworth accident, had the readings on the engine vibration gauges been noted, it would have required a laborious diagnosis to determine whether the displayed values were accurate. The lack of visual scanning of the vibration gauges led to a ‘description error’, meaning that the relevant information needed to form the appropriate intention was not available. An appropriate intention was formed, but it was based on an insufficient description, contributing to the wrong engine being shut down.

Contributory Factor 4: Cessation of smoke, noise, and vibration when the engine was throttled back. Believing that the No. 2 (right) engine had suffered damage, the crew throttled it back and eventually shut it down. As soon as this happened, the shuddering ceased and there was a cessation of the smoke and fumes, even though these would have been coming from the left engine. This ‘chance’ occurrence (that the left engine ceased to surge as the right one was throttled back) caused the crew to believe that their action had had the correct effect.

People tend to assume that their version of the world is correct whenever events happen in accordance with their expectations. This phenomenon is termed ‘confirmation bias’ and refers to people seeking information that is likely to confirm their expectations. Nearly two-thirds of driving accidents are the result of inappropriate expectations or interpretations of the environment. In the Kegworth example, the pilots believed they had a good picture of the system (i.e. their Schema was that the right engine was damaged), and the two sequential events that happened were throttling back the right engine (action) and the cessation of vibration and fumes from the left engine (world), convincing the crew that their assessment was correct. The reduction in the level of symptoms (engine vibration and fumes) lasted for 20 min and was compatible with the pilots’ expectations of the outcome of throttling back the No. 2 engine; it is therefore clear how this would have been taken as evidence that the correct action had been performed.

This confirmation bias was exacerbated by the lack of equipment monitoring by the pilots (see Contributory Factor 3) and the time-critical nature of the situation.

Contributory Factor 5: Lack of communication from the passengers and cabin crew. In the cabin, the passengers and the cabin attendants saw signs of fire from the left engine. The Captain broadcast to the passengers that there was trouble with the right engine which had produced smoke and was shut down. Many of the passengers were puzzled by the commander’s reference to the right engine, but none brought the discrepancy to the attention of the cabin crew, even though several were aware of continuing vibration.

One of the key features of the reciprocal and cyclical nature of the Perceptual Cycle Model is that not only do Schemata affect how people act in the world (i.e. direct action), but information from the world can also affect Schemata. Therefore, had the flight deck crew been informed of the confusion the passengers were experiencing due to the apparent misdiagnosis of smoke origin, their Schema might have been revised accordingly. This might have led them to realize that they did not have an accurate internal representation of the situation, and thus they might have adjusted their actions and the outcome could have been different. This contributory factor again emphasizes how the Perceptual Cycle takes the system as a whole into account. Whilst the framework models the perception and action of an individual (in this case the flight crew), the world in which the crew interact is the systemic element of the cycle. As previously mentioned, systems are not a single entity but are built up of various layers. Colleagues are part of these layers, and they can have an influencing effect on the perception and action of the operators at the sharp end. Operators are often unaware of the ‘bugs’ in their Schemata, and feedback (potentially from people or machines) is one way to avoid this miscalibration of knowledge. Systems in which feedback is poor are more likely to have miscalibrated operators, which appears to have been the case in the Kegworth accident.

The AAIB (1990) report suggested that the issue of ‘role’ influenced the passengers’ acceptance of the Captain’s cabin address. Lay passengers generally assume that the flight crew will have all the information and knowledge available to them to have made an informed and correct decision. Similarly, the report suggests that although the cabin crew would also have been confused by the address, they had no reason to suspect the pilots had not assimilated all of the engine parameter information available to them on the flight deck. In addition, cabin crew are aware that their presence on the flight deck could be distracting, especially when the flight crew are dealing with an emergency. Whilst the flight crew can be considered to have had incorrect Schemata for the situation, the cabin crew also did not have the Schemata available to them to make them question the pilots. The AAIB report recommended joint training between flight and cabin crew to deal with such circumstances; after such training, both the flight and cabin crews would have revised Schemata for when it is appropriate to coordinate communication in emergency situations. This contributory factor again demonstrates how the wider system plays such an important part in the formation of error, and shows how an error which initially looked to result from the actions of one person is actually a symptom of trouble deeper within the system.

Photo from FAA’s Lessons Learned from Transport Airplane Accidents. British Midland B737 Flight 92 at Kegworth

Discussion

In summary of the Kegworth accident, the unforeseen combination of symptoms was outside the pilots’ training or experience. This led the pilots to base their actions on what experience they did have, which has previously been shown to be the case with decision makers in complex situations. The pilots’ expectations about the engine vibration gauges meant they did not assimilate the readings on both engine vibration indicators. Additionally, the pilots’ Schematic representation of the air conditioning system was not appropriate for the unfamiliar aircraft type, which contributed to the faulty diagnosis of the problem. Therefore, the actions the pilots took were not appropriate for the situation, and information that was available in the world (e.g. knowledge of the cabin crew or vibration gauge information) was not utilized by the pilots to update their Schematic representations and modify their actions. From the explanations of the contributory factors outlined in the AAIB report, it would appear that the Perceptual Cycle Model and Schema Theory offer a good model to structure the actions of the pilots and account for some of the key points highlighted in the modern systems error literature.

The only ‘real explanation of error is that all factors come together’; there is no single cause of failure, but rather a ‘dynamic interplay of multiple contributors’. This was certainly the case in the Kegworth accident: without any one of the factors (including lack of engine vibration gauge monitoring, assumed knowledge of the air system, and lack of crew communication), the accident might not have happened. The Perceptual Cycle framework and Schema Theory can account for these factors and how they influenced the perceptions and actions of the pilots, showing how their actions made sense to them at the time.

The preceding discussion shows how interactions between situation, person and the system as a whole contribute to such misfortunes.”

References

(1) Aviation Safety Council Taipei-Taiwan. Aviation Occurrence Report, 4 February 2015, TransAsia Airways Flight GE235, ATR72-212A, Loss of Control and Crashed into Keelung River Three Nautical Miles East of Songshan Airport. Report Number: ASC-AOR-16-06-001. Date: June 2016.

(2) Sallee, G. P. & Gibbons, D. M. (1999). Propulsion system malfunction plus inappropriate crew response (PSM + ICR). Flight Safety Digest, 18 (11-12), 1-193.

(3) FAA Engine & Propeller Directorate ANE-110. Turbofan Engine Malfunction Recognition and Response, Final Report. July 17, 2009.

(4) Plant, K. L. & Stanton, N. A. Why did the pilots shut down the wrong engine? Explaining errors in context using Schema Theory and the Perceptual Cycle Model. Transportation Research Group, School of Civil Engineering and the Environment, University of Southampton, Highfield, Southampton SO17 1BJ, United Kingdom.

Further reading

- TransAsia Airways Flight GE235 accident Final Report

- Learning from the past: American Eagle Flight 3379, uncontrolled collision with terrain. Morrisville, North Carolina December 13th, 1994.

- Lessons learned from British Midland Flight 92, Boeing B-737-400, January 8, 1989

**********************

By Laura Victoria Duque Arrubla, a medical doctor with postgraduate studies in Aviation Medicine, Human Factors and Aviation Safety. In the aviation field since 1988, Human Factors instructor since 1994. Follow me on facebook Living Safely with Human Error and twitter@SafelyWith. Human Factors information almost every day

_______________________

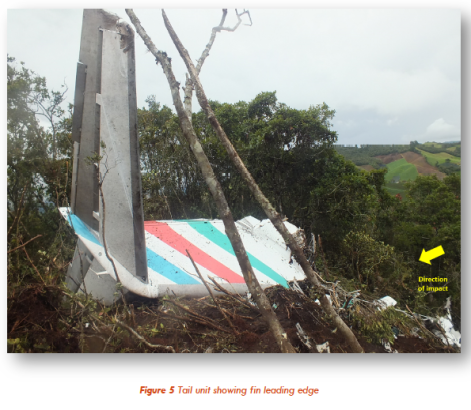

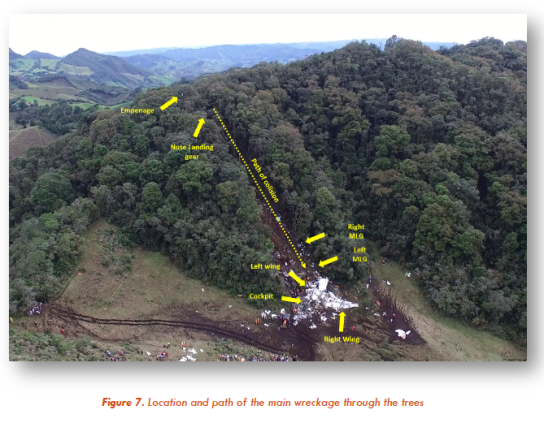

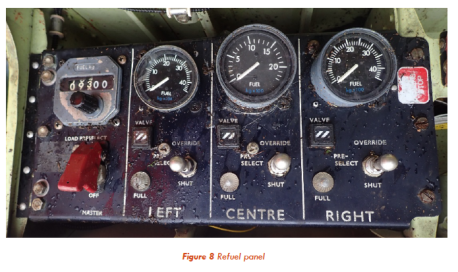

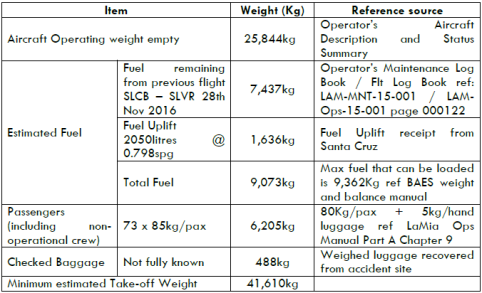

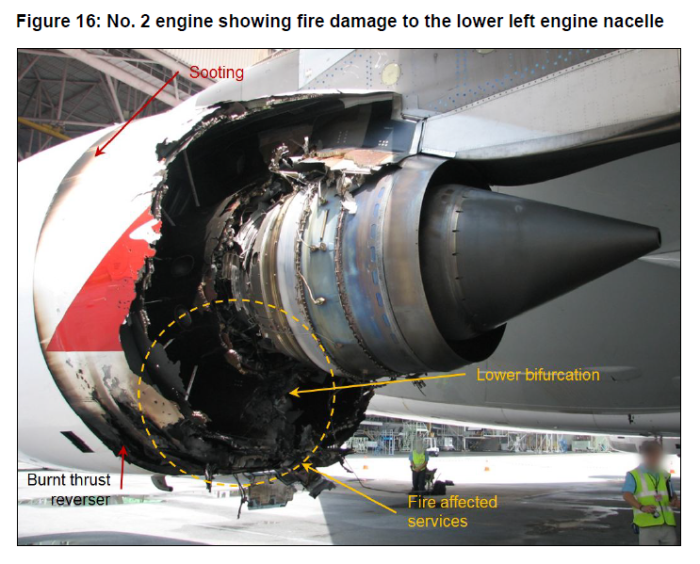

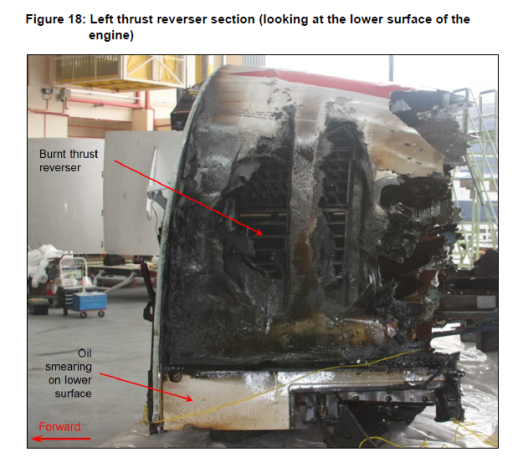

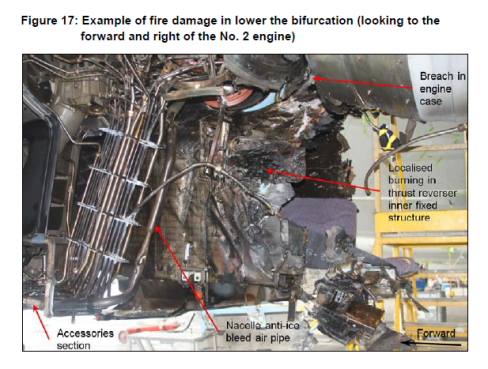

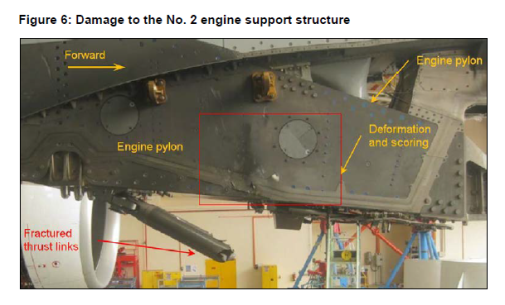

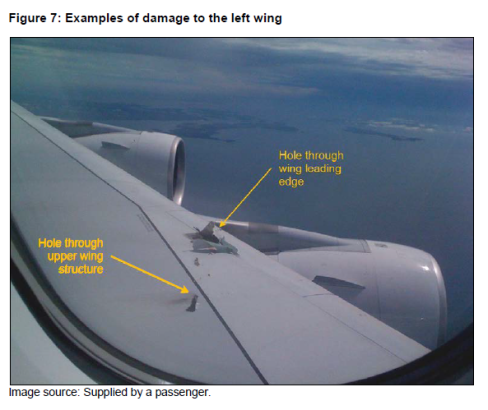

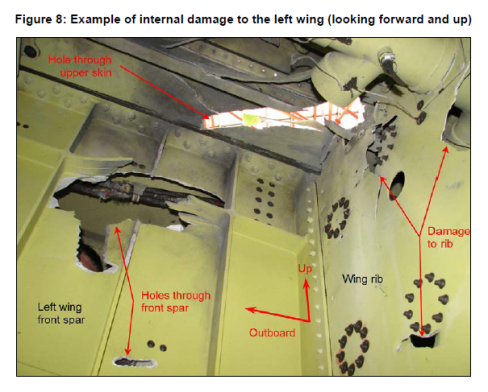

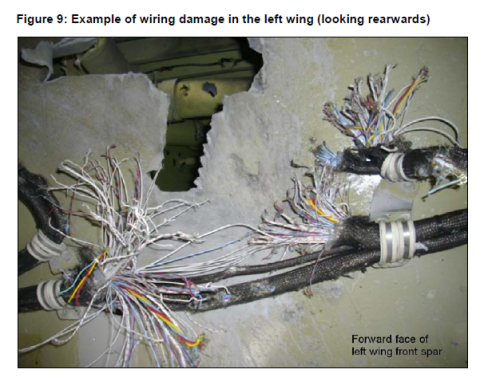

Photo: ATSB Aviation Occurrence Investigation AO-2010-089 Final Report

Photos: ATSB Aviation Occurrence Investigation AO-2010-089 Final Report
