Abstract

Integration of machine learning (ML) components in critical applications introduces novel challenges for software certification and verification. New safety standards and technical guidelines are under development to support the safety of ML-based systems, e.g., ISO 21448 SOTIF for the automotive domain and the Assurance of Machine Learning for use in Autonomous Systems (AMLAS) framework. SOTIF and AMLAS provide high-level guidance but the details must be chiseled out for each specific case. We initiated a research project with the goal to demonstrate a complete safety case for an ML component in an open automotive system. This paper reports results from an industry-academia collaboration on safety assurance of SMIRK, an ML-based pedestrian automatic emergency braking demonstrator running in an industry-grade simulator. We demonstrate an application of AMLAS on SMIRK for a minimalistic operational design domain, i.e., we share a complete safety case for its integrated ML-based component. Finally, we report lessons learned and provide both SMIRK and the safety case under an open-source license for the research community to reuse.

1 Introduction

Machine learning (ML) is increasingly used in critical applications, e.g., supervised learning using deep neural networks (DNN) to support automotive perception. Software systems developed for safety-critical applications must undergo assessments to demonstrate compliance with functional safety standards. However, as the conventional safety standards are not fully applicable for ML-enabled systems (Salay et al., 2018; Tambon et al., 2022), several domain-specific initiatives aim to complement them, e.g., organized by the EU Aviation Safety Agency, the ITU-WHO Focus Group on AI for Health, and the International Organization for Standardization.

In the automotive industry, several standardization initiatives are ongoing to allow safe use of ML in road vehicles. It is evident that functional safety as defined in the established standard ISO 26262 Functional Safety (FuSa) is no longer sufficient for the next generation of advanced driver-assistance systems (ADAS) and autonomous driving (AD). One complementary standard under development is ISO 21448 Safety of the Intended Functionality (SOTIF). SOTIF aims for absence of unreasonable risk due to hazards resulting from functional insufficiencies, including those originating in ML components.

Standards such as SOTIF mandate high-level requirements on what a development organization must provide in a safety case for an ML-based system. However, how to actually collect the evidence — and argue that it is sufficient — is up to the specific organization. Assurance of Machine Learning for use in Autonomous Systems (AMLAS) is one framework that supports the development of corresponding safety cases (Hawkins et al., 2021). Still, when applying AMLAS on a specific case, there are numerous details that must be analyzed, specified, and validated. The research community lacks demonstrator systems that can be used to explore such details.

To address the lack of ML-based demonstrator systems with accompanying safety cases, we embarked on an engineering research endeavor. Engineering research is described in the evolving ACM SIGSOFT Empirical Standards (Ralph et al., 2020) and we consider it synonymous with design science research (Wieringa, 2014; Runeson et al., 2020). Our engineering research was guided by the following design problem:

How to demonstrate and share a complete ML safety case for an open ADAS?

We report results from an industry-academia collaboration on safety assurance of SMIRK, an ML-based open-source software (OSS) ADAS that provides pedestrian automatic emergency braking (PAEB) in an industry-grade simulator. SMIRK is an “original software publication” (Socha et al., 2022) available on GitHub (RISE Research Institutes of Sweden, 2022). In this paper, our main contribution is the carefully described application of AMLAS in conjunction with the development of an ML component. While parts of the framework have been demonstrated before (Gauerhof et al., 2020), we believe this is the first comprehensive use of AMLAS conducted independently from its authors. Moreover, we believe this paper constitutes a pioneering safety case for an ML-based component that is OSS and completely transparent. Thus, our contribution can be used as a starting point for studies on safety engineering aspects such as operational design domain (ODD) extension, dynamic safety cases, and reuse of safety evidence.

Our results show that even an ML component in an ADAS designed for a minimalistic ODD results in a large safety case. Furthermore, we consider three lessons learned to be particularly important for the community. First, using a simulator to create synthetic data sets for ML training particularly limits the validity of the negative examples. Second, evaluation of object detection is non-intuitive and necessitates internal training. Third, the fitness function used for model selection encodes essential tradeoff decisions; thus, the project team must be aligned.

The paper is organized into three main parts:

- Part I: Section 1 contains this introduction. Section 2 contains a background section describing SOTIF, AMLAS, and object detection using YOLO. In Section 3, we share an overview of related work. Finally, Section 4 describes the method used in our R&D project.

- Part II: Sections 5–11 describe the intertwined development and safety assurance of SMIRK. We present an overall system description (Section 5), system requirements (Section 6), system architecture (Section 7), data management strategy (Section 8), ML-based pedestrian recognition component (Section 9), test design (Section 10), and test results (Section 11), respectively.

- Part III: Section 12 reports lessons learned from our R&D project. Section 13 discusses the main threats to validity before Section 14 concludes the paper and outlines future work.

Finally, to ensure a self-contained paper, the Appendix presents the complete AMLAS safety argumentation for the use of ML in SMIRK.

2 Background

This section briefly presents SOTIF and AMLAS, respectively. We also present details of object detection and recognition using YOLO, which are fundamental to understanding the subsequent safety argumentation.

2.1 SOTIF

ISO 21448 SOTIF is a candidate standard under development to complement the established automotive standard ISO 26262 Functional Safety (FuSa). While FuSa covers hazards caused by malfunctioning behavior, SOTIF addresses hazardous behavior caused by the intended functionality. Note that SOTIF covers “reasonably foreseeable misuse” but explicitly excludes antagonistic attacks; thus, we do not discuss any security concerns in this paper. A system that meets FuSa can still be hazardous due to insufficient environmental perception or inadequate robustness within the ODD. The SOTIF process provides guidance on how to systematically ensure the absence of unreasonable risk due to functional insufficiencies. The goal of the SOTIF process is to perform a risk acceptance evaluation and then reduce the probability of (1) known and (2) unknown scenarios causing hazardous behavior.

Figure 1 shows a simplified version of the SOTIF process. The process starts in the upper left with A) Requirements Specification. Based on the requirements, a B) Risk Analysis is done. For each identified risk, its potential Consequences are analyzed. If the risk of harm is reasonable, it is recorded as an acceptable risk. If not, the activity continues with an analysis of Causes, i.e., an identification and evaluation of triggering conditions. If the expected system response to triggering conditions is acceptable, the SOTIF process continues with V&V activities. If not, the remaining risk forces a C) Functional Modification with a corresponding requirements update.

The lower part of Fig. 1 shows the V&V activities in the SOTIF process, assuming that they are based on various levels of testing. For each risk, the development organization conducts D) Verification to ensure that the system satisfies the requirements for the known hazardous scenarios. If the F) Conclusion of Verification Tests is satisfactory, the V&V activities continue with validation. If not, the remaining risk requires a C) Functional Modification. In the E) Validation, the development organization explores the presence of unknown hazardous scenarios; if any are identified, they turn into known hazardous scenarios. The H) Conclusion of Validation Tests estimates the likelihood of encountering unknown scenarios that lead to hazardous behavior. If the residual risk is sufficiently small, it is recorded as an acceptable risk. If not, the remaining risk again necessitates a C) Functional Modification.
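The decision logic of the simplified SOTIF process described above can be summarized in a few lines of Python. This is only an illustrative sketch: the class, field, and function names are hypothetical, and each boolean stands for a judgment that the development organization makes based on evidence, not something that can be computed automatically.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    ACCEPTABLE_RISK = "recorded as acceptable risk"
    FUNCTIONAL_MODIFICATION = "functional modification with requirements update"


@dataclass
class RiskAssessment:
    """Hypothetical yes/no judgments for one identified risk."""
    harm_is_reasonable: bool        # risk analysis: consequences
    trigger_response_ok: bool       # risk analysis: causes (triggering conditions)
    verification_satisfactory: bool  # conclusion of verification tests
    residual_risk_small: bool       # conclusion of validation tests


def sotif_outcome(risk: RiskAssessment) -> Outcome:
    """Walk one risk through the simplified process, top to bottom."""
    if risk.harm_is_reasonable:
        return Outcome.ACCEPTABLE_RISK
    if not risk.trigger_response_ok:
        return Outcome.FUNCTIONAL_MODIFICATION
    if not risk.verification_satisfactory:
        return Outcome.FUNCTIONAL_MODIFICATION
    if not risk.residual_risk_small:
        return Outcome.FUNCTIONAL_MODIFICATION
    return Outcome.ACCEPTABLE_RISK
```

Since every functional modification leads to a requirements update, a real project would rerun this walk for the affected risks after each modification, which is what makes the process iterative.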

2.2 Safety assurance using the AMLAS process

The AMLAS methodology was developed by the Assuring Autonomy International Programme, University of York (Hawkins et al., 2021). AMLAS provides a process that results in 34 safety evidence artifacts. Moreover, AMLAS provides a set of recurring safety case patterns for ML components presented using the graphical format Goal Structuring Notation (GSN) (Assurance Case Working Group, 2021).

Fig. 2: An overview of the AMLAS process, adapted from Hawkins et al. (2021). Blue color denotes systems engineering, whereas black color relates specifically to the ML component. Numbers refer to the AMLAS stages, not sections in this paper.

Figure 2 shows an overview of the six stages of the AMLAS process. The upper part stresses that the development of an ML component and its corresponding safety case is done in the context of larger systems development, indicated by the blue arrow. Analogous to SOTIF, AMLAS is an iterative process as highlighted by the black arrow in the bottom.

Starting from the System Safety Requirements from the left, stage 1 is ML Safety Assurance Scoping. This stage operates on a systems engineering level and defines the scope of the safety assurance process for the ML component as well as the scope of its corresponding safety case — the interplay with the non-ML safety engineering is fundamental. The next five stages of AMLAS all focus on assurance activities for different constituents of ML development and operations. Each of these stages concludes with an assurance argument that when combined, and complemented by evidence through artifacts, compose the overall ML safety case.

- Stage 2: ML Safety Requirements Assurance. Requirements engineering is used to elicit, analyze, specify, and validate ML safety requirements in relation to the software architecture and the ODD.

- Stage 3: Data Management Assurance. Requirements engineering is first used to develop data requirements that match the ML safety requirements. Subsequently, data sets are generated (development data, internal test data, and verification data) accompanied by quality assurance activities.

- Stage 4: Model Learning Assurance. The ML model is trained using the development data. The fulfilment of the ML safety requirements is assessed using the internal test data.

- Stage 5: Model Verification Assurance. Different levels of testing or formal verification to assure that the ML model meets the ML safety requirements. Most importantly, the ML model shall be tested on verification data that has not influenced the training in any way.

- Stage 6: Model Deployment Assurance. Integrate the ML model in the overall system and verify that the system safety requirements are satisfied. Conduct integration testing in the specified ODD.
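Stages 3 to 5 depend on keeping the development data, the internal test data, and the verification data apart. The sketch below illustrates a simple disjointness check on sample identifiers. The function names, the split percentages, and the use of a random split from a single pool are assumptions made for illustration only; verification data may just as well be generated separately, and nothing here reflects how the SMIRK data sets were actually produced.

```python
import random
from typing import Dict, List, Sequence


def split_data(sample_ids: Sequence[str], dev_percent: int = 70,
               test_percent: int = 15, seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle sample ids and cut them into three non-overlapping sets."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # seeded for reproducibility
    n_dev = len(ids) * dev_percent // 100
    n_test = len(ids) * test_percent // 100
    return {
        "development": ids[:n_dev],
        "internal_test": ids[n_dev:n_dev + n_test],
        "verification": ids[n_dev + n_test:],
    }


def is_disjoint(splits: Dict[str, List[str]]) -> bool:
    """True if no sample id occurs more than once across all sets."""
    total = sum(len(ids) for ids in splits.values())
    unique = len(set().union(*splits.values()))
    return total == unique
```

A check of this kind only guards against accidental reuse of identical samples; it says nothing about near-duplicates or shared generation procedures, which need separate arguments in the safety case.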

The rightmost part of Fig. 2 shows the overall safety case for the system under development with the argumentation for the ML component as an essential part, i.e., the target of the AMLAS process.

2.3 Object detection and recognition using YOLO

YOLO is an established real-time object detection and recognition algorithm that was originally released by Redmon et al. (2016). The first version of YOLO introduced a novel object detection process that uses a single DNN to perform both prediction of bounding boxes around objects and classification at once. YOLO was heavily optimized for fast inference to support real-time applications. A fundamental concept of YOLO is that the algorithm considers each image only once, hence its name “You Only Look Once.” Thus, YOLO is referred to as a single-stage object detector.

Single-stage object detectors consist of three core parts: (1) the model backbone, (2) the model neck, and (3) the model head. The model backbone extracts important features from input images. The model neck generates so-called feature pyramids using PANet (Liu et al., 2018) that support generalization to different sizes and scales. The model head performs the detection task, i.e., it generates the final output vectors with bounding boxes and class probabilities.

In a nutshell, YOLO segments input images into smaller images. Each input image is split into a square grid of individual cells. Each cell predicts bounding boxes capturing potential objects and provides confidence scores for each box. Furthermore, YOLO does a class prediction for objects in the bounding boxes. Relying on the Intersection over Union (IoU) method for evaluating bounding boxes, YOLO eliminates redundant bounding boxes. The final output from YOLO consists of unique bounding boxes with class predictions. There are several versions of YOLO and each version provides different model architectures that balance the tradeoff between inference speed and accuracy differently — additional layers of neurons provide better accuracy at the expense of computation time.
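To make the role of IoU concrete, the following sketch computes IoU for two axis-aligned boxes and uses it in a greedy non-maximum suppression loop, which is a common way to eliminate redundant bounding boxes. It is a minimal pure-Python illustration and not the implementation used in any YOLO version; the box format, the function names, and the threshold of 0.5 are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def area(box: Box) -> float:
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    width = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    height = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = width * height
    union = area(a) + area(b) - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest confidence first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

In the example, the second box overlaps the first with an IoU of 81/119 (about 0.68), so it is suppressed as redundant, whereas the third box does not overlap at all and is kept.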

3 Related work

Many researchers argue that software and systems engineering practices must evolve as ML enters the picture. As proposed by Bosch et al. (2021), we refer to this emerging area as AI engineering. AI engineering increases the importance of several system qualities. Quality characteristics discussed in the area of “explainable AI” are particularly important to safety argumentation. In our paper, we adhere to the following definitions provided by Arrieta et al. (2020): “Given an audience, an explainable AI is one that produces details or reasons to make its functioning clear or easy to understand.” In the same vein, we refer to interpretability as “a passive characteristic referring to the level at which a given model makes sense for a human observer.” In our work on SMIRK, the human would be an assessor of the safety argumentation. In contrast, we regard explainability as an active characteristic of a model that clarifies its internal functions. The current ML models in SMIRK do not actively explain their outputs. For a review of DNN explainability techniques in automotive perception, e.g., post hoc saliency methods, counterfactual explanations, and model translations, we refer to a recent review by Zablocki et al. (2022).

In light of AI engineering, the rest of this section presents related work on safety argumentation and testing of automotive ML, respectively.

3.1 Safety argumentation for machine learning

Many publications address the issue of safety argumentation for systems with ML-based components and highlight corresponding safety gaps. A solid argumentation is required to enable safety certification, for example to demonstrate compliance with future standards such as SOTIF and ISO 8800 Road Vehicles — Safety and Artificial Intelligence. While there are several established safety patterns for non-AI systems (e.g., simplicity, substitution, sanity check, condition monitoring, comparison, diverse redundancy, replication redundancy, repair, degradation, voting, override, barrier, and heartbeat (Wu & Kelly, 2004; Preschern et al., 2015)), considerable research is now directed at understanding to what extent existing approaches apply to AI engineering. For example, Picardi et al. (2020) presented a set of patterns that later turned into AMLAS, i.e., the process that guides most of our work in this paper.

Mohseni et al. (2020) reviewed safety-related ML research and mapped ML techniques to safety engineering strategies. Their mapping identified three main categories toward safe ML: (1) inherently safe design; (2) enhanced robustness, a.k.a. “safety margin”; and (3) run-time error detection, a.k.a. “safe fail.” The authors further split each category into sub-categories. Based on their proposed categories, the safety argumentation we present for SMIRK used an inherently safe design as the starting point through a careful “design specification” and “implementation transparency.” Moreover, from the third category, SMIRK relies on “OOD error detection” as will be presented in Section 9.3.

Schwalbe and Schels (2020) presented a survey on specific considerations for safety argumentation targeting DNNs, organized into four development phases. First, they state that requirements engineering must focus on data representativity and entail scenario coverage, robustness, fault tolerance, and novel safety-related metrics. Second, development shall seek safety by design by, e.g., acknowledging uncertainty and enhancing robustness (in line with recommendations by Mohseni et al. 2020). Third, the authors discuss verification primarily through formal model checks using solvers. Fourth, they consider validation as the task of identifying missing requirements and test cases, for which they recommend data validation followed by both qualitative and quantitative analyses.

Tambon et al. (2022) conducted a systematic literature review on certification of safety-critical ML-based systems. Based on 217 primary studies, the authors identified five overall categories in the literature: (1) robustness, (2) uncertainty, (3) explainability, (4) verification, and (5) safe reinforcement learning. Moreover, the paper concludes by calling for deeper industry-academia collaborations related to certification. Our work on SMIRK responds to this call and explicitly discusses the identified categories on an operational level (except reinforcement learning since this type of ML does not apply to SMIRK). By conducting hands-on development of an ADAS and its corresponding safety case, we have identified numerous design decisions that have not been discussed in prior work. As the devil is in the detail, we recommend other researchers to transparently report from similar endeavors in the future.

Schwalbe et al. (2020) systematically established and broke down safety requirements to argue the sufficient absence of risk arising from SOTIF-style functional insufficiencies. The authors stress the importance of diverse evidence for a safety argument involving DNNs. Moreover, they provide a generic approach and template to thoroughly report DNN specifics within a safety argumentation structure. Finally, the authors show its applicability for an example use case based on pedestrian detection. In our work, 34 artifacts of different types constitute the safety evidence. Furthermore, pedestrian detection is a core feature of SMIRK.

Several research projects selected ML-based pedestrian detection systems to illustrate different aspects of safety argumentation. Wozniak et al. (2020) provided a safety case pattern for ML-based systems and showcased its applicability for pedestrian detection. The pattern is integrated within an overall encompassing approach for safety case generation. On a similar note, Willers et al. (2020) discussed safety concerns for DNN-based automotive perception, including technical root causes and mitigation strategies. The authors argue that it remains an open question how to conclude whether a specific concern is sufficiently covered by the safety case, and stress that safety cannot be determined analytically through ML accuracy metrics. In our work on SMIRK, we provide safety evidence that goes beyond the level of the ML model. Using AMLAS, we also claim that we demonstrate sufficient evidence for ML in SMIRK. Finally, related to pedestrian detection, we find that the work by Gauerhof et al. (2020) is the closest to this study, and the reader will find that we repeatedly refer to it in relation to SMIRK requirements in Section 6.

In the current paper, we present a holistic ML safety case building on previous work for a demonstrator system available under an OSS license. Furthermore, in contrast to a discussion restricted to pedestrian detection, we discuss an ADAS that subsequently commences emergency braking in a simulated environment. This addition responds to a call from Haq et al. (2021a) to go from offline to online testing, as many safety violations cannot be detected on the level of the ML model. While online testing is not novel, research papers on ML testing have largely considered standalone image data sets disconnected from concerns of running systems.

3.2 Testing of machine learning in automated vehicles

According to AMLAS, the two primary means to generate safety evidence for ML-based systems through V&V are testing and verification (Hawkins et al., 2021). As test-based verification is substantially more mature for DNNs such as those used in SMIRK, we restrict ourselves to testing. In the context of an ML-based system, this can be split into (1) data set testing, (2) model testing, (3) unit testing, (4) integration testing, (5) system testing, and (6) acceptance testing (Song et al., 2022). The related work focuses on model and system testing.

Model testing shall be performed on the verification data set that must not have been used in the training process. Depending on the test subject and the test level, the inputs for corresponding ADAS testing might be images such as in DeepRoad (Zhang et al., 2018) and DeepTest (Tian et al., 2018), or test scenario configurations as used by Ebadi et al. (2021).

Many research projects investigated efficient design of effective test cases for ADAS. Thus, several approaches to test case generation in simulated environments have been proposed. Pseudo-test oracle differential testing focuses on detecting divergence between programs’ behaviors when provided the same input data. For example, DeepXplore (Pei et al., 2017) changes the test input—like solving an optimization problem—to find the inputs triggering different behaviors between similar autonomous driving DNN models, while trying to increase the neuron coverage. Metamorphic testing works based on detecting violations of metamorphic relations to identify erroneous behaviors. For example, DeepTest (Tian et al., 2018) applies different transformations to a set of seed images and utilizes metamorphic relations to detect faulty behaviors of different Udacity DNN models for self-driving cars, while aiming for increasing neuron coverage as well. Gradient-based test input generation regards the test input generation as an optimization problem and generates a high number of failure-revealing or difference-inducing test inputs while maximizing the test adequacy criteria, e.g., neuron coverage. DeepXplore (Pei et al., 2017) utilizes gradient ascent to generate inputs provoking different behaviors among similar DNN models. Generative Adversarial Network (GAN)–based test input generation is intended to generate realistic test input, which cannot be easily distinguished from original input. DeepRoad (Zhang et al., 2018) utilizes a GAN-based metamorphic testing approach to generate test images for testing Udacity DNN models for autonomous driving.
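The idea behind metamorphic testing can be sketched without any ML framework. In the example below, the metamorphic relation states that a detector's decision should not change when the image brightness changes. The detector is a deliberately naive, hypothetical stand-in and not one of the models or tools cited above; all names and values are assumptions for illustration.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale pixel intensities in [0, 1]


def brighten(image: Image, delta: float) -> Image:
    """Transformation: add delta to every pixel, clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in image]


def toy_detector(image: Image) -> bool:
    """Hypothetical stand-in: reports an object if any pixel is
    clearly brighter than the image mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return any(p - mean > 0.3 for p in pixels)


def relation_violations(model: Callable[[Image], bool],
                        seeds: Sequence[Image],
                        transform: Callable[[Image], Image]) -> List[int]:
    """Indices of seed images whose prediction changes under transform."""
    return [i for i, seed in enumerate(seeds)
            if model(seed) != model(transform(seed))]


seeds = [
    [[0.1, 0.1], [0.1, 0.8]],  # one bright "object" pixel
    [[0.2, 0.2], [0.2, 0.2]],  # uniform background
]
mild = relation_violations(toy_detector, seeds, lambda img: brighten(img, 0.1))
extreme = relation_violations(toy_detector, seeds, lambda img: brighten(img, 0.9))
```

Here `mild` is empty, whereas `extreme` contains index 0: saturating the image removes the contrast the toy detector depends on, so the relation is violated without any labeled ground truth being needed.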

System testing entails ensuring that system safety standards are met following the integration of the model into the system. As for model testing, many research projects proposed techniques to generate test cases. A commonly proposed approach is to use search-based techniques to identify failure-revealing or collision-provoking scenarios. Many papers argue that simulated test scenarios are effective complements to field testing — which tends to be costly and sometimes dangerous.

Ben Abdessalem et al. (2016) used the multi-objective search algorithm NSGA-II along with surrogate models to find critical test scenarios with few simulations for the pedestrian detection system PeVi. PeVi constitutes the reference architecture for SMIRK, as described in Section 7, for which we obtained deep insights during a replication study (Borg et al., 2021a). In a subsequent study, Ben Abdessalem et al. (2018b) used MOSA, a many-objective optimization search algorithm developed by Panichella et al. (2015), along with objectives based on branch coverage and failure-based heuristics to detect undesired and failure-revealing feature interaction scenarios for integration testing in an automated vehicle. Furthermore, in a third related study, Ben Abdessalem et al. (2018a) combined NSGA-II and decision tree classifier models (which they referred to as a learnable evolutionary algorithm) to guide the search for critical test scenarios. Moreover, the proposed approach characterizes the failure-prone regions of the test input space. Inspired by previous work, we have used various search techniques, including NSGA-II, to generate test scenarios for pedestrian detection and emergency braking of the autonomous driving platform Baidu Apollo in the SVL simulator (Ebadi et al., 2021).

The test cases providing safety evidence for SMIRK correspond to systematic grid searches rather than any metaheuristic search. We think this is a necessary starting point for a safety argumentation. On the other hand, as argued in several related papers, we believe that search techniques could be a useful complement during testing of ML-based systems to pinpoint weaknesses and guide functional modifications — for example as part of the SOTIF process. In the SMIRK context, we plan to investigate this further as part of future work.
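A systematic grid search over scenario parameters amounts to enumerating the Cartesian product of the chosen parameter values. The sketch below shows this with the standard library; the parameter names and values are hypothetical and do not reproduce the SMIRK test design.

```python
from itertools import product
from typing import Dict, List, Sequence


def scenario_grid(parameter_values: Dict[str, Sequence]) -> List[Dict[str, object]]:
    """Enumerate every combination of the given parameter values."""
    names = list(parameter_values)
    value_lists = [parameter_values[name] for name in names]
    return [dict(zip(names, combination)) for combination in product(*value_lists)]


# Hypothetical parameters for a pedestrian-crossing scenario.
grid = scenario_grid({
    "car_speed_mps": [10, 20],
    "pedestrian_speed_mps": [0, 1, 2],
    "crossing_angle_deg": [45, 90],
})
print(len(grid))  # 12 scenarios: 2 * 3 * 2
```

Unlike a metaheuristic search, the grid is fixed before any test is run, so the resulting coverage of the parameter space is easy to state and inspect, at the cost of a scenario count that grows multiplicatively with each added parameter.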

4 Method: engineering research

The overall frame of our work is the engineering research standard as defined in the community endeavor ACM SIGSOFT Empirical Standards (Ralph et al., 2020). Engineering research is an appropriate standard when evaluating technological artifacts, e.g., methods, systems, and tools; in our case, SMIRK and its safety case. To support the validity of our research, we consulted the essential attributes of the corresponding checklist provided in the standard. We provide three clarifications for readers cross-checking our work against these attributes: (1) empirical evaluations of SMIRK are done using simulation in ESI Pro-SiVIC, (2) empirical evaluation of the safety case has been done through workshops and peer-review, and (3) we compare the SMIRK safety case against the state-of-the-art as we build on previous work.

Engineering research aims to develop general design knowledge in a specific field to help practitioners create solutions to their problems. As discussed by van Aken (2004), technological rules can be used to express design knowledge by capturing general knowledge about the mappings between problems and proposed solutions. Technological rules can normally be expressed as “To achieve <Effect> in <Context> apply <Intervention>.” Starting from a technological rule, researchers can extend the rule’s scope of validity by applying the intervention to new contexts or develop rules from the general to more specific contexts (Runeson et al., 2020), i.e., new studies can lead to both generalization and specialization.

Our work aims to specialize a technological rule for AMLAS. Starting from:

To develop a safety case for ML in autonomous systems apply AMLAS.

we seek general design knowledge for the more specific rule:

To develop a safety case for ML-based perception in ADAS apply AMLAS.

Figure 3 shows an overview of the 2-year R&D project (SMILE III) that resulted in the SMIRK MVP (Minimum Viable Product) and the safety case for its ML component. Note that collaborations in the preceding SMILE projects started already in 2016 (Borg et al., 2019), i.e., we report from research in the context of prolonged industry involvement. Starting from the left, we relied on A) Prototyping to get an initial understanding of the problem and solution domain (Käpyaho & Kauppinen, 2015). As our pre-understanding during prototyping grew, SOTIF and AMLAS were introduced as fundamental development processes and we established a first System Requirements Specification (SRS). The AMLAS process starts from the System Safety Requirements, which in our case come from following the SOTIF process.

Based on the SRS, we organized a B) Hazard Analysis and Risk Assessment (HARA) workshop (cf. ISO 26262) with all author affiliations represented. Then, the iterative C) SMIRK development phase commenced, encompassing software development, ML development, and a substantial amount of documentation. When meeting our definition of done, i.e., an MVP implementation and stable requirements specifications, we conducted D) Fagan Inspections as described in Section 4.1. After corresponding updates, we baselined the SRS and the Data Management Specification (DMS). Note that due to the COVID-19 pandemic, all group activities were conducted in virtual settings.

Subsequently, the development project turned to E) V&V and Functional Modifications as limitations were identified. In line with the SOTIF process (cf. Fig. 1), this phase of the project was also iterative. The various V&V activities generated a significant part of the evidence that supports our safety argumentation. The rightmost part of Fig. 3 depicts the safety case for the ML component in SMIRK, which is peer-reviewed as part of the submission process of this paper.

4.1 Safety evidence from Fagan inspections

We conducted two formal Fagan inspections (Fagan, 1976) during the SMILE III project with representatives from the organizations listed as co-authors of this paper. All reviewers are active in automotive R&D. The inspections targeted the System Requirements Specification and the Data Management Specification, respectively. The two formal inspections constitute essential activities in the AMLAS safety assurance and result in ML Safety Requirements Validation Results [J] and a Data Requirements Justification Report [M]. A Fagan inspection consists of the steps (1) Planning, (2) Overview, (3) Preparation, (4) Inspection meeting, (5) Rework, and (6) Follow-up.

1. Planning: The authors prepared the document and invited the required reviewers to an inspection meeting.

2. Overview: During one of the regular project meetings, the lead authors explained the fundamental structure of the document to the reviewers, and introduced an inspection checklist. Reviewers were assigned particular inspection perspectives based on their individual expertise. All information was repeated in an email, as not all reviewers were present at the meeting.

3. Preparation: All reviewers conducted an individual inspection of the document, noting any questions, issues, and required improvements.

4. Inspection meeting: Two weeks after the individual inspections were initiated, the lead authors and all reviewers met for a virtual meeting. The entire document was discussed, and the findings from the independent inspections were compared. All issues were compiled in inspection protocols.

5. Rework: The lead authors updated the SRS according to the inspection protocol. The independent inspection results were used as input to capture-recapture techniques to estimate the remaining amount of work (Petersson et al., 2004). All changes are traceable through individual GitHub commits.

6. Follow-up: Reviewers who previously found issues verified that those had been correctly resolved.
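The capture-recapture idea mentioned in the Rework step can be illustrated with the classic two-sample Lincoln-Petersen estimator: the overlap between the issues found by two independent reviewers indicates how many issues exist in total. This is a minimal sketch under the assumption of exactly two reviewers; it is not necessarily the estimator used in the project, and the function name and issue identifiers are hypothetical.

```python
from typing import Set


def estimate_remaining_issues(found_a: Set[str], found_b: Set[str]) -> float:
    """Lincoln-Petersen estimate of issues not yet found by either reviewer.

    Estimated total = |A| * |B| / |A and B|; remaining = total - |A or B|.
    """
    overlap = len(found_a & found_b)
    if overlap == 0:
        raise ValueError("No overlap between reviewers; estimate is undefined.")
    estimated_total = len(found_a) * len(found_b) / overlap
    return estimated_total - len(found_a | found_b)


reviewer_a = {"i1", "i2", "i3", "i4", "i5", "i6"}
reviewer_b = {"i4", "i5", "i6", "i7", "i8"}
print(estimate_remaining_issues(reviewer_a, reviewer_b))  # 2.0
```

In the example, six and five issues with an overlap of three give an estimated total of ten; eight distinct issues were found, so roughly two are estimated to remain. A small overlap therefore signals that substantial work may be left, while a large overlap suggests the document has been covered well.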

4.2 Presentation structure for the safety evidence

In the remainder of this paper, the AMLAS stages and the resulting artifacts act as the backbone in the presentation. Table 1 provides an overview of how those artifacts relate to the stages of AMLAS and where in this paper they are described for SMIRK. Throughout the paper, the notation [A]–[HH], in bold font, refers to the 34 individual artifacts prescribed by AMLAS. Finally, in the Appendix, the same 34 artifacts are used to present a complete safety case for the ML component in SMIRK.

5 SMIRK System Description [C]

SMIRK is a PAEB system that relies on ML. As an example of an ADAS, SMIRK is intended to act as one of several systems supporting the driver in the dynamic driving task, i.e., all the real-time operational and tactical functions required to operate a vehicle in on-road traffic. SMIRK, including the accompanying safety case, is developed with full transparency under an OSS license. We develop SMIRK as a demonstrator in a simulated environment provided by ESI Pro-SiVIC.

The SMIRK product goal is to assist the driver on country roads in rural areas by performing emergency braking in the case of an imminent collision with a pedestrian. The level of automation offered by SMIRK corresponds to SAE Level 1: Driver Assistance, i.e., “the driving mode-specific execution by a driver assistance system of either steering or acceleration/deceleration” (in our case, only braking). The first release of SMIRK is an MVP, i.e., an implementation limited to a highly restricted ODD, but future versions might include steering and thus comply with SAE Level 2.

Sections 5 and 6 present the core parts of the SMIRK SRS. The SRS, as well as this section, largely follows the structure proposed in IEEE 830-1998: IEEE Recommended Practice for Software Requirements Specifications (IEEE, 1998) and the template provided by Wiegers (2008). This section presents a SMIRK overview whereas Section 6 specifies the system requirements.

5.1 Product scope

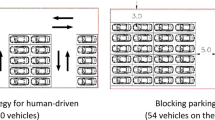

Figure 4 illustrates the overall function provided by SMIRK. SMIRK sends a brake signal when a collision with a pedestrian is imminent. Pedestrians are expected to cross the road at arbitrary angles, including perpendicular movement and moving toward or away from the car. Furthermore, a stationary pedestrian on the road must also trigger emergency braking, i.e., a scenario known to be difficult for some pedestrian detection systems. Finally, Fig. 4 stresses that SMIRK must be robust against false positives, also known as “braking for ghosts.” In this work, this refers to the ML-based component recognizing a pedestrian although another type of object is on collision course (e.g., an animal or a traffic cone) rather than radar noise. Trajectories are illustrated with blue arrows accompanied by a speed (v) and possibly an angle (\(\theta\)). In the superscript, c and p denote car and pedestrian, respectively, and 0 in the subscript indicates initial speed.

Note that the sole purpose of SMIRK is PAEB. The design of SMIRK assumes deployment in a vehicle with complementary ADAS, e.g., large animal detection, lane keeping assistance, and various types of collision avoidance. We also expect that sensors and actuators will be shared between ADAS. For the SMIRK MVP, however, we do not elaborate further on ADAS co-existence and we do not adhere to any particular higher-level automotive architecture. In the same vein, we do not assume a central perception system that fuses various types of sensor input. SMIRK uses a standalone ML model trained for pedestrian detection and recognition. In the SMIRK terminology, to mitigate confusion, the radar detects objects and the ML-based pedestrian recognition component identifies potential pedestrians in the camera input.

The SMIRK development necessitated quality trade-offs. The software product quality model defined in the ISO/IEC 25010 standard consists of eight characteristics. Furthermore, as recommended in requirements engineering research (Horkoff, 2019), we add the two novel quality characteristics interpretability and fairness. For each characteristic, we share its importance for SMIRK by assigning it a low [L], medium [M], or high [H] priority. The priorities influence architectural decisions in SMIRK and support elicitation of architecturally significant requirements (Chen et al., 2012).

-

Functional suitability. No matter how functionally restricted the SMIRK MVP is, stated and implied needs of a prototype ADAS must be met. [H]

-

Performance efficiency. SMIRK must be able to process input, do ML inference, and commence emergency braking in realistic driving scenarios. Identifying when performance efficiency reached excessive levels is vital in the requirements engineering process. [M]

-

Compatibility. SMIRK shall be compatible with other ADAS, but this is an ambition beyond the MVP development. [L]

-

Usability. SMIRK is an ADAS that operates in the background without a user interface for direct driver interaction. [L]

-

Reliability. A top priority in the SMIRK development that motivates the application of AMLAS. [H]

-

Security. Not included in the SOTIF scope, thus not prioritized. [L]

-

Maintainability. Evolvability from the SMIRK MVP is a key concern for future projects; thus, maintainability is important. [M]

-

Portability. We plan to port SMIRK to other simulators and physical demonstrators in the future. Initially, it is not a primary concern. [L]

-

Interpretability. While interpretability is vital for any cyber-physical system, SMIRK’s ML exacerbates the need further. [M]

-

Fairness. A vital quality that primarily impacts the data requirements specified in the Data Management Specification (Borg et al., 2021b). [H]

5.2 Product functions

SMIRK comprises implementations of four algorithms and uses external vehicle functions. In line with SOTIF, we organize all constituents into the categories sensors, algorithms, and actuators.

-

Sensors

-

Algorithms

-

Time-to-collision (TTC) calculation for objects on collision course.

-

Pedestrian detection and recognition based on the camera input where the radar detected an object (see Section 9.1).

-

Out-of-distribution (OOD) detection of never-seen-before input (part of the safety cage mechanism, see Section 9.3).

-

A braking module that commences emergency braking. In the SMIRK MVP, maximum braking power is always used.

-

-

Actuators

-

Brakes (provided by ESI Pro-SiVIC, not elaborated further).

-

Figure 5 illustrates detection of a pedestrian on a collision course, i.e., PAEB shall be commenced. The ML-based functionality of pedestrian detection and recognition, including the corresponding OOD detection, is embedded in the Pedestrian Recognition Component (defined in Section 7.1).

6 SMIRK system requirements

This section specifies the SMIRK system requirements, organized into system safety requirements and ML safety requirements. ML safety requirements are further refined into performance requirements and robustness requirements. The requirements are largely re-purposed from the system for pedestrian detection at crossings described by Gauerhof et al. (2020) to our PAEB ADAS, thus allowing for comparisons to previous work within the research community.

6.1 System safety requirements [A]

-

SYS-SAF-REQ1 SMIRK shall commence automatic emergency braking if and only if collision with a pedestrian on collision course is imminent.

Rationale: This is the main purpose of SMIRK. If possible, ego car will stop and avoid a collision. If a collision is inevitable, ego car will reduce speed to decrease the impact severity. Hazards introduced from false positives, i.e., braking for ghosts, are mitigated under ML Safety Requirements.

6.2 Safety requirements allocated to ML component [E]

Based on the HARA (see Section 4), two categories of hazards were identified. First, SMIRK might miss pedestrians and fail to commence emergency braking — we refer to this as a missed pedestrian. Second, SMIRK might commence emergency braking when it should not — we refer to this as an instance of ghost braking.

-

Missed pedestrian hazard: The severity of the hazard is very high (high risk of fatality). Controllability is high since the driver can brake ego car.

-

Ghost braking hazard: The severity of the hazard is high (can be fatal). Controllability is very low since the driver would have no chance to counteract the braking.

We concluded that the two hazards shall be mitigated by ML safety requirements.

6.3 Machine learning safety requirements [H]

This section refines SYS-SAF-REQ1 into two separate requirements corresponding to missed pedestrians and ghost braking, respectively.

-

SYS-ML-REQ1 The pedestrian recognition component shall identify pedestrians in all valid scenarios when the radar tracking component returns a \(\mathrm{TTC} < 4\,\mathrm{s}\) for the corresponding object.

-

SYS-ML-REQ2 The pedestrian recognition component shall reject false positive input that does not resemble the training data.

Rationale: SYS-SAF-REQ1 is interpreted in light of missed pedestrians and ghost braking and then broken down into the separate ML safety requirements SYS-ML-REQ1 and SYS-ML-REQ2. The former requirement deals with the “if” aspect of SYS-SAF-REQ1 whereas its “and only if” aspect is targeted by SYS-ML-REQ2. SMIRK follows the reference architecture from Ben Abdessalem et al. (2016) and SYS-ML-REQ1 uses the same TTC threshold (4 s, confirmed during a research stay in Luxembourg). Moreover, we have validated that this TTC threshold is valid for SMIRK based on calculating braking distances for different car speeds. SYS-ML-REQ2 motivates the primary contribution of the SMILE III project, i.e., an OOD detection mechanism that we refer to as a safety cage.
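The validation of the TTC threshold against braking distances can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the constant deceleration of 7 m/s² and the 0.1 s system latency are illustrative values that do not come from the SMIRK specification, and the pedestrian is assumed to be stationary so that the closing speed equals the ego speed.

```python
def stopping_distance_m(speed_kmh: float,
                        decel_ms2: float = 7.0,   # assumed deceleration
                        latency_s: float = 0.1) -> float:  # assumed latency
    """Distance travelled during the latency plus constant-deceleration braking."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * latency_s + v ** 2 / (2.0 * decel_ms2)


def distance_at_ttc_m(speed_kmh: float, ttc_s: float = 4.0) -> float:
    """Distance to a stationary object when the TTC threshold is reached."""
    return speed_kmh / 3.6 * ttc_s


for speed in (30, 50, 70):  # 70 km/h is the maximum speed in the ODD
    margin = distance_at_ttc_m(speed) - stopping_distance_m(speed)
    print(f"{speed} km/h: margin {margin:.1f} m")
```

Under these assumptions, the distance available when TTC drops below 4 s exceeds the stopping distance at every speed up to the ODD maximum.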

6.3.1 Performance requirements

The performance requirements are specified with a focus on quantitative targets for the pedestrian recognition component. All requirements below are restricted to pedestrians on or close to the road.

For objects detected by the radar tracking component with a TTC \(<\) 4s, the following requirements must be fulfilled:

-

SYS-PER-REQ1 The pedestrian recognition component shall identify pedestrians with an accuracy of 93% when they are within 80 m.

-

SYS-PER-REQ2 The false negative rate of the pedestrian recognition component shall not exceed 7% within 50 m.

-

SYS-PER-REQ3 The false positives per image of the pedestrian recognition component shall not exceed 0.1% within 80 m.

-

SYS-PER-REQ4 In 99% of sequences of 5 consecutive images from a 10 FPS video feed, no pedestrian within 80 m shall be missed in more than 20% of the frames.

-

SYS-PER-REQ5 For pedestrians within 80 m, the pedestrian recognition component shall determine the position of pedestrians within 50 cm of their actual position.

-

SYS-PER-REQ6 The pedestrian recognition component shall allow an inference speed of at least 10 FPS in the ESI Pro-SiVIC simulation.

Rationale: SMIRK adapts the performance requirements specified by Gauerhof et al. (2020) for the SMIRK ODD. SYS-PER-REQ1 reuses the accuracy threshold from Example 7 in AMLAS, which we empirically validated for SMIRK — initially in a feasibility analysis, subsequently during system testing. SYS-PER-REQ2 and SYS-PER-REQ3 are two additional requirements inspired by Henriksson et al. (2019). Note that SYS-PER-REQ3 relies on the metric false positive per image rather than false positive rate as true negatives do not exist for object detection (further explained in Section 10.1 and discussed in Section 12). SYS-PER-REQ6 means that any further improvements to reaction time have a negligible impact on the total brake distance.
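SYS-PER-REQ4 can be made concrete with a small check over per-frame detection outcomes. The sketch below is one possible reading of the requirement, not the evaluation code used for SMIRK: it assumes overlapping (sliding) windows of five frames, and the function names and input representation are hypothetical.

```python
def windows_satisfied(detected: list, window: int = 5,
                      max_miss_fraction: float = 0.2) -> float:
    """Fraction of sliding windows with at most max_miss_fraction missed frames.

    `detected` holds one boolean per frame: True if the pedestrian was found.
    """
    if len(detected) < window:
        raise ValueError("need at least one full window of frames")
    total = len(detected) - window + 1
    ok = 0
    for start in range(total):
        misses = sum(1 for hit in detected[start:start + window] if not hit)
        if misses / window <= max_miss_fraction:
            ok += 1
    return ok / total


def meets_sys_per_req4(detected: list, required_fraction: float = 0.99) -> bool:
    """True if at least 99% of the windows tolerate their misses."""
    return windows_satisfied(detected) >= required_fraction


# One isolated miss is tolerated; two consecutive misses are not.
one_miss = [True] * 4 + [False] + [True] * 5
two_misses = [True] * 4 + [False, False] + [True] * 4
print(meets_sys_per_req4(one_miss), meets_sys_per_req4(two_misses))
```

With five frames per window and a 20% limit, each window tolerates at most one missed frame.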

6.3.2 Robustness requirements

Robustness requirements are specified to ensure that SMIRK performs adequately despite expected variations in input. For pedestrians present within 50 m of Ego, captured in the field of view of the camera:

-

SYS-ROB-REQ1 The pedestrian recognition component shall perform as required in all situations Ego may encounter within the defined ODD.

-

SYS-ROB-REQ2 The pedestrian recognition component shall identify pedestrians irrespective of their upright pose with respect to the camera.

-

SYS-ROB-REQ3 The pedestrian recognition component shall identify pedestrians irrespective of their size with respect to the camera.

-

SYS-ROB-REQ4 The pedestrian recognition component shall identify pedestrians irrespective of their appearance with respect to the camera.

Rationale: SMIRK reuses robustness requirements for pedestrian detection from previous work. SYS-ROB-REQ1 is specified in Gauerhof et al. (2020). SYS-ROB-REQ2 is presented as Example 7 in AMLAS, which has been limited to upright poses, i.e., SMIRK is not designed to work for pedestrians sitting or lying on the road. SYS-ROB-REQ3 and SYS-ROB-REQ4 are additions identified during the Fagan inspection of the System Requirements Specification (see Section 4.1).

6.4 Operational design domain [B]

This section briefly describes the SMIRK ODD. As the complete ODD specification, based on the taxonomy developed by NHTSA (Thorn et al., 2018), is lengthy, we only present the fundamental aspects in this section. We refer interested readers to the GitHub repository. Note that we deliberately specified a minimalistic ODD, i.e., ideal conditions, to allow the development of a complete safety case for the SMIRK MVP.

-

Physical infrastructure: Asphalt single-lane roadways with clear lane markings and a gravel shoulder. Rural settings with open green fields.

-

Operational constraints: Maximum speed of 70 km/h and no surrounding traffic.

-

Objects: No objects except 0-1 pedestrians, either stationary or moving with a constant speed (\(<\) 15 km/h) and direction.

-

Environmental conditions: Clear daytime weather with overhead sun. Headlights turned off. No particulate matter leading to dirt on the sensors.
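The quantitative parts of the ODD summary above can be encoded as a simple membership check. The following is a hedged sketch: the `Scenario` class, its fields, and the `within_odd` function are hypothetical names introduced for illustration, and only the limits stated above (70 km/h, at most one pedestrian, pedestrian speed below 15 km/h) are taken from the text.

```python
from dataclasses import dataclass

MAX_EGO_SPEED_KMH = 70.0          # operational constraint from the ODD
MAX_PEDESTRIAN_SPEED_KMH = 15.0   # strict upper bound from the ODD


@dataclass(frozen=True)
class Scenario:
    ego_speed_kmh: float
    pedestrian_speeds_ms: tuple       # one entry per pedestrian, in m/s
    surrounding_traffic: bool = False
    clear_daytime: bool = True


def within_odd(scenario: Scenario) -> bool:
    """Check a scenario against the fundamental ODD limits."""
    if scenario.ego_speed_kmh > MAX_EGO_SPEED_KMH:
        return False
    if scenario.surrounding_traffic or not scenario.clear_daytime:
        return False
    if len(scenario.pedestrian_speeds_ms) > 1:  # the ODD allows 0-1 pedestrians
        return False
    return all(v * 3.6 < MAX_PEDESTRIAN_SPEED_KMH
               for v in scenario.pedestrian_speeds_ms)


# A pedestrian running at 4 m/s (14.4 km/h) is still inside the ODD.
print(within_odd(Scenario(70.0, (4.0,))))
```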

7 SMIRK system architecture

SMIRK is a pedestrian emergency braking ADAS that demonstrates safety-critical ML-based driving automation on SAE Level 1. The system uses input from two sensors (camera and radar/LiDAR) and implements a DNN trained for pedestrian detection and recognition. If the radar detects an imminent collision between the ego car and an object, SMIRK will evaluate if the object is a pedestrian. If SMIRK is confident that the object is a pedestrian, it will apply emergency braking. To minimize hazardous false positives, SMIRK implements a SMILE safety cage to reject input that is OOD. To ensure industrial relevance, SMIRK builds on the reference architecture from PeVi, an ADAS studied in previous work by Ben Abdessalem et al. (2016).

Explicitly defined architecture viewpoints support effective communication of certain aspects and layers of a system architecture. The different viewpoints of the identified stakeholders are covered by the established 4+1 view of architecture by Kruchten (1995). For SMIRK, we describe the logical view using a simple illustration with limited embedded semantics complemented by textual explanations. The process view is presented through a bulleted list, whereas the interested reader can find the remaining parts in the GitHub repository (RISE Research Institutes of Sweden, 2022). Scenarios are illustrated with figures and explanatory text.

7.1 Logical view

The SMIRK logical view is constituted by a description of the entities that realize the PAEB. Figure 6 provides a graphical description.

SMIRK interacts with three external resources, i.e., hardware sensors and actuators in ESI Pro-SiVIC: A) Mono Camera (752\(\times\)480 (WVGA), sensor dimension 3.13 cm \(\times\) 2.00 cm, focal length 3.73 cm, angle of view 45 degrees), B) Radar unit (providing object tracking and relative lateral and longitudinal speeds), and C) Ego Car (an Audi A4 for which we are mostly concerned with the brake system). SMIRK consists of the following constituents. We refer to E), F), G), I), and J) as the Pedestrian Recognition Component, i.e., the ML-based component for which this study presents a safety case.

-

Software components implemented in Python:

-

D) Radar Logic (calculating TTC based on relative speeds)

-

E) Perception Orchestrator (the overall perception logic)

-

F) Rule Engine (part of the safety cage, implementing heuristics such as pedestrians do not fly in the air)

-

G) Uncertainty Manager (main part of the safety cage, implementing logic to avoid false positives)

-

H) Brake Manager (calculating and sending brake signals to the ego car)

-

-

Trained machine learning models:

-

I) Pedestrian Detector (a YOLOv5 model trained using PyTorch)

-

J) Anomaly Detector (an autoencoder provided by Seldon)

-

7.2 Process view

The process view deals with the dynamic aspects of SMIRK including an overview of the run time behavior of the system. The overall SMIRK flow is as follows:

1. The Radar detects an object and sends the signature to the Radar Logic class.

2. The Radar Logic class calculates the TTC. If a collision between the ego car and the object is imminent, i.e., TTC is less than 4 s assuming constant motion vectors, the Perception Orchestrator is notified.

3. The Perception Orchestrator forwards the most recent image from the Camera to the Pedestrian Detector to evaluate if the detected object is a pedestrian.

4. The Pedestrian Detector performs a pedestrian detection in the image and returns the verdict (True/False) to the Perception Orchestrator.

5. If there appears to be a pedestrian on a collision course, the Perception Orchestrator forwards the image and the radar signature to the Uncertainty Manager in the safety cage.

6. The Uncertainty Manager sends the image to the Anomaly Detector and requests an analysis of whether the camera input is OOD or not.

7. The Anomaly Detector analyzes the image in the light of the training data and returns its verdict (True/False).

8. If there indeed appears to be an imminent collision with a pedestrian, the Uncertainty Manager forwards all available information to the Rule Engine for a sanity check.

9. The Rule Engine does a sanity check based on heuristics, e.g., in relation to laws of physics, and returns a verdict (True/False).

10. The Uncertainty Manager aggregates all information and, if the confidence is above a threshold, notifies the Brake Manager that collision with a pedestrian is imminent.

11. The Brake Manager calculates a safe brake level and sends the signal to Ego Car to commence PAEB.
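The flow above can be summarized as a chain of gates that must all pass before braking is commenced. The sketch below is illustrative only and is not the SMIRK implementation: the function name and the three callables are hypothetical stand-ins for the Pedestrian Detector, the Anomaly Detector, and the Rule Engine, and the confidence aggregation in step 10 is simplified to a conjunction of boolean verdicts.

```python
from typing import Any, Callable

TTC_THRESHOLD_S = 4.0  # threshold stated in SYS-ML-REQ1


def paeb_decision(ttc_s: float,
                  image: Any,
                  detect_pedestrian: Callable[[Any], bool],
                  is_ood: Callable[[Any], bool],
                  passes_rules: Callable[[Any, float], bool]) -> bool:
    """Return True if emergency braking shall be commenced."""
    # Step 2: the Radar Logic only escalates imminent collisions.
    if ttc_s >= TTC_THRESHOLD_S:
        return False
    # Steps 3-5: the Pedestrian Detector must report a pedestrian.
    if not detect_pedestrian(image):
        return False
    # Steps 6-7: the safety cage rejects out-of-distribution input.
    if is_ood(image):
        return False
    # Steps 8-9: heuristic sanity check by the Rule Engine.
    if not passes_rules(image, ttc_s):
        return False
    # Steps 10-11: all gates passed, so the Brake Manager is notified.
    return True


# Usage with trivial stand-in components.
brake = paeb_decision(2.5, "frame", lambda img: True,
                      lambda img: False, lambda img, ttc: True)
print(brake)
```

Ordering the gates this way means that the ML models are only invoked once the radar has flagged an imminent collision.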

8 SMIRK data management specification

This section describes the overall approach to data management for SMIRK and the explicit data requirements. SMIRK is a demonstrator for a simulated environment. Thus, as an alternative to longitudinal traffic observations and consideration of accident statistics, we have analyzed the SMIRK ODD through the ESI Pro-SiVIC “Object Catalog.” We conclude that the demographics of pedestrians in the ODD consist of the following: adult males and females in either casual, business casual, or business clothes, young boys wearing jeans and a sweatshirt, and male road workers. As other traffic is not within the ODD (e.g., cars, motorcycles, and bicycles), we consider the following basic shapes from the object catalog as examples of OOD objects (that still can appear in the ODD) for SMIRK to handle in operation: boxes, cones, pyramids, spheres, and cylinders.

8.1 Data Requirements [L]

This section specifies requirements on the data used to train and test the pedestrian recognition component. The data requirements are specified to comply with the ML Safety Requirements in the SRS. All data requirements are organized according to the assurance-related desiderata proposed by Ashmore et al. (2021), i.e., the key assurance requirements that ensure that the data set is relevant, complete, balanced, and accurate.

Table 2 shows a requirements traceability matrix between ML safety requirements and data requirements. The matrix presents an overview of how individual data requirements contribute to the satisfaction of ML Safety Requirements. Entries in individual cells denote that the ML safety requirement is addressed, at least partly, by the corresponding data requirement. SYS-PER-REQ4 and SYS-PER-REQ6 are not related to the data requirements.

8.1.1 Desideratum: relevant

This desideratum considers the intersection between the data set and the supported dynamic driving task in the ODD. The SMIRK training data will not cover operational environments that are outside of the ODD, e.g., images collected in heavy snowfall.

-

DAT-REL-REQ1 All data samples shall represent images of a road from the perspective of a vehicle.

-

DAT-REL-REQ2 The format of each data sample shall be representative of that which is captured using sensors deployed on the ego car.

-

DAT-REL-REQ3 Each data sample shall assume sensor positioning representative of the positioning used on the ego car.

-

DAT-REL-REQ4 All data samples shall represent images of a road environment that corresponds to the ODD.

-

DAT-REL-REQ5 All data samples containing pedestrians shall include one single pedestrian.

-

DAT-REL-REQ6 Pedestrians included in data samples shall be of a type that may appear in the ODD.

-

DAT-REL-REQ7 All data samples representing non-pedestrian OOD objects shall be of a type that may appear in the ODD.

Rationale: SMIRK adapts the requirements from the Relevant desiderata specified by Gauerhof et al. (2020) for the SMIRK ODD. DAT-REL-REQ5 is added based on the corresponding fundamental restriction of the ODD of the SMIRK MVP. DAT-REL-REQ7 restricts data samples providing OOD examples for testing.

8.1.2 Desideratum: complete

This desideratum considers the sampling strategy across the input domain and its subspaces. Suitable distributions and combinations of features are particularly important. Ashmore et al. (2021) refer to this as the external perspective on the data.

-

DAT-COM-REQ1 The data samples shall include the complete range of environmental factors within the scope of the ODD.

-

DAT-COM-REQ2 The data samples shall include images representing all types of pedestrians according to the demographics of the ODD.

-

DAT-COM-REQ3 The data samples shall include images representing pedestrian paces from standing still up to running at 15 km/h.

-

DAT-COM-REQ4 The data samples shall include images representing all angles at which an upright pedestrian can be captured by the given sensors on the ego car.

-

DAT-COM-REQ5 The data samples shall include images representing all distances to crossing pedestrians from 10 up to 100 m away from ego car.

-

DAT-COM-REQ6 The data samples shall include examples with different levels of occlusion giving partial views of pedestrians crossing the road.

-

DAT-COM-REQ7 The data samples shall include a range of examples reflecting the effects of identified system failure modes.

Rationale: SMIRK adapts the requirements from the Complete desiderata specified by Gauerhof et al. (2020) for the SMIRK ODD. We deliberately replaced the original adjective “sufficient” to make the data requirements more specific. Furthermore, we add DAT-COM-REQ3 to cover different poses related to the pace of the pedestrian and DAT-COM-REQ4 to cover different observation angles.

8.1.3 Desideratum: balanced

This desideratum considers the distribution of features in the data set, e.g., the balance between the number of samples in each class. Ashmore et al. (2021) refer to this as an internal perspective on the data.

-

DAT-BAL-REQ1 The data set shall have a representation of samples for each relevant class and feature that ensures AI fairness with respect to gender.

-

DAT-BAL-REQ2 The data set shall have a representation of samples for each relevant class and feature that ensures AI fairness with respect to age.

-

DAT-BAL-REQ3 The data set shall contain both positive and negative examples.

Rationale: SMIRK adapts the requirements from the Balanced desiderata specified by Gauerhof et al. (2020) for the SMIRK ODD. The concept of AI fairness is to be interpreted in the light of the Ethics guidelines for trustworthy AI published by the European Commission (High-Level Expert Group on Artificial Intelligence, 2019). Note that the number of ethical dimensions that can be explored through the ESI Pro-SiVIC object catalog is limited to gender (DAT-BAL-REQ1) and age (DAT-BAL-REQ2). Moreover, the object catalog contains only male road workers and all children are boys. Furthermore, DAT-BAL-REQ3 is primarily included to align with Gauerhof et al. (2020) and to preempt related questions by safety assessors. In practice, the need for negative examples when training object detection models is typically satisfied implicitly as the parts of the images that do not belong to the annotated class are true negatives (further explained in Section 10.1).

8.1.4 Desideratum: accurate

This desideratum considers how measurement issues can affect the way that samples reflect the intended ODD, e.g., sensor accuracy and labeling errors.

-

DAT-ACC-REQ1: All bounding boxes produced shall include the entirety of the pedestrian.

-

DAT-ACC-REQ2: All bounding boxes produced shall be no more than 10% larger in any dimension than the minimum sized box capable of including the entirety of the pedestrian.

-

DAT-ACC-REQ3: All pedestrians present in the data samples shall be correctly labeled.

Rationale: SMIRK reuses the requirements from the Accurate desiderata specified by Gauerhof et al. (2020).
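DAT-ACC-REQ1 and DAT-ACC-REQ2 lend themselves to an automated check against the tightest possible box. The sketch below is one possible interpretation rather than the project's validation code: it assumes boxes are given as `(x_min, y_min, x_max, y_max)` in pixels, reads "10% larger in any dimension" as a limit on width and height separately, and uses a hypothetical function name.

```python
def satisfies_dat_acc(annotated: tuple, tight: tuple,
                      tolerance: float = 0.10) -> bool:
    """Check an annotated box against the minimum box enclosing the pedestrian."""
    ax0, ay0, ax1, ay1 = annotated
    tx0, ty0, tx1, ty1 = tight
    # DAT-ACC-REQ1: the annotated box must include the entire pedestrian.
    contains = ax0 <= tx0 and ay0 <= ty0 and ax1 >= tx1 and ay1 >= ty1
    # DAT-ACC-REQ2: at most 10% larger than the tight box in each dimension.
    width_ok = (ax1 - ax0) <= (1.0 + tolerance) * (tx1 - tx0)
    height_ok = (ay1 - ay0) <= (1.0 + tolerance) * (ty1 - ty0)
    return contains and width_ok and height_ok


tight_box = (100, 50, 140, 150)  # 40 x 100 pixels
print(satisfies_dat_acc((99, 48, 141, 152), tight_box))   # slightly larger box
print(satisfies_dat_acc((101, 50, 140, 150), tight_box))  # clips the pedestrian
```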

8.2 Data generation log [Q]

This section describes how the data used for training the ML model in the pedestrian recognition component was generated. Based on the data requirements, we generate data using ESI Pro-SiVIC. The data are split into three sets in accordance with AMLAS.

-

Development data: Covering both training and validation data used by developers to create models during ML development.

-

Internal test data: Used by developers to test the model.

-

Verification data: Used in the independent test activity when the model is ready for release.

The SMIRK data collection campaign focuses on generation of annotated data in ESI Pro-SiVIC. All data generation is script-based and fully reproducible. Section 8.2.1 describes positive examples (PX), i.e., humans that shall be classified as pedestrians. Section 8.2.2 describes examples that represent OOD shapes (NX), i.e., objects that shall not initiate PAEB in case of an imminent collision. These images, referred to as OOD examples, shall either not be recognized as a pedestrian or be rejected by the safety cage (see Section 9.3).

In the data collection scripts, ego car is always stationary whereas pedestrians and objects move according to specific configurations. The parameters and values were selected to systematically cover the ODD. Finally, images are sampled from the camera at 10 frames per second with a resolution of \(752 \times 480\) pixels. For each image, we add a separate image file containing the ground truth pixel-level annotation of the position of the pedestrian. In total, we generate data representing \(8 \times 616 = 4928\) execution scenarios with positive examples and \(5 \times 20 = 100\) execution scenarios with OOD examples. In total, the data collection campaign generates roughly 185 GB of image data, annotations, and meta-data (including bounding boxes).

8.2.1 Positive examples

We generate positive examples from humans with eight visual appearances (see the upper part of Fig. 7) available in the ESI Pro-SiVIC object catalog:

- P1: Casual female pedestrian

- P2: Casual male pedestrian

- P3: Business casual female pedestrian

- P4: Business casual male pedestrian

- P5: Business female pedestrian

- P6: Business male pedestrian

- P7: Child

- P8: Male construction worker

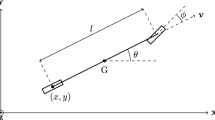

For each of the eight visual appearances, we specify the execution of 616 scenarios in ESI Pro-SiVIC organized into four groups (A–D). The pedestrians always follow rectilinear motion (a straight line) at a constant speed during scenario execution. Groups A and B describe pedestrians crossing the road, either from the left (group A) or from the right (group B). There are three variation points, i.e., (1) the speed of the pedestrian, (2) the angle at which the pedestrian crosses the road, and (3) the longitudinal distance between ego car and the pedestrian’s starting point. In all scenarios, the distance between the starting point of the pedestrian and the edge of the road is 5 m.

-

A. Crossing the road from left to right (4 \(\times\) 7 \(\times\) 10 = 280 scenarios)

-

Speed (m/s): [1, 2, 3, 4]

-

Angle (degree): [30, 50, 70, 90, 110, 130, 150]

-

Longitudinal distance (m): [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

-

-

B. Crossing the road from right to left (4 \(\times\) 7 \(\times\) 10 = 280 scenarios)

-

Speed (m/s): [1, 2, 3, 4]

-

Angle (degree): [30, 50, 70, 90, 110, 130, 150]

-

Longitudinal distance (m): [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

-

Groups C and D describe pedestrians moving parallel to the road, either toward ego car (group C) or away (group D). There are two variation points, i.e., (1) the speed of the pedestrian and (2) an offset from the road center. The pedestrian always moves 90 m, with a longitudinal distance between ego car and the pedestrian’s starting point of 100 m for group C (towards) and 10 m for group D (away).

-

C. Movement parallel to the road toward ego car (4 \(\times\) 7 = 28 scenarios)

-

Speed (m/s): [1, 2, 3, 4]

-

Lateral offset (m): [−3, −2, −1, 0, 1, 2, 3]

-

-

D. Movement parallel to the road away from ego car (4 \(\times\) 7 = 28 scenarios)

-

Speed (m/s): [1, 2, 3, 4]

-

Lateral offset (m): [−3, −2, −1, 0, 1, 2, 3]

-
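The scenario counts for groups A–D follow directly from the Cartesian products of the variation points listed above. The sketch below enumerates them to reproduce the figures of 616 scenarios per visual appearance and 4928 in total; the dictionary keys and function name are hypothetical and do not reflect the format of the SMIRK data generation scripts.

```python
from itertools import product

SPEEDS_MS = [1, 2, 3, 4]
ANGLES_DEG = [30, 50, 70, 90, 110, 130, 150]
DISTANCES_M = list(range(10, 101, 10))
LATERAL_OFFSETS_M = [-3, -2, -1, 0, 1, 2, 3]
APPEARANCES = [f"P{i}" for i in range(1, 9)]


def scenarios_for_one_appearance() -> list:
    """Enumerate the scenario configurations of groups A-D."""
    scenarios = []
    # Groups A and B: crossing from the left and from the right.
    for group in ("A", "B"):
        for speed, angle, distance in product(SPEEDS_MS, ANGLES_DEG, DISTANCES_M):
            scenarios.append({"group": group, "speed_ms": speed,
                              "angle_deg": angle, "distance_m": distance})
    # Groups C and D: moving parallel to the road, toward or away from ego car.
    for group, start_distance in (("C", 100), ("D", 10)):
        for speed, offset in product(SPEEDS_MS, LATERAL_OFFSETS_M):
            scenarios.append({"group": group, "speed_ms": speed,
                              "lateral_offset_m": offset,
                              "distance_m": start_distance})
    return scenarios


per_appearance = len(scenarios_for_one_appearance())
print(per_appearance, per_appearance * len(APPEARANCES))
```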

8.2.2 Out-of-distribution examples

We generate OOD examples using five basic shapes (see the lower part of Fig. 7) available in the ESI Pro-SiVIC object catalog:

- N1: Sphere

- N2: Cube

- N3: Cone

- N4: Pyramid

- N5: Cylinder

For each of the five basic shapes, we specify the execution of 20 scenarios in ESI Pro-SiVIC. The scenarios represent a basic shape crossing the road from the left or right at an angle perpendicular to the road. Since basic shapes are not animated, we fix the speed at 4 m/s. Moreover, as lateral offsets and different angles make little to no difference in front of the camera, we disregard these variation points. In all scenarios, the distance between the starting point of the basic shape and the edge of the road is 5 m. The only variation points are the crossing direction and the longitudinal distance between ego car and the objects’ starting point. As for pedestrians, the objects always follow a rectilinear motion at a constant speed during scenario execution.

-

Crossing direction: [left, right]

-

Longitudinal distance (m): [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

8.2.3 Preprocessing and data splitting

As the SMIRK data collection campaign relies on data generation in ESI Pro-SiVIC, the need for pre-processing differs from counterparts using naturalistic data. The script-based data generation ensures that the crossing pedestrians and objects appear at the right distance with specified conditions and with controlled levels of occlusion. All output images share the same characteristics; thus, no normalization is needed. SMIRK includes a script to generate bounding boxes for training the object detection model. ESI Pro-SiVIC generates ground truth image segmentation on a pixel-level. The script is used to convert the output to the appropriate input format for model training.
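The conversion from pixel-level ground truth to bounding boxes can be sketched as follows. This is not the SMIRK script: it assumes that the segmentation is available as a 2D grid in which non-zero values mark pedestrian pixels, and it emits the commonly used YOLO text label (class index followed by normalized center coordinates, width, and height). The function name is hypothetical.

```python
def mask_to_yolo_label(mask: list, class_id: int = 0):
    """Convert a binary mask (list of rows) to a YOLO-style label line.

    Returns None for background images without any pedestrian pixels.
    """
    height, width = len(mask), len(mask[0])
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(width) if any(row[c] for row in mask)]
    if not rows:
        return None
    # Pixel-edge coordinates of the tightest enclosing box.
    x_min, x_max = cols[0], cols[-1] + 1
    y_min, y_max = rows[0], rows[-1] + 1
    x_center = (x_min + x_max) / 2 / width
    y_center = (y_min + y_max) / 2 / height
    box_w = (x_max - x_min) / width
    box_h = (y_max - y_min) / height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"


# A toy 4 x 8 mask with a pedestrian covering rows 1-2 and columns 2-5.
toy_mask = [[0] * 8,
            [0, 0, 1, 1, 1, 1, 0, 0],
            [0, 0, 1, 1, 1, 1, 0, 0],
            [0] * 8]
print(mask_to_yolo_label(toy_mask))
```

Deriving the box from the mask extremes yields the minimum enclosing box, which is the reference used by the accuracy requirements in Section 8.1.4.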

The development data contains images with no pedestrians, in line with the description of “background images” in the YOLOv5 training tips provided by Ultralytics. Background images have no objects for the model to detect, and are added to reduce FPs. Ultralytics recommends 0–10% background images to help reduce FPs and reports that the fraction of background images in the well-known COCO data set is 1% (Lin et al., 2014). In our case, we add background images with cylinders (N5) to the development data. In total, the SMIRK development data contains 1.98% background images, i.e., 1.75% images without any objects and 0.23% with a cylinder.

The generated data are used in three sequestered (separated) data sets:

- Development data: P2, P3, P6, and N5
- Internal test data: P1, P4, N1, and N3
- Verification data: P5, P7, P8, N2, and N4

Note that we deliberately avoid mixing pedestrian models from the ESI Pro-SiVIC object catalog in the data sets due to the limited diversity in the images within the ODD.
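The sequestering of the three data sets can be checked mechanically. The sketch below, with helper names of our own choosing, verifies that no pedestrian model or basic shape identifier occurs in more than one data set.

```python
from itertools import combinations

# Identifiers per data set, as listed above.
SPLITS = {
    "development": {"P2", "P3", "P6", "N5"},
    "internal_test": {"P1", "P4", "N1", "N3"},
    "verification": {"P5", "P7", "P8", "N2", "N4"},
}


def overlapping_models(splits):
    """Return {(split_a, split_b): shared identifiers} for overlapping pairs."""
    return {
        (a, b): splits[a] & splits[b]
        for a, b in combinations(splits, 2)
        if splits[a] & splits[b]
    }


overlaps = overlapping_models(SPLITS)
```

An empty result indicates that the data sets are pairwise disjoint with respect to the object models.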

9 Machine learning component specification

The pedestrian recognition component consists of two ML-based constituents: a pedestrian detector and an anomaly detector (see Fig. 6).

9.1 Pedestrian detection using YOLOv5s

SMIRK implements its pedestrian recognition component using the third-party OSS framework YOLOv5 by Ultralytics. Based on Ultralytics’ publicly reported experiments on real-time characteristics of different YOLOv5 architectures (Footnote 7), we found that YOLOv5s struck the best balance between inference time and accuracy for SMIRK. After validating the feasibility in our experimental setup, we proceeded with this ML architecture selection.

The pedestrian recognition component uses the YOLOv5 architecture without any modifications. YOLOv5s has 191 layers and \(\approx\)7.5 million parameters. We use the default configurations proposed in YOLOv5s regarding activation, optimization, and cost functions. As activation functions, YOLOv5s uses Leaky ReLU in the hidden layers and the sigmoid function in the final layer. We use the default optimization function in YOLOv5s, i.e., stochastic gradient descent. The default cost function in YOLOv5s is binary cross-entropy with logits loss as provided in PyTorch, which we also use. We refer the interested reader to further details provided by Rajput (2020) and Ultralytics’ GitHub repository.
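For readers unfamiliar with binary cross-entropy with logits, the sketch below computes the loss for a single logit and target in plain Python, using the numerically stable formulation that avoids evaluating the sigmoid explicitly. It is an illustration only; PyTorch’s implementation additionally handles tensors, reduction over batches, and optional weighting.

```python
import math


def bce_with_logits(logit, target):
    """Binary cross-entropy for one raw logit and a target in [0, 1].

    Equivalent to -[t*log(sigmoid(x)) + (1-t)*log(1-sigmoid(x))], rewritten
    as max(x, 0) - x*t + log(1 + exp(-|x|)) to avoid overflow.
    """
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


loss_uncertain = bce_with_logits(0.0, 1.0)       # sigmoid(0) = 0.5
loss_confident_right = bce_with_logits(2.0, 1.0)
loss_confident_wrong = bce_with_logits(2.0, 0.0)
```

A confident and correct prediction yields a small loss, whereas a confident but incorrect prediction is penalized heavily.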

9.2 Model development log [U]

This section describes how the YOLOv5s model has been trained for the SMIRK MVP. We followed the general process presented by Ultralytics for training on custom data.

First, we manually prepared two SMIRK data sets to match the input format of YOLOv5. In this step, we also divided the development data [N] into two parts. The first part, containing approximately 80% of the development data, was used for training. The second part, consisting of the remaining data, was used for validation. Camera frames from the same video sequence were kept together in the same partition to avoid having almost identical images in the training and validation sets. Additionally, we kept the distribution of objects and scenario types consistent in both partitions. The internal test data [O] was used as a test set. We then prepared these three data sets (training, validation, and test) according to Ultralytics’ instructions and trained YOLOv5 for a single class, i.e., pedestrians. The data sets were already annotated using ESI Pro-SiVIC; thus, we only needed to export the labels to the YOLO format with one txt-file per image. Finally, we organized the individual files (images and labels) according to the YOLOv5 instructions. More specifically, each label file contains the following information:

- One row per object.
- Each row contains class, x_center, y_center, width, and height.
- Box coordinates are stored in normalized xywh format (from 0 to 1).
- Class numbers are zero-indexed, i.e., they start from 0.
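The label format above can be sketched as a small conversion from a pixel-coordinate bounding box to one row of a YOLO label file. The function name, the corner-based input format, and the image size in the example are assumptions for illustration and are not taken from the SMIRK export script.

```python
def to_yolo_row(class_id, box, img_w, img_h):
    """Format one label row: class x_center y_center width height.

    `box` is (x_min, y_min, x_max, y_max) in pixels (assumed input format).
    All four box values are normalized by the image size to the range 0..1.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Single class "pedestrian" has index 0; the image size is hypothetical.
row = to_yolo_row(0, (100, 50, 300, 250), img_w=1000, img_h=500)
```

An image with several objects would contain one such row per object, and a background image would have an empty label file or none at all.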

Second, we trained a YOLO model using the YOLOv5s architecture with the development data without any pre-trained weights. The model was trained for 10 epochs with a batch size of 8. The results from the validation subset (27,843 images in total) of the development data guided the selection of the confidence threshold for the ML model. We selected a threshold to meet SYS-PER-REQ3 with a safety margin for the development data, i.e., an FPPI of 0.1%. This yields a confidence threshold of 0.448 for the ML model to classify an object as a pedestrian. The final pedestrian detection model, i.e., the ML model [V], has a size of \(\approx\) 14 MB.
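The threshold selection can be sketched as a search for the lowest confidence threshold at which the number of false positives per image (FPPI) on the validation subset stays within the target. The code below is a simplified illustration under our own assumptions: the input is the list of confidence scores of all false positive detections, candidate thresholds lie on a fixed grid, and the example numbers are invented. It does not reproduce the procedure that resulted in 0.448.

```python
def fppi(fp_confidences, n_images, threshold):
    """False positives per image when keeping detections >= threshold."""
    return sum(c >= threshold for c in fp_confidences) / n_images


def select_threshold(fp_confidences, n_images, target_fppi, steps=1000):
    """Return the lowest grid threshold whose FPPI meets the target.

    Returns None if even a threshold of 1.0 does not meet the target.
    """
    for i in range(steps + 1):
        threshold = i / steps
        if fppi(fp_confidences, n_images, threshold) <= target_fppi:
            return threshold
    return None


# Invented example: five false positives observed on 1,000 validation images.
example_fps = [0.9, 0.6, 0.4, 0.3, 0.2]
threshold = select_threshold(example_fps, n_images=1000, target_fppi=0.002)
```

Choosing the lowest admissible threshold keeps as many true detections as possible; a safety margin can be obtained by searching against a stricter target than the requirement itself.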

9.3 OOD detection for the safety cage architecture

SMIRK detects OOD input images as part of its safety cage architecture. The OOD detection relies on the OSS third-party library Alibi Detect (Footnote 8) from Seldon. Alibi Detect is a Python library that provides several algorithms for outlier, adversarial, and drift detection for various types of data (Klaise et al., 2020). For SMIRK, we trained Alibi Detect’s autoencoder for outlier detection, with three convolutional and deconvolutional layers for the encoder and decoder respectively.

Figure 8 shows an overview of the DNN architecture of an autoencoder. An encoder and a decoder are trained jointly in two steps to minimize a reconstruction error. First, the encoder receives input data X and encodes it into a latent space of fewer dimensions. Second, the decoder tries to reconstruct the original data and produces output \(X'\). An and Cho (2015) proposed using the reconstruction error from an autoencoder to identify input that differs from the training data. Intuitively, if inlier data is processed by the autoencoder, the difference between X and \(X'\) will be smaller than for outlier data, i.e., OOD data will stand out. By carefully selecting a tolerance threshold, this approach can be used for OOD detection.

For SMIRK, we trained Alibi Detect’s autoencoder for OOD detection on the training data subset of the development data. The encoder part is designed with three convolutional layers followed by a dense layer, resulting in a bottleneck that compresses the input by 96.66%. The latent dimension is limited to 1,024 variables to limit the VRAM requirements on the GPU. The reconstruction error from the autoencoder is measured as the mean squared error between the input and the reconstructed instance. An input image is considered an outlier if this error surpasses a threshold \(\theta\). The threshold used for OOD detection in SMIRK is 0.004, roughly corresponding to the threshold that rejects a number of samples equal to the number of outliers in the validation set. As explained in Section 11.4, the OOD detection is only active for objects at least 10 m away from the ego car, as the results for close-up images are highly unreliable. Furthermore, as the constrained SMIRK ODD ensures that only a single object appears in each scenario, the safety cage architecture applies the policy “once an anomaly, always an anomaly”: objects that get rejected once will remain anomalous no matter what subsequent frames might contain.
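The decision logic described above can be sketched independently of the autoencoder itself. In the following illustration, the class and parameter names are ours, images are represented as flat lists of pixel intensities, and the reconstruction is supplied by the caller; the sketch does not use the Alibi Detect API and is not the SMIRK implementation.

```python
def mean_squared_error(x, x_reconstructed):
    """Mean squared error between an input and its reconstruction."""
    if len(x) != len(x_reconstructed):
        raise ValueError("input and reconstruction must have equal length")
    return sum((a - b) ** 2 for a, b in zip(x, x_reconstructed)) / len(x)


class SafetyCage:
    """Thresholded OOD check with a latching rejection policy."""

    def __init__(self, threshold=0.004, min_distance_m=10.0):
        self.threshold = threshold
        self.min_distance_m = min_distance_m
        self.rejected = False  # "once an anomaly, always an anomaly"

    def check(self, x, x_reconstructed, distance_m):
        """Return True if the object is, or previously was, rejected."""
        if self.rejected:
            return True
        # The OOD check is only active for objects at least 10 m away.
        if distance_m >= self.min_distance_m:
            if mean_squared_error(x, x_reconstructed) > self.threshold:
                self.rejected = True
        return self.rejected


cage = SafetyCage()
frame_results = [
    cage.check([0.5, 0.5], [0.5, 0.5], distance_m=50.0),  # well reconstructed
    cage.check([0.0, 1.0], [0.5, 0.5], distance_m=5.0),   # too close, skipped
    cage.check([0.0, 1.0], [0.5, 0.5], distance_m=30.0),  # error 0.25, reject
    cage.check([0.5, 0.5], [0.5, 0.5], distance_m=25.0),  # stays rejected
]
```

The latch is reasonable only because the constrained ODD guarantees a single object per scenario; with several objects, the rejection state would have to be tracked per object.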

10 SMIRK system test specification

This section describes the overall SMIRK test strategy. The ML-based pedestrian recognition component is tested on multiple levels. We focus on four aspects of the ML testing scope facet proposed by Song et al. (2022):

- Data set testing: This level refers to automatic checks that verify that specific properties of the data set are satisfied. As described in the ML Data Validation Results, the data validation, presented in Section 11.1, includes automated testing of the Balance desiderata. Since the SMIRK MVP relies on synthetic data, the distribution of pedestrians is already ensured by the scripts. However, other distributions such as distances to objects and bounding box sizes are important targets for data set testing.
- Model testing: Testing that the ML model provides the expected output. This is the primary focus of academic research on ML testing, and includes white-box, black-box, and data-box access levels during testing (Riccio et al., 2020). SMIRK model testing is done independently from model development and results in ML Verification Results [Z] as described in Section 11.2.2.
- Unit testing: Conventional unit testing on the level of Python classes. A test suite developed for the pytest framework is maintained by the developers and not elaborated further in this paper.
- System testing: System-level testing of SMIRK based on a set of Operational Scenarios [EE]. All test cases are designed for execution in ESI Pro-SiVIC. The system testing targets the requirements in the System Requirements Specification. This level of testing results in Integration Testing Results [FF] presented in Section 11.3.

10.1 ML model testing [AA]

This section corresponds to the Verification Log [AA] in AMLAS Step 5, i.e., Model Verification Assurance. Here we explicitly document the ML Model testing strategy, i.e., the range of tests undertaken and bounds and test parameters motivated by the SMIRK system requirements.

The testing of the ML model is based on assessing the object detection accuracy for the sequestered verification data set. A fundamental aspect of the verification argument is that this data set was never used in any way during the development of the ML model. To further ensure the independence of the ML verification, engineers from Infotiv, part of the SMILE III research consortium, led the corresponding verification and validation work package and were not in any way involved in the development of the ML model. As described in the Machine Learning Component Specification (see Section 9), the ML development was led by Semcon with support from RISE Research Institutes of Sweden.

The ML model test cases provide results for both 1) the entire verification data set and 2) eight slices of the data set that are deemed particularly important. The selection of slices was motivated by either an analysis of the available technology or ethical considerations, especially from the perspective of AI fairness (Borg et al., 2021b). Consequently, we measure the performance for the following slices of data. Identifiers in parentheses show direct connections to requirements.

- S1: The entire verification data set
- S2: Pedestrians close to the ego car (longitudinal distance \(<\) 50 m) (SYS-PER-REQ1, SYS-PER-REQ2)
- S3: Pedestrians far from the ego car (longitudinal distance \(\ge\) 50 m)
- S4: Running pedestrians (speed \(\ge\) 3 m/s) (SYS-ROB-REQ2)
- S5: Walking pedestrians (speed \(>\) 0 m/s but \(<\) 3 m/s) (SYS-ROB-REQ2)
- S6: Occluded pedestrians (entering or leaving the field of view, defined as bounding box in contact with any edge of image) (DAT-COM-REQ4)
- S7: Male pedestrians (DAT-COM-REQ2)
- S8: Female pedestrians (DAT-COM-REQ2)
- S9: Children (DAT-COM-REQ2)
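The slice definitions can be expressed as a membership function over per-image metadata. The sketch below assumes hypothetical metadata fields (`distance_m`, `speed_mps`, `touches_image_edge`, `category`) and assumes that walking means a speed above 0 m/s and below 3 m/s; neither the field names nor the data layout are taken from the SMIRK test scripts.

```python
CATEGORY_SLICES = {"male": "S7", "female": "S8", "child": "S9"}


def slices_for(sample):
    """Return the set of slice identifiers that a verification sample joins."""
    slices = {"S1"}  # every sample belongs to the entire data set
    slices.add("S2" if sample["distance_m"] < 50 else "S3")
    if sample["speed_mps"] >= 3:
        slices.add("S4")  # running
    elif sample["speed_mps"] > 0:
        slices.add("S5")  # walking (assumed: above 0 and below 3 m/s)
    if sample["touches_image_edge"]:
        slices.add("S6")  # occluded while entering or leaving the view
    category_slice = CATEGORY_SLICES.get(sample["category"])
    if category_slice is not None:
        slices.add(category_slice)
    return slices


example = {
    "distance_m": 30,
    "speed_mps": 3.5,
    "touches_image_edge": False,
    "category": "child",
}
example_slices = slices_for(example)
```

Since the slices overlap, a single image typically contributes to several of them, which is why results are reported per slice rather than summed across slices.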

Evaluating the output from an object detection model in computer vision is non-trivial. We rely on the established IoU metric to evaluate the accuracy of the YOLOv5 model. After discussions in the development team, supported by visualizations (Footnote 9), we set the target at 0.5. We recognize that there are alternative measures tailored for pedestrian detection, such as the log-average miss rate (Dollar et al., 2011), but we find such metrics to be unnecessarily complex for the restricted SMIRK ODD with a single pedestrian. There are also entire toolboxes that can be used to assess object detection (Bolya et al., 2020). Whereas more complex metrics could be used, we decide to use IoU in SMIRK’s safety argumentation. Relying on a simpler metric supports interpretability, which is vital to any safety case, especially if ML is involved (Jia et al., 2022).

Even using the standard IoU metric to assess how accurate SMIRK’s ML model is, the evaluation results are not necessarily intuitive to non-experts. Each image in the SMIRK data set either has a ground truth bounding box containing the pedestrian or no bounding box at all. Similarly, when performing inference on an image, the ML model will either predict a bounding box containing a potential pedestrian or no bounding box at all. IoU is the intersection over the union of the two bounding boxes. An IoU of 1 implies a perfect overlap. For the ML model in SMIRK, we evaluate pedestrian detection at IoU = 0.5, which for each image means:

- TP (true positive): IoU \(\ge\) 0.5
- FP (false positive): IoU \(<\) 0.5
- FN (false negative): There is a ground truth bounding box in the image, but no predicted bounding box.
- TN (true negative): All parts of the image with neither a ground truth nor a predicted bounding box. This output carries no meaning in our case.
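The per-image evaluation can be sketched as follows for the single-object case, with boxes given as (x_min, y_min, x_max, y_max). The function names are ours, and one case is an assumption rather than something stated above: a predicted box in an image without a ground truth box is counted as an FP, i.e., its IoU is treated as 0.

```python
def box_area(box):
    """Area of an axis-aligned box (x_min, y_min, x_max, y_max)."""
    return max(0, box[2] - box[0]) * max(0, box[3] - box[1])


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    intersection = box_area((
        max(box_a[0], box_b[0]),
        max(box_a[1], box_b[1]),
        min(box_a[2], box_b[2]),
        min(box_a[3], box_b[3]),
    ))
    union = box_area(box_a) + box_area(box_b) - intersection
    return intersection / union if union > 0 else 0.0


def classify(ground_truth, prediction, iou_threshold=0.5):
    """Classify one image with at most one ground truth and one prediction."""
    if prediction is None:
        # "TN" carries no meaning in the evaluation and is kept for completeness.
        return "FN" if ground_truth is not None else "TN"
    if ground_truth is None:
        return "FP"  # assumption: prediction without ground truth has IoU 0
    return "TP" if iou(ground_truth, prediction) >= iou_threshold else "FP"


gt = (0, 0, 10, 10)
outcomes = [
    classify(gt, (0, 0, 10, 5)),    # IoU = 50 / 100 = 0.5
    classify(gt, (5, 5, 15, 15)),   # IoU = 25 / 175
    classify(gt, None),
]
```

Note that a prediction with an IoU exactly at the threshold counts as a TP, in line with the \(\ge\) in the definition.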