Overview

Update: we have a new article that goes directly into a production-style setup of Kubernetes containers using a Network File System (NFS) Persistent Volume. Using K8s with local storage, as illustrated in this posting, should be reserved for edge cases, since such a deployment sacrifices the versatility and robustness of a K8s system. Network storage is strongly recommended over local mounts.

This walk-through is a practical demonstration of deploying an application (App) with Kubernetes. To turn this guide into production-grade practice, you would need to add a Secret (tls.crt, tls.key), external storage, and a production-grade load balancer. Explaining all the intricacies of the underlying technologies would require a much longer article, so we will dive straight into the code with occasional commentary.

Assumptions for lab environment:

a. There already exists a Kubernetes cluster.

b. The cluster consists of these nodes: linux01 & linux02

c. Each node is running Ubuntu 20.04 LTS with setup instructions given here.

d. A local mount named /data has been set up on the worker node, linux02. A how-to is written here.

e. These are the roles and IPs of nodes:

– linux01: controller, 192.168.100.91

– linux02: worker, 192.168.100.92

f. The controller node has Internet connectivity to download YAML configuration files and updates.

g. The ingress-nginx controller has been added to the cluster as detailed in the article link above (part 2: optional components).

h. These instructions include a ‘bare-metal’ load balancer installation, although a separate load balancer outside of the Kubernetes cluster is recommended for production.

i. A client computer with a web browser or a Bash shell

Here are the steps to host an App in this Kubernetes cluster lab:

1. Set up a Persistent Volume

2. Create a Persistent Volume Claim

3. Provision a Pod

4. Apply a Deployment Plan

5. Implement MetalLB Load Balancer

6. Create a Service Cluster

7. Generate an Ingress Route for the Service

8. Add the SSL Secret component

9. Miscellaneous Discovery & Troubleshooting

10. Cleanup

The general workflow of each step has two parts: (a) generate a shell script on the worker node, linux02, and (b) paste that script into the terminal of the master node, linux01. This sequence repeats at each subsequent step until completion.
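The generate-then-paste pattern relies on shell quoting: unescaped variables expand on linux02 when the generator runs, while anything written as `\$` survives as literal text and is only evaluated later on linux01 (Step 6 uses this for `\$clusterIP`). A minimal sketch of that mechanism; `emit_script` is a hypothetical name, and the jsonpath query stands in for the article's grep/awk pipeline:

```shell
#!/bin/sh
# Hypothetical generator illustrating the quoting used throughout this article.
# $pvName expands now (on the worker node); \$clusterIP and \$( ) are emitted
# literally and only run once the output is pasted on the master node.
emit_script() {
  pvName=$1
  echo "kubectl get pv $pvName
clusterIP=\$(kubectl get service test-service -o jsonpath='{.spec.clusterIP}')
echo \"clusterIP: \$clusterIP\""
}

emit_script linux02-local-pv
```

The first printed line already contains `linux02-local-pv`, while the second and third lines still carry `$(...)` and `$clusterIP` for the master node's shell to evaluate.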

View of a Finished Product

kim@linux01:~$ k get all -o wide
NAME                                   READY   STATUS    RESTARTS   AGE    IP               NODE      NOMINATED NODE   READINESS GATES
pod/test-deployment-7d4cc6df47-4z2mr   1/1     Running   0          146m   192.168.100.92   linux02   <none>           <none>

NAME                      TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE     SELECTOR
service/kubernetes        ClusterIP      10.96.0.1        <none>           443/TCP        6d6h    <none>
service/test-deployment   ClusterIP      10.96.244.250    <none>           80/TCP         2d23h   app=test
service/test-service      LoadBalancer   10.111.131.202   192.168.100.80   80:30000/TCP   120m    app=test

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES         SELECTOR
deployment.apps/test-deployment   1/1     1            1           146m   test         nginx:alpine   app=test

NAME                                         DESIRED   CURRENT   READY   AGE    CONTAINERS   IMAGES         SELECTOR
replicaset.apps/test-deployment-7d4cc6df47   1         1         1       146m   test         nginx:alpine   app=test,pod-template-hash=7d4cc6df47

Step 1: Set up a Persistent Volume

There are many storage classes available for integration with Kubernetes (https://kubernetes.io/docs/concepts/storage/storage-classes/). For the purposes of this demo, we're using a local mount on the node named linux02. Assuming that the mount has been set up as /data, we run this script on linux02 to generate a YAML file with instructions for creating a Persistent Volume in the cluster. Once the script has been printed to the shell console of linux02, copy and paste it into the terminal of the Kubernetes controller, linux01.
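One detail worth checking in the generator below: `df --output=avail` reports free space in 1 KiB blocks, whereas a bare integer in a PersistentVolume's `storage` field is read as bytes. A small sketch, with hypothetical helper names, that turns the `df` figure into a GiB count and into a suffixed Kubernetes quantity:

```shell
#!/bin/sh
# Hypothetical helpers: $1 is the free space in KiB, as reported by `df --output=avail`.
kib_to_gib() {
  # Integer division truncates, matching the article's GiB calculation
  echo $(( $1 / 1024 / 1024 ))
}

kib_to_quantity() {
  # The "Ki" suffix tells Kubernetes the number is in KiB rather than bytes
  echo "${1}Ki"
}

avail=243792504   # sample value from the linux02 volume in this lab
echo "storage: $(kib_to_quantity "$avail")  # about $(kib_to_gib "$avail") GiB"
```

Left unsuffixed, the same number describes roughly 232 MiB instead of roughly 232 GiB. The PV and PVC in this lab still bind, because both use the identical figure and local volumes do not enforce capacity.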

1a: generate script on worker node

# Set variables

mountPoint=/data

# Set permissions

# sudo chcon -Rt svirt_sandbox_file_t $mountPoint # SELinux: enable Kubernetes virtual volumes - only on systems with SELinux enabled

# setsebool -P virt_use_nfs 1 # SELinux: enable nfs mounts

sudo chmod 777 $mountSource

# This would occur if SELinux isn't running

# kim@linux02:~$ sudo chcon -Rt svirt_sandbox_file_t $mountPoint

# chcon: can't apply partial context to unlabeled file 'lost+found'

# chcon: can't apply partial context to unlabeled file '/data'

# create a dummy index.html

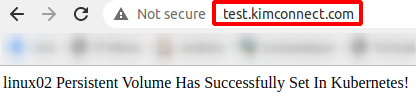

echo "linux02 Persistent Volume Has Successfully Set In Kubernetes!" >> $mountPoint/index.html

# Generate the yaml file creation script

mountSource=$(findmnt --mountpoint $mountPoint | tail -1 | awk '{print $2}')

availableSpace=$(df $mountPoint --output=avail | tail -1 | sed 's/[[:blank:]]//g')

availbleInGib=`expr $availableSpace / 1024 / 1024`

echo "Mount point $mountPoint has free disk space of $availableSpace KB or $availbleInGib GiB"

hostname=$(cat /proc/sys/kernel/hostname)

pvName=$hostname-local-pv

storageClassName=$hostname-local-volume

pvFileName=$hostname-persistentVolume.yaml

echo "Please paste the below contents on the master node"

echo "# Step 1: Setup a Persistent Volume

cat > $pvFileName << EOF

apiVersion: v1

kind: PersistentVolume

metadata:

name: $pvName

spec:

capacity:

# expression can be in the form of an integer or a human readable string (1M=1000KB vs 1Mi=1024KB)

storage: $availableSpace

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: $storageClassName

local:

path: $mountPoint

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- $hostname

EOF

kubectl create -f $pvFileName"

1b: Resulting Script to be executed on the Master Node

# Step 1: Set up a Persistent Volume
cat > linux02-persistentVolume.yaml << EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: linux02-local-pv
spec:
  capacity:
    # a bare integer means bytes (1M = 10^6 bytes, 1Mi = 2^20 bytes); df reports KiB, so 243792504Ki would declare the full free space
    storage: 243792504
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: linux02-local-volume
  local:
    path: /data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - linux02
EOF
kubectl create -f linux02-persistentVolume.yaml

1c: Expected Results

kim@linux01:~$ kubectl create -f linux02-persistentVolume.yaml

persistentvolume/linux02-local-pv created

Step 2: Create a Persistent Volume Claim

2a: generate script on worker node

# Generate the Persistent Volume Claim Script
# (reuses $hostname, $storageClassName and $availableSpace from Step 1a, so run it in the same shell session)
pvcClaimScriptFile=$hostname-persistentVolumeClaim.yaml
pvClaimName=$hostname-claim
echo "# Step 2: Create a Persistent Volume Claim
cat > $pvcClaimScriptFile << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: $pvClaimName
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: $storageClassName
  resources:
    requests:
      storage: $availableSpace
EOF
kubectl create -f $pvcClaimScriptFile
kubectl get pv"

2b: Resulting Script to be executed on the Master Node

# Step 2: Create a Persistent Volume Claim
cat > linux02-persistentVolumeClaim.yaml << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: linux02-claim
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: linux02-local-volume
  resources:
    requests:
      storage: 243792504
EOF
kubectl create -f linux02-persistentVolumeClaim.yaml
kubectl get pv

2c: Expected Results

kim@linux01:~$ kubectl create -f linux02-persistentVolumeClaim.yaml
persistentvolumeclaim/linux02-claim created
kim@linux01:~$ kubectl get pv
NAME               CAPACITY    ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS           REASON   AGE
linux02-local-pv   243792504   RWO            Retain           Bound    default/linux02-claim   linux02-local-volume            8m26s
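The claim should show `Bound` before pods are scheduled against it. Instead of re-running `kubectl get pv` by hand, a small polling helper can wait for that state. This is a hypothetical sketch; the usage comment reads the claim's `.status.phase` field through kubectl's jsonpath output.

```shell
#!/bin/sh
# Hypothetical polling helper: re-runs a command until its output equals the
# expected text or the attempts run out, pausing one second between tries.
# Usage: wait_for_output EXPECTED ATTEMPTS COMMAND [ARGS...]
wait_for_output() {
  expected=$1; attempts=$2; shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$("$@")" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example on linux01, once the claim from Step 2 exists:
# wait_for_output Bound 30 kubectl get pvc linux02-claim -o jsonpath='{.status.phase}'
```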

Step 3: Provision a Pod (to be deleted in favor of Deployment Plan later)

3a: generate script on worker node

# Optional: Generate the Test Pod Script
podName=testpod # must be in lower case
image=nginx:alpine
exposePort=80
echo "$hostname Persistent Volume Has Successfully Set In Kubernetes!" > $mountPoint/index.html
echo "# Step 3. Provision a Pod
cat > $hostname-$podName.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: $podName
  labels:
    name: $podName
spec:
  containers:
  - name: $podName
    image: $image
    ports:
    - containerPort: $exposePort
      name: $podName
    volumeMounts:
    - name: $pvName
      mountPath: /usr/share/nginx/html
  volumes:
  - name: $pvName
    persistentVolumeClaim:
      claimName: $pvClaimName
EOF
kubectl create -f $hostname-$podName.yaml
# Wait a few moments for pods to generate and check results
kubectl get pods -o wide
#
#
#
# Remove pods in preparation for deployment plan
kubectl delete pods $podName"

3b: Resulting Script to be executed on the Master Node

# Step 3. Provision a Pod
cat > linux02-testpod.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: testpod
  labels:
    name: testpod
spec:
  containers:
  - name: testpod
    image: nginx:alpine
    ports:
    - containerPort: 80
      name: testpod
    volumeMounts:
    - name: linux02-local-pv
      mountPath: /usr/share/nginx/html
  volumes:
  - name: linux02-local-pv
    persistentVolumeClaim:
      claimName: linux02-claim
EOF
kubectl create -f linux02-testpod.yaml
# Wait a few moments for pods to generate and check results
kubectl get pods -o wide
#
#
#
# Remove pods in preparation for deployment plan
kubectl delete pods testpod

3c: Expected Results

kim@linux01:~$ kubectl create -f linux02-testpod.yaml
pod/testpod created
kim@linux01:~$ kubectl get pods -o wide
NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE      NOMINATED NODE   READINESS GATES
test-deployment-7dc8569756-2tl46   1/1     Running   0          26h   172.16.90.130   linux02   <none>           <none>
test-deployment-7dc8569756-mhch7   1/1     Running   0          26h   172.16.90.131   linux02   <none>           <none>

Step 4. Apply a Deployment Plan

4a: generate script on worker node

# Generate the Test Deployment Script
appName=test # app name value must be in lower case; '-' (dashes) are ok
image=nginx:alpine
exposePort=80
mountPath=/usr/share/nginx/html
replicas=1
# create a sample file in the persistent volume to be served by the pods
echo "$hostname Persistent Volume Has Successfully Set In Kubernetes!" > $mountPoint/index.html
# output the script to be run on the Master node
deploymentFile=$hostname-$appName-deployment.yaml
echo "# Step 4. Apply a Deployment Plan
cat > $deploymentFile << EOF
kind: Deployment
apiVersion: apps/v1
metadata:
  name: $appName-deployment
spec:
  replicas: $replicas
  selector: # select pods to be managed by this deployment
    matchLabels:
      app: $appName # must match template.metadata.labels.app below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: $appName
    spec:
      containers:
      - name: $appName
        image: $image
        ports:
        - containerPort: $exposePort
          name: $appName
        volumeMounts:
        - name: $pvName
          mountPath: $mountPath
      volumes:
      - name: $pvName
        persistentVolumeClaim:
          claimName: $pvClaimName
EOF
kubectl create -f $deploymentFile
kubectl get deployments # list deployments
kubectl get pods -o wide # get pod info to include IPs
kubectl get rs # check replica sets"

4b: Resulting Script to be executed on the Master Node

# Step 4. Apply a Deployment Plan
cat > linux02-test-deployment.yaml << EOF
kind: Deployment
apiVersion: apps/v1
metadata:
  name: test-deployment
spec:
  replicas: 2
  selector: # select pods to be managed by this deployment
    matchLabels:
      app: test # must match template.metadata.labels.app below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: test
        image: nginx:alpine
        ports:
        - containerPort: 80
          name: test
        volumeMounts:
        - name: linux02-local-pv
          mountPath: /usr/share/nginx/html
      volumes:
      - name: linux02-local-pv
        persistentVolumeClaim:
          claimName: linux02-claim
EOF
kubectl create -f linux02-test-deployment.yaml
kubectl get deployments # list deployments
kubectl get pods -o wide # get pod info to include IPs
kubectl get rs # check replica sets

4c: Expected Results

kim@linux01:~$ kubectl create -f linux02-test-deployment.yaml
deployment.apps/test-deployment created
kim@linux01:~$ kubectl get pods -o wide
NAME                               READY   STATUS    RESTARTS   AGE     IP              NODE      NOMINATED NODE   READINESS GATES
test-deployment-7dc8569756-2tl46   1/1     Running   0          4m41s   172.16.90.130   linux02   <none>           <none>
test-deployment-7dc8569756-mhch7   1/1     Running   0          4m41s   172.16.90.131   linux02   <none>           <none>
kim@linux01:~$ kubectl get rs
NAME                         DESIRED   CURRENT   READY   AGE
test-deployment-7dc8569756   2         2         2       25h
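The `strategy` block in the Deployment bounds how many pods can exist while an update rolls out: up to `replicas` plus `maxSurge` pods may run, and at least `replicas` minus `maxUnavailable` must stay available. A quick arithmetic sketch; the helper name is hypothetical, and it assumes integer values (the API also accepts percentages, which this does not handle):

```shell
#!/bin/sh
# Hypothetical helper: prints the pod-count bounds of a RollingUpdate.
# Assumes integer maxSurge/maxUnavailable; percentage values are not handled.
rolling_bounds() {
  replicas=$1; maxSurge=$2; maxUnavailable=$3
  echo "max_pods=$(( replicas + maxSurge )) min_available=$(( replicas - maxUnavailable ))"
}

rolling_bounds 2 1 1   # values from linux02-test-deployment.yaml
```

With the generator's default `replicas=1`, the same settings give `min_available=0`, so the only pod may be down briefly during an update.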

Step 5. Implement MetalLB Load Balancer

Source: https://metallb.universe.tf/installation/

# Set strictARP & ipvs mode

kubectl get configmap kube-proxy -n kube-system -o yaml | \

sed -e "s/strictARP: false/strictARP: true/" | sed -e "s/mode: \"\"/mode: \"ipvs\"/" | \

kubectl apply -f - -n kube-system
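The pipeline above edits the kube-proxy ConfigMap in flight: the first `sed` flips `strictARP`, and the second sets the proxy `mode` to `ipvs`. Running the same expressions against a sample excerpt shows the effect without touching the cluster (the excerpt is illustrative, not a complete ConfigMap). On a live cluster, piping into `kubectl diff -f - -n kube-system` instead of `kubectl apply` previews the change.

```shell
#!/bin/sh
# Illustrative excerpt of a kube-proxy configuration; not a complete ConfigMap.
sample='    ipvs:
      strictARP: false
    kind: KubeProxyConfiguration
    mode: ""'

# Same substitutions as the kubectl pipeline above
patched=$(printf '%s\n' "$sample" | sed -e "s/strictARP: false/strictARP: true/" | sed -e "s/mode: \"\"/mode: \"ipvs\"/")
printf '%s\n' "$patched"
```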

# Apply the manifests provided by the author, David Anderson (https://www.dave.tf/) - an awesome dude

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/metallb.yaml

# On first install only

kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

# Sample output:

kim@linux01:~$ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

namespace/metallb-system created

kim@linux01:~$ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/metallb.yaml

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

daemonset.apps/speaker created

deployment.apps/controller created

kim@linux01:~$ kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

secret/memberlist created

# Customize for this system
# (ConfigMap-based configuration applies to MetalLB v0.9.x; v0.13 and later use IPAddressPool/L2Advertisement resources instead)
ipRange=192.168.100.80-192.168.100.90
fileName=metallb-config.yaml
cat > $fileName << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - $ipRange
EOF
kubectl apply -f $fileName

# Sample output

kim@linux01:~$ kubectl apply -f $fileName

configmap/config created
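MetalLB assumes the addresses in `ipRange` are reserved for it, so the pool should not include the node IPs (192.168.100.91 and .92 in this lab). A sketch, with hypothetical helper names, that converts dotted IPv4 addresses to integers in order to size the pool and test membership:

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking a MetalLB address range.
ip_to_int() {
  # Split a dotted-quad IPv4 address into its four octets and combine them
  oldIFS=$IFS; IFS=.
  set -- $1
  IFS=$oldIFS
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

range_size() {
  # $1 is "first-last", e.g. 192.168.100.80-192.168.100.90
  first=${1%-*}; last=${1#*-}
  echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}

in_range() {
  # Succeeds if address $2 falls inside range $1
  first=${1%-*}; last=${1#*-}
  ip=$(ip_to_int "$2")
  [ "$ip" -ge "$(ip_to_int "$first")" ] && [ "$ip" -le "$(ip_to_int "$last")" ]
}

ipRange=192.168.100.80-192.168.100.90
echo "pool size: $(range_size "$ipRange")"
for node in 192.168.100.91 192.168.100.92; do
  if in_range "$ipRange" "$node"; then
    echo "WARNING: node IP $node is inside the MetalLB pool"
  fi
done
```

For this lab the pool holds 11 addresses and neither node IP falls inside it, so no warning is printed.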

Step 6. Create a Service Cluster

6a: generate script on worker node

A Kubernetes Service is a virtual entity that routes and load-balances incoming requests to the intended pods. It forwards traffic to whichever hosts run the selected pods, hiding a significant amount of networking complexity. There are four Service types, plus a headless variant:

1. ClusterIP – the default type; it load-balances traffic across live pods behind a single internal virtual IP

2. NodePort – a ClusterIP service that additionally listens on a static port (30000-32767 by default) on every node

3. LoadBalancer – builds on NodePort and typically depends on an external load balancer (AWS, Azure, Google, etc.). Our lab, however, already includes the MetalLB component detailed in Step 5, so we use this type to allow ingress into the cluster through a virtual external IP.

4. ExternalName – maps the Service to an external DNS name by returning a CNAME record; no proxying is involved

5. Headless – a ClusterIP service with clusterIP set to None; DNS returns the pod IPs directly, which suits purely internal services whose clients need to reach individual pods

In this example, we're preparing a Service of type LoadBalancer. Because a LoadBalancer service is also a NodePort and a ClusterIP service, it can be reached through the MetalLB external IP, through port 30000 on any node, and as the backend of the Ingress detailed in the next section (an Ingress backend only requires a ClusterIP).
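A LoadBalancer Service layers three ports: `port` on the cluster IP (and the MetalLB external IP), `targetPort` on the pod, and `nodePort` on every node. An explicit `nodePort` must fall inside the API server's node-port range, which defaults to 30000-32767 (clusters started with a different `--service-node-port-range` will differ). A hypothetical pre-flight check for the generator variables, assuming the default range:

```shell
#!/bin/sh
# Hypothetical pre-flight check, assuming the default node-port range 30000-32767.
check_node_port() {
  if [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]; then
    echo "nodePort $1 OK"
  else
    echo "nodePort $1 is outside 30000-32767" >&2
    return 1
  fi
}

check_node_port 30000   # the value used for test-service
```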

# Generate script for a Service of type LoadBalancer
appName=test
protocol=TCP
publicPort=80
appPort=80
nodePort=30000
serviceName=$appName-service
serviceFile=$serviceName.yaml
echo "# Step 6. Create a Service Cluster
cat > $serviceFile << EOF
apiVersion: v1
kind: Service
metadata:
  name: $serviceName
spec:
  type: LoadBalancer # Other options: ClusterIP, NodePort, ExternalName
  # LoadBalancer builds on NodePort, which is a ClusterIP service with an additional capability:
  # it is reachable on every node of the cluster at the assigned node port
  selector:
    app: $appName # This name must match the template.metadata.labels.app value
  ports:
  - protocol: $protocol
    port: $publicPort
    targetPort: $appPort
    nodePort: $nodePort # optional field: by default, the Kubernetes control plane allocates a port from the 30000-32767 range
EOF
kubectl apply -f $serviceFile
clusterIP=\$(kubectl get service $serviceName --output yaml|grep 'clusterIP: '|awk '{print \$2}')
echo \"clusterIP: \$clusterIP\"
curl \$clusterIP
kubectl get service $serviceName"

6b: Resulting Script to be executed on the Master Node

# Step 6. Create a Service Cluster
cat > test-service.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: test-service
spec:
  type: LoadBalancer # Other options: ClusterIP, NodePort, ExternalName
  # LoadBalancer builds on NodePort, which is a ClusterIP service with an additional capability:
  # it is reachable on every node of the cluster at the assigned node port
  selector:
    app: test # This name must match the template.metadata.labels.app value
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000 # optional field: by default, the Kubernetes control plane allocates a port from the 30000-32767 range
EOF
kubectl apply -f test-service.yaml
clusterIP=$(kubectl get service test-service --output yaml|grep 'clusterIP: '|awk '{print $2}')
echo "clusterIP: $clusterIP"
curl $clusterIP
kubectl get service test-service

6c: Expected Results

kim@linux01:~$ kubectl apply -f test-service.yaml
service/test-service created
kim@linux01:~$ clusterIP=$(kubectl get service test-service --output yaml|grep 'clusterIP: '|awk '{print $2}')
kim@linux01:~$ echo "clusterIP: $clusterIP"
clusterIP: 10.108.54.11
kim@linux01:~$ curl $clusterIP
linux02 Persistent Volume Has Successfully Set In Kubernetes!
kim@linux01:~$ kubectl get service test-service
NAME           TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE
test-service   LoadBalancer   10.111.131.202   192.168.100.80   80:30000/TCP   135m

6d: Check for ingress from an external client machine

kim@kim-linux:~$ curl -D- -H 'Host: test.kimconnect.com'
HTTP/1.1 200 OK
Server: nginx/1.19.6
Date: Wed, 27 Jan 2021 04:48:29 GMT
Content-Type: text/html
Content-Length: 62
Last-Modified: Sun, 24 Jan 2021 03:40:16 GMT
Connection: keep-alive
ETag: "600cec20-3e"
Accept-Ranges: bytes

linux02 Persistent Volume Has Successfully Set In Kubernetes!

Step 7. Generate an Ingress Route for the Service (optional)

7a: generate script on worker node

# Generate the Ingress yaml Script
virtualHost=test.kimconnect.com
serviceName=test-service
nodePort=30001
ingressName=virtual-host-ingress
ingressFile=$ingressName.yaml
echo "# Step 7. Generate an Ingress Route for the Service
cat > $ingressFile << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $ingressName
  annotations:
    kubernetes.io/ingress.class: nginx # use the shared ingress-nginx
spec:
  rules:
  - host: $virtualHost
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: $serviceName
            port:
              number: 80
EOF
kubectl apply -f $ingressFile
kubectl describe ingress $ingressName
# run this command on client PC with hosts record of $virtualHost manually set to the IP of any node of this Kubernetes cluster
curl $virtualHost:$nodePort
# Remove the test ingress when finished
# kubectl delete ingress $ingressName"

7b: Resulting Script to be executed on the Master Node

# Step 7. Generate an Ingress Route for the Service
# (extensions/v1beta1 Ingress, with serviceName/servicePort backends, was removed in Kubernetes 1.22)
cat > virtual-host-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: virtual-host-ingress
  annotations:
    kubernetes.io/ingress.class: nginx # use the shared ingress-nginx
spec:
  rules:
  - host: test.kimconnect.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: test-service
            port:
              number: 80
EOF
kubectl apply -f virtual-host-ingress.yaml
kubectl describe ingress virtual-host-ingress
# run this command on client PC with hosts record of test.kimconnect.com manually set to the IP of any node of this Kubernetes cluster
curl test.kimconnect.com:30001
# Remove the test ingress when finished
# kubectl delete ingress virtual-host-ingress

7c: Expected Results

kim@linux01:~$ kubectl apply -f virtual-host-ingress.yaml

ingress.networking.k8s.io/virtual-host-ingress created

kim@linux01:~$ cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 linux01

127.0.1.2 test.kimconnect.com

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

kim@linux01:~$ curl test.kimconnect.com:30001

linux02 Persistent Volume Has Successfully Set In Kubernetes!

kim@linux01:~$ k delete ingress test-ingress

ingress.networking.k8s.io "test-ingress" deleted
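The client test relies on a hosts-file record that points `test.kimconnect.com` at a cluster node. A hypothetical helper that adds such a record only once; the node IP in the usage comment is linux02 from the lab assumptions, and writing the real /etc/hosts requires root:

```shell
#!/bin/sh
# Hypothetical helper: map a virtual host name to a cluster node IP in a hosts file.
# Idempotent: if the name already appears on a non-comment line, nothing is written.
add_hosts_entry() {
  hostsFile=$1; ip=$2; name=$3
  # Look for the name as a whole field (after the IP) on any non-comment line
  if awk -v n="$name" '/^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$hostsFile"; then
    echo "$name already present in $hostsFile"
  else
    printf '%s\t%s\n' "$ip" "$name" >> "$hostsFile"
    echo "added $ip $name to $hostsFile"
  fi
}

# Example on the client PC (as root):
# add_hosts_entry /etc/hosts 192.168.100.92 test.kimconnect.com
```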

Step 8. Add SSL Secret Component

apiVersion: v1
kind: Secret
metadata:
  name: test-secret-tls
  namespace: default # must be in the same namespace as the test-app
data:
  tls.crt: CERTCONTENTHERE # base64-encoded PEM certificate
  tls.key: KEYVALUEHERE # base64-encoded PEM private key
type: kubernetes.io/tls
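The `data` values of a Secret must be base64-encoded, so PEM files cannot be pasted in as-is. A sketch that wraps an existing certificate and key into the manifest above; the function name and file paths are hypothetical, and `base64 -w0` is the GNU coreutils form found on Ubuntu. `kubectl create secret tls test-secret-tls --cert=tls.crt --key=tls.key` builds the same object directly.

```shell
#!/bin/sh
# Hypothetical generator for a kubernetes.io/tls Secret manifest.
# A self-signed pair for lab use can be produced with, for example:
#   openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
#     -keyout tls.key -out tls.crt -subj "/CN=test.kimconnect.com"
tls_secret_yaml() {
  name=$1; namespace=$2; certFile=$3; keyFile=$4
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $name
  namespace: $namespace
type: kubernetes.io/tls
data:
  tls.crt: $(base64 -w0 < "$certFile")
  tls.key: $(base64 -w0 < "$keyFile")
EOF
}

# Usage: tls_secret_yaml test-secret-tls default tls.crt tls.key > test-secret-tls.yaml
```

To actually serve HTTPS, the Ingress from Step 7 would also need a `tls:` entry under `spec` listing `test.kimconnect.com` in `hosts` and `secretName: test-secret-tls`.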

Step 9. Miscellaneous Discovery and Troubleshooting

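# How to find the IP address of a pod and assign it to a variable
podName=test-deployment-7dc8569756-2tl46
podIp=$(kubectl get pod $podName -o jsonpath='{.status.podIP}')
# Column parsing also works, but the column shifts when newer kubectl versions print RESTARTS as e.g. "1 (5m ago)":
# podIp=$(kubectl get pod $podName -o wide | tail -1 | awk '{print $6}')
echo "Pod IP: $podIp"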

kim@linux01:~$ k get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d2h

kim@linux01:~$ kubectl describe service test-service

Name: test-service

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=test

Type: ClusterIP

IP Families: <none>

IP: 10.108.54.11

IPs: 10.108.54.11

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 172.16.90.130:80,172.16.90.131:80

Session Affinity: None

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Type 43m service-controller NodePort -> LoadBalancer

Normal Type 38m service-controller LoadBalancer -> NodePort

# Clear any proxy settings in the current shell so that requests to the cluster IP are not sent through a proxy

unset {http,ftp,socks,https}_proxy

env | grep -i proxy

curl $clusterIP

# How to expose a deployment

# kubectl expose deployment test-deployment --type=ClusterIP # if ClusterIP is specified in the service plan

kubectl expose deployment test-deployment --port=80 --target-port=80

kubectl expose deployment/test-deployment --type="NodePort" --port 80

kim@linux01:~$ kubectl expose deployment test-deployment --type=ClusterIP

Error from server (AlreadyExists): services "test-deployment" already exists

kim@linux01:~$ kubectl expose deployment/test-deployment --type="NodePort" --port 80

Error from server (AlreadyExists): services "test-deployment" already exists

# Create a namespace - not included in the examples of this article (namespace names must be lowercase)

# kubectl create namespace persistentvolume1

Step 10. Cleanup

# Cleanup: must be in the correct sequence!

kubectl delete ingress virtual-host-ingress

kubectl delete services test-service

kubectl delete deployment test-deployment

kubectl delete persistentvolumeclaims linux02-claim

kubectl delete pv linux02-local-pv
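Because the steps are pasted by hand, a rerun of the cleanup may hit resources that are already gone. A sketch, in keeping with the generate-then-paste workflow, that prints the same sequence with kubectl's `--ignore-not-found` flag (the function name is hypothetical). With `persistentVolumeReclaimPolicy: Retain`, deleting the PV leaves the files under /data on linux02 intact.

```shell
#!/bin/sh
# Hypothetical generator: prints the Step 10 cleanup commands, dependents first,
# since a PVC still mounted by a pod stays in Terminating until that pod is gone.
emit_cleanup() {
  for resource in ingress/virtual-host-ingress service/test-service \
                  deployment/test-deployment pvc/linux02-claim pv/linux02-local-pv; do
    echo "kubectl delete $resource --ignore-not-found"
  done
}

emit_cleanup
```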