What just happened? Nvidia has reportedly requested a sample of the next-generation High Bandwidth Memory (HBM3E DRAM) from South Korean chipmaker SK Hynix, aiming to evaluate its impact on GPU performance. If everything proceeds as anticipated, the company plans to use the new technology in its future GPUs for AI and HPC.

The DigiTimes report comes almost a month after SK Hynix announced that its 5th-generation 10nm process, 1bnm, had completed validation and was ready to power next-gen DDR5 and HBM3E products. The company also said that Intel has certified DDR5 products built on the 1bnm node for its Xeon Scalable platform. Back in January, SK Hynix received Intel certification for DDR5 server DRAM built on its 4th-gen 10nm (1anm) process.

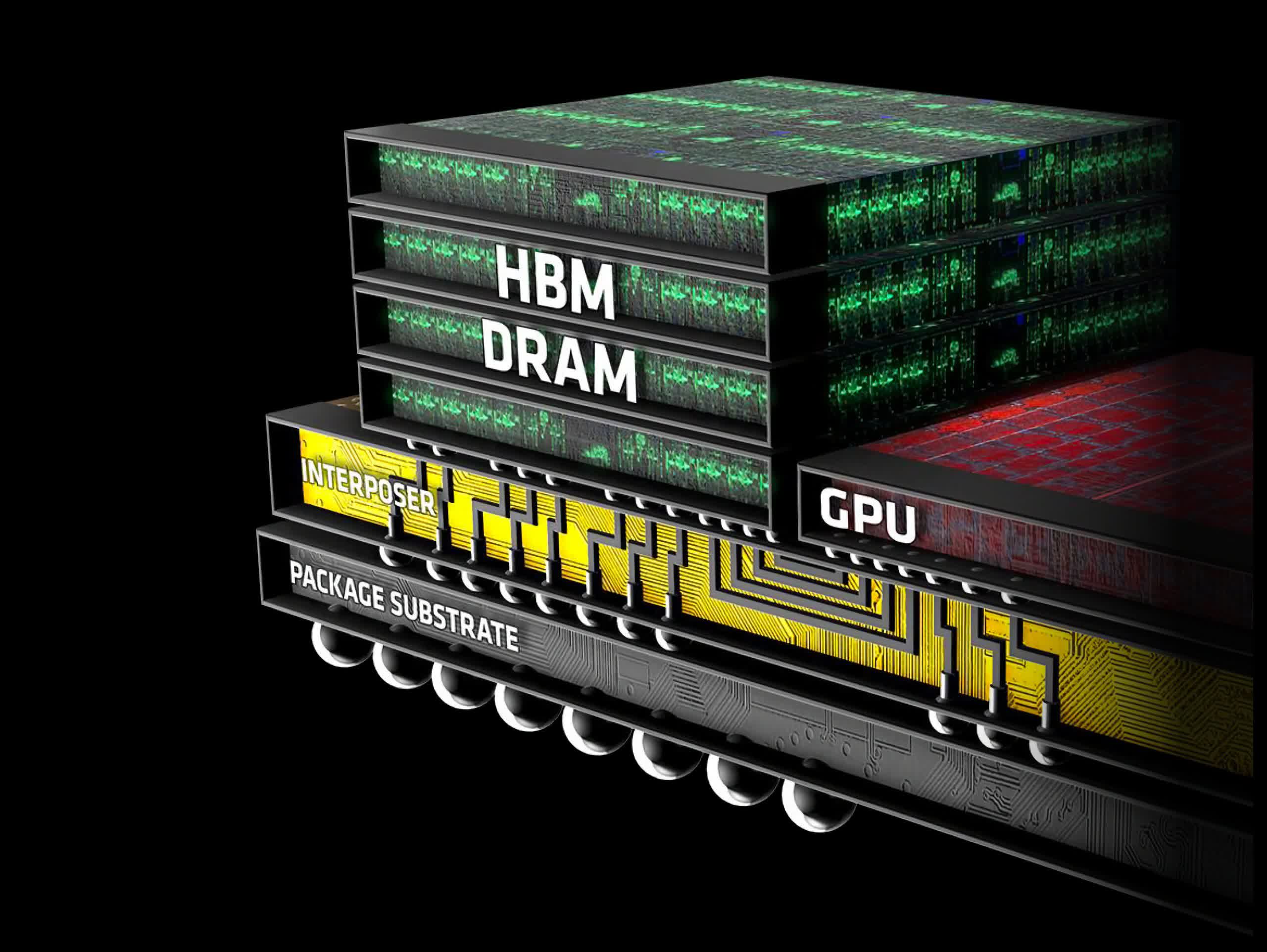

SK Hynix also said that, in test runs, DDR5 products built on the 1bnm node ran at 4.8Gbps, whereas the JEDEC standard allows DDR5 speeds of up to 8.8Gbps. According to Tom's Hardware, HBM3E will raise the per-pin data transfer rate from HBM3's 6.4 GT/s to 8.0 GT/s, increasing per-stack bandwidth from 819.2 GB/s to roughly 1 TB/s. However, it's not yet clear whether the new memory will be compatible with existing HBM3 controllers and interfaces.
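The per-stack figures follow from simple arithmetic: peak bandwidth equals the interface width in bits times the per-pin data rate, divided by 8 bits per byte. The sketch below assumes each HBM stack exposes a 1024-bit data interface (the width used by JEDEC HBM3); the function and constant names are illustrative only.

```python
# Peak per-stack bandwidth = interface width (bits) * per-pin rate (GT/s) / 8 bits per byte.
# Assumption: each stack has a 1024-bit data interface, as in JEDEC HBM3.
HBM_INTERFACE_BITS = 1024


def per_stack_bandwidth_gbs(data_rate_gts: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Return the theoretical peak bandwidth of one stack in GB/s."""
    return width_bits * data_rate_gts / 8


hbm3 = per_stack_bandwidth_gbs(6.4)   # HBM3 at 6.4 GT/s per pin
hbm3e = per_stack_bandwidth_gbs(8.0)  # HBM3E at 8.0 GT/s per pin

print(f"HBM3:  {hbm3:.1f} GB/s")
print(f"HBM3E: {hbm3e:.1f} GB/s (~{hbm3e / 1000:.2f} TB/s)")
print(f"Uplift: {hbm3e / hbm3 - 1:.0%}")
```

Run as-is, this prints 819.2 GB/s for HBM3 and 1024.0 GB/s for HBM3E, a 25 percent increase; the "1 TB/s" figure is that 1,024 GB/s rounded down. These are theoretical peaks, not measured throughput.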

SK Hynix is preparing HBM3E samples for the second half of this year, aiming to begin mass production in the first half of 2024. The company already partners with Nvidia, supplying the HBM3 used in the H100 Hopper compute GPU.

With a 50 percent share of the global HBM market, SK Hynix currently leads the sector, ahead of Samsung at 40 percent. If Nvidia approves HBM3E, SK Hynix could extend its lead over Samsung.

It remains unclear which of Nvidia's compute GPUs would use SK Hynix's HBM3E if the deal goes through. However, speculation suggests the memory could be integrated into team green's next-gen products, expected to launch in 2024.

There's also speculation that Nvidia may drop HBM3 entirely in favor of HBM3E for its Hopper-Next GPUs, but neither company has officially confirmed this. Meanwhile, Samsung is reportedly working on its own Snowbolt HBM3P memory, offering up to 5 TB/s of bandwidth per stack, so it will be interesting to see how it compares with SK Hynix's HBM3E in real-world performance.