WD Red SMR v. CMR Part I: The Reasonably Similar

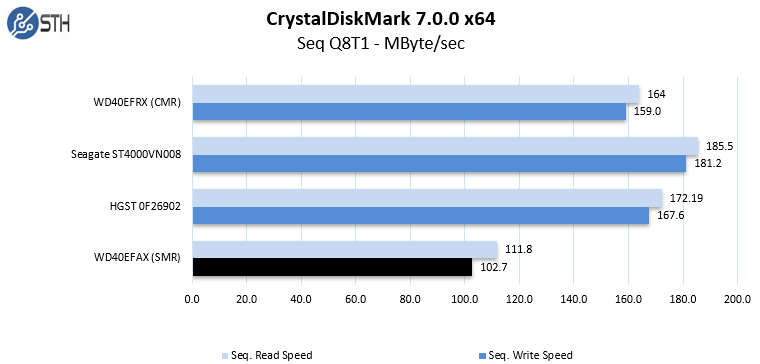

We are going to start with some general benchmarks to try and place the WD Red (WD40EFAX) performance in a larger context.

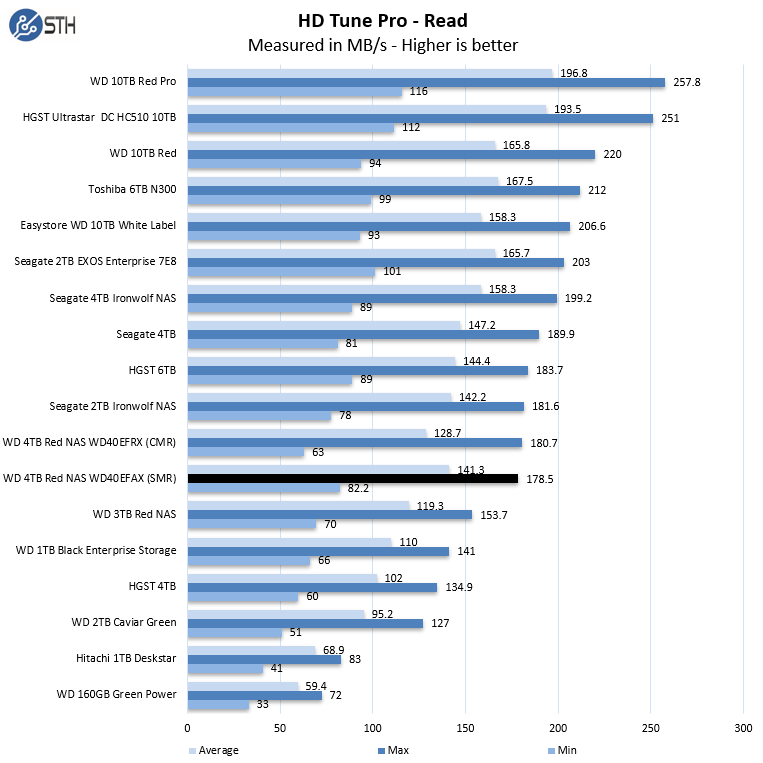

In read tests, the SMR drive performs fairly similarly to the CMR-based WD40EFRX.

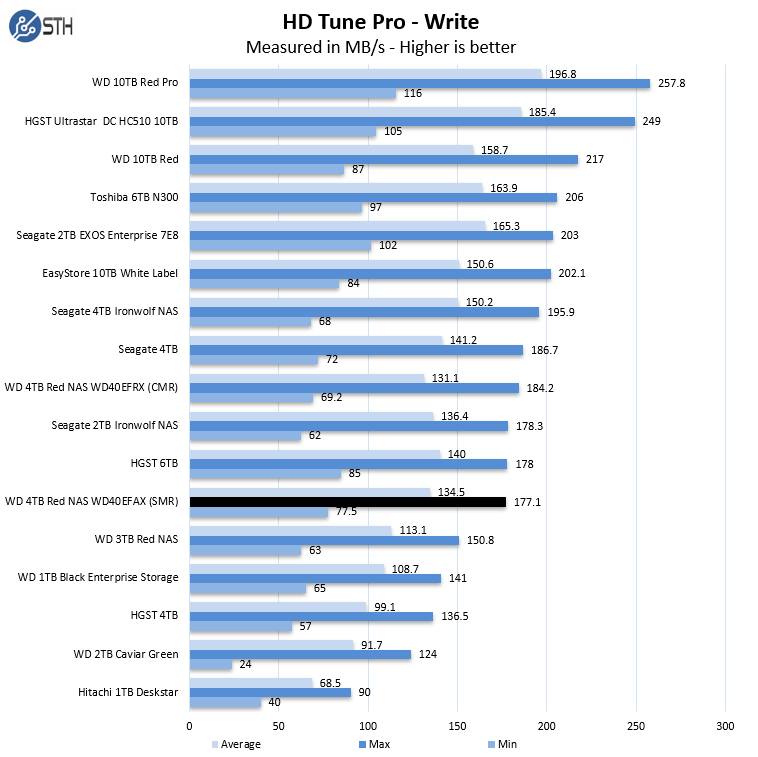

Write tests tell a mostly similar story. As an individual drive, the WD40EFAX is performing pretty well in these benchmarks.

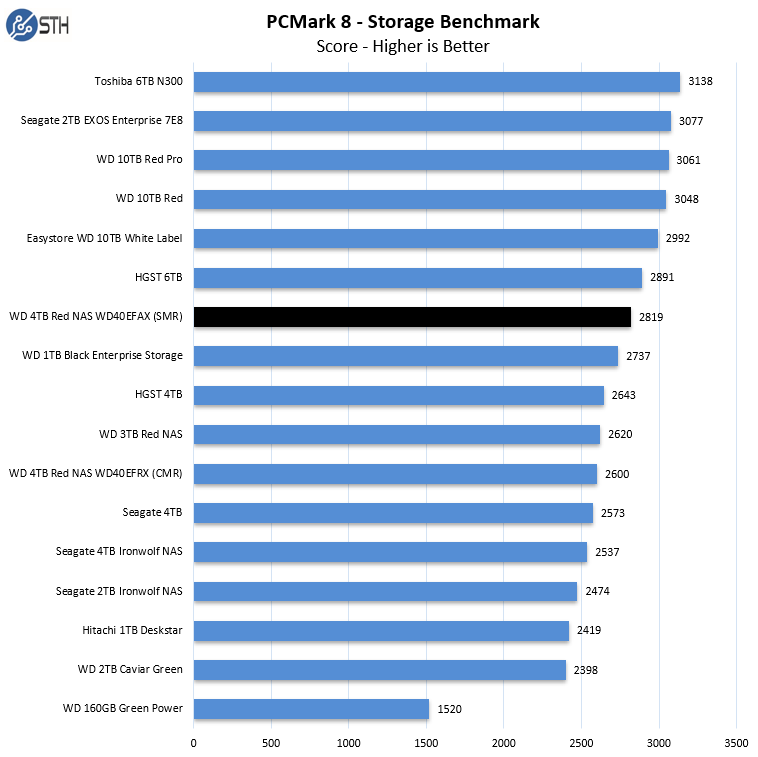

In PCMark8, the WD40EFAX manages to outperform the CMR WD40EFRX. The SMR drive has a much larger cache than the CMR version (256MB vs. 64MB), which perhaps helps account for the win here. In shorter, bursty workloads like these, one can see how SMR could serve as a substitute.

Next, we will move on to the tests focused on the WD40EFAX and NAS RAID arrays.

WD Red SMR v. CMR Part II: The Not So Good

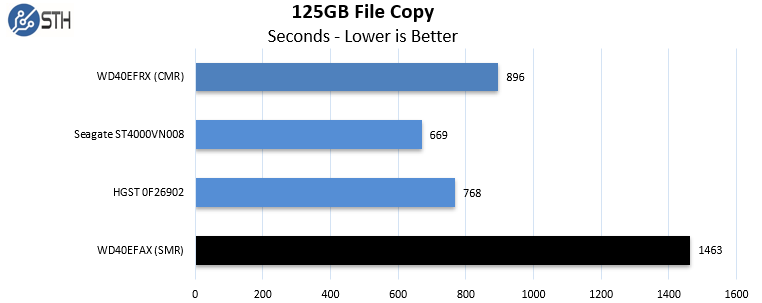

First up is the file copy test. Just a reminder, this test was performed as immediately as possible after completing the drive preparation process.

In the file copy test, the effects of the slower SMR technology start to show. The WD40EFAX turns in performance numbers that are significantly worse than the CMR drives.

Something we noticed is that the test that immediately followed the file copy test was a sequential CrystalDiskMark workload:

As you can see, with a heavy write workload immediately preceding the CDM test, the SMR drive was notably slower. In some ways, this is like timing a runner’s sprint right after they finish a marathon. One could argue that you may not transfer 125GB of files every day, but that is less data than the video production folder for this article’s companion video we linked at the start. Still, this is a good indicator of the drive working through its internal data management processes and of how that impacts performance.

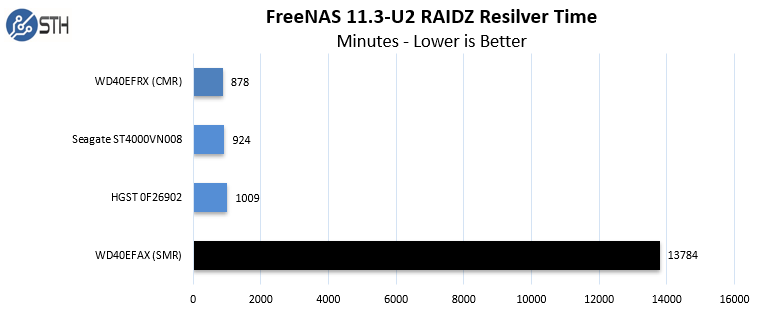

Due to the nature of our last test, it was not performed in rapid succession with the previous two. That said, each tested drive was disconnected as soon as its previous benchmarks were complete and stayed disconnected until it was plugged back in for use in our test NAS array. During this time, scrubs were disabled for the pool and resilvering priority was completely disabled.

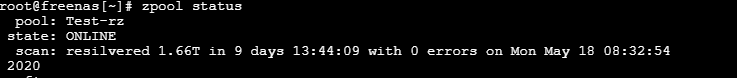

Unfortunately, while the SMR WD Red performed respectably in the previous benchmarks, the RAIDZ resilver test proved to be another matter entirely. While all three CMR drives comfortably completed the resilver in under 17 hours, the SMR drive took nearly 230 hours to perform an identical task.

The WD40EFAX performed so poorly that we repeated the test on a second disk to rule out user error; the second disk exhibited the same extremely slow resilver speeds. We also tested the SMR drives before and after the CMR drives to ensure that it was not a case of something happening due to the order of testing.

The only positive here is that the resilver did finish and encountered no errors along the way, but the drive’s performance in the RAIDZ array was completely unacceptable. A rebuild of 9 days and almost 14 hours means that inadvertently using the WD Red 4TB SMR drive in an array would leave your data vulnerable for around 9 days longer than with the WD Red 4TB CMR drive or the Seagate IronWolf. If you used WD Red CMR drives, you had class-leading performance in this test, but if you bought a WD Red SMR drive, perhaps not understanding the difference, you would have another 9 days of potentially catastrophic data vulnerability.
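To put the resilver numbers in perspective, here is a minimal Python sketch of the arithmetic behind the “around 9 days” figure. It assumes the quoted bounds (under 17 hours for the CMR drives, nearly 230 hours for the SMR drive) can be treated as exact values, so the result is approximate in both directions.

```python
# Exposure-window arithmetic for the resilver results quoted above.
# Assumption: "under 17 hours" and "nearly 230 hours" are treated as exact values.

CMR_RESILVER_HOURS = 17   # upper bound reported for the three CMR drives
SMR_RESILVER_HOURS = 230  # approximate time reported for the WD40EFAX


def to_days_hours(hours):
    """Split a duration in hours into (whole days, remaining hours)."""
    days = int(hours // 24)
    return days, hours - days * 24


def extra_exposure_days(smr_hours, cmr_hours):
    """Extra days the pool stays degraded when the SMR drive does the resilver."""
    return (smr_hours - cmr_hours) / 24


days, rem = to_days_hours(SMR_RESILVER_HOURS)
print(f"SMR resilver: {days} days {rem:.0f} hours")  # SMR resilver: 9 days 14 hours
gap = extra_exposure_days(SMR_RESILVER_HOURS, CMR_RESILVER_HOURS)
print(f"Extra degraded time: {gap:.1f} days")  # Extra degraded time: 8.9 days
```

Because the CMR drives finished in under 17 hours while the SMR figure is “nearly” 230, the true gap sits close to this estimate rather than exactly on it.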

According to iXsystems, WD Red SMR drives running firmware revision 82.00A82 can enter a failed state under heavy loads using ZFS. Both of our drives shipped with this firmware revision. We did not experience this failure mode; we only saw extremely poor performance. Perhaps that was because we were testing the drive as a replacement rather than building an entire array of SMR drives. In either case, we suggest not using them.
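If you want to check whether a drive already in a system matches the combination iXsystems warned about, both the model and the firmware revision appear in the output of `smartctl -i` from smartmontools. The Python sketch below parses that text. The helper names are hypothetical, and the set of SMR WD Red model numbers is an assumption based on WD’s published WD Red product brief (the 2-6TB EFAX models), so verify it against the current brief before relying on it.

```python
import re
import subprocess

# Assumption: the SMR WD Red models are the 2-6TB "EFAX" parts listed in WD's
# published WD Red product brief; confirm against the current brief.
SMR_WD_RED_MODELS = {"WD20EFAX", "WD30EFAX", "WD40EFAX", "WD60EFAX"}
# Firmware revision iXsystems warned about (and the one on both test drives).
FLAGGED_FIRMWARE = "82.00A82"


def parse_smartctl_info(text):
    """Collect the 'Device Model' and 'Firmware Version' fields from `smartctl -i` text."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in ("Device Model", "Firmware Version"):
            fields[key.strip()] = value.strip()
    return fields


def base_model(device_model):
    """Reduce a string like 'WDC WD40EFRX-68N32N0' to 'WD40EFRX' ('' if no match)."""
    match = re.search(r"\bWD\d+[A-Z]+", device_model)
    return match.group(0) if match else ""


def assess(text):
    """Hypothetical helper: classify a drive from its `smartctl -i` output."""
    info = parse_smartctl_info(text)
    if base_model(info.get("Device Model", "")) not in SMR_WD_RED_MODELS:
        return "not a listed SMR WD Red"
    if info.get("Firmware Version") == FLAGGED_FIRMWARE:
        return "SMR WD Red on flagged firmware"
    return "SMR WD Red"


def read_smartctl_info(device):
    """Run `smartctl -i` on a device such as /dev/sda (needs smartmontools, usually root)."""
    result = subprocess.run(["smartctl", "-i", device], capture_output=True, text=True, check=False)
    return result.stdout


# Illustrative input only; the serial suffix "00XXXXX" is a placeholder.
sample = "Device Model:     WDC WD40EFAX-00XXXXX\nFirmware Version: 82.00A82\n"
print(assess(sample))  # SMR WD Red on flagged firmware
```

On a live system, `assess(read_smartctl_info("/dev/sda"))` would run the same check against a real drive; the device path is only an example.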

Other NAS vendors are staying silent on this issue, even if they use ZFS and these WD Red SMR drives. We would like to see a more rigorous drive certification process at iXsystems, but they have at least done a good job communicating the issue on their blog. Still, the WD Red line reaches well beyond the iXsystems blog audience, even into local retail channels where these drives may be purchased without the benefit of online research.

Final Words

The performance results achieved by the WD Red WD40EFAX surprised me; my only personal experience with SMR drives prior to this point was with Seagate’s Archive line. Based on my time with those drives, I was expecting much poorer results. Instead, individually, the WD Red SMR drives are essentially functional. They work aggressively in the background to mitigate their own limitations. The performance of the drive seemed to recover relatively quickly if given even brief periods of inactivity. For single drive installations, the WD40EFAX will likely function without issue.

However, the WD40EFAX is not a consumer desktop-focused drive. Instead, it is a WD Red drive with NAS branding all over it. When that NAS readiness was put to the test the drive performed spectacularly badly. The RAIDZ results were so poor that, in my mind, they overshadow the otherwise decent performance of the drive.

The WD40EFAX is demonstrably a worse drive than the CMR-based WD40EFRX, and assuming that you have a choice in your purchase, the CMR drive is the superior product. Given the significant performance and capability differential between the CMR WD Red and the SMR model, they should be different brands or lines rather than just different product numbers. In online product catalogs, keeping the same branding means the SMR drive shows up as a “newer model” at many retailers. Many buyers will simply purchase the newer model expecting it to be better, as previous generations have been. That is not a recipe for success.

Thanks to the public outcry, WD is now properly noting the use of SMR technology in the drives on its online store, and Amazon and Newegg have also followed suit. The potential for confusion is still high, though. The general population does not follow drive technology closely. The differences between SMR and CMR are fairly nuanced. Regular STH readers may understand them, but those regular readers are the same IT professionals who keep up with the latest technology trends in the market. Absent that context, simply putting the word “SMR” in a product listing does not help an uninformed purchaser choose the correct product. Still, it is a good step.

If you are reading this piece and know someone who uses, or may use, WD Red drives in NAS arrays but may not keep track of trends, send them this article, a chart from it, or the video. So long as there is proper disclosure and people are making an informed choice, SMR is a valid technology. Simply saying SMR is not enough without showing the impact.

Thanks for putting your readers first with stuff like this. That’s why STH is a gem. It takes balls to do it but know I appreciate it.

You didn’t address this but now I’ve got a problem. Now I know I’ve sold my customers FreeNAS hardware that isn’t good. I had such a great week too.

If you watch the video, it’s funny. There @Patrick is saying how much he loves WD Red (CMR) drives while using this to show why he doesn’t like the SMR drives. At the start you’d think he’s anti-WD but by the end you realize he’s actually anti-Red SMR. Great piece STH.

It’s nice to see the Will cameo in a video too.

I know I’m being a d!ck here, but the video has a much more thorough impact assessment, while this is more about showing the testing behind what’s being said in the video. Your video and web versions are usually much closer to one another.

Finally a reputable site has covered this. I’m also happy to see you tried a second drive. You’d be surprised how often we see clients panic and put in new drives. Sometimes they put in Blues or whatever because that’s all they can get. +1 on rep for it.

NAS drives are always a gamble, SMR or not. You should always keep away from cheap HDDs, and that also includes cheap SSDs, if you are trying to build a NAS that performs well in a RAID setup.

Spend a little more money on 5400/5600 – 7200 RPM drives that are CMR. And for SSDs, be aware that QLC SSDs will fall back to about 80MB/s transfer rates as soon as you fill the small cache they have built in.

So take your time and pick your storage depending on your needs.

1) For heavier NAS use, stay away from SMR HDDs and QLC SSDs.

2) For backup purposes, SMR HDDs and QLC SSDs are a good choice.

CMOSTTL, this basically shows you should stay away from SMR even for backup in NASes. Reds aren’t cheap either, but they’ve previously been good.

I’d like to say thanks to Seagate for keeping CMR IronWolf. We have maybe 200 CMR Reds that we’ve bought over the last year. I passed this article around our office. Now we’re going to switch to Seagate.

It would be interesting to see RAID rebuild times on a more conventional RAID setup, perhaps hardware RAID or Linux mdadm, instead of just ZFS.

Would be worthwhile to at least update the following articles with a warning to avoid SMR HDDs when using ZFS:

https://www.servethehome.com/buyers-guides/top-hardware-components-freenas-nas-servers/top-picks-freenas-hard-drives/

https://www.servethehome.com/hpe-proliant-microserver-gen10-plus-ultimate-customization-guide/2/

Shucking external drives (which are often SMR) is mentioned on both pages.

At the end of the YouTube video, it clearly shows a WD-produced spec sheet that shows which drives are SMR vs CMR. Any chance anyone has a link to that? I’d really like to go through all my drives and explicitly verify which ones are SMR vs CMR.

Robert Dole,

I think this is the link you are looking for: https://documents.westerndigital.com/content/dam/doc-library/en_us/assets/public/western-digital/product/internal-drives/wd-red-hdd/product-brief-western-digital-wd-red-hdd.pdf

Fortunately, I bought WD Red 4TB drives a long time ago; they are EFRX and they were used in a RAID system. Since then, I have standardized on 10TB drives, which are CMR. I had followed the story on blocksandfiles (.com), and it is really good that it landed on STH and was then followed by a testing report.

It is indeed a good sign to see STH calling BS when it is… BS.

Thank you!

CONCLUSION: one more checkbox to check when buying drives, not SMR?

Dear Western Digital, you thought you could get away with it because a basic benchmark does not show much difference, OR you were not even aware of the issue because you did not test them with RAID. Duplicity or lazy indifference or both? On top of that, you clumsily tried to cover it up before finally owning up to it.

Dear Western Digital, I will probably continue to buy WD Red in the future, but I just voted with my $$$ following this story. I needed 3 x 10TB drives, and I went with barely used open-box HGST He10 drives on eBay (all 2019 models with around 1,000 hours of usage). They were priced like new WD Red 10TB drives ;-)

Thank you to Will for doing this testing and Patrick for making it happen. It’s about time a large highly regarded site stepped in by doing more than just covering what Chris did.

I want to point out that you’re wrong about one thing. In the video, Patrick says 9 days. If you round to the nearest day, it’s 10 days, not 9. Either is bad.

Doing the CMR too was great.

Luke,

If we’d said 10 days, someone could come along and say we were exaggerating the issue. We say 9 days and we’re understating the problem, which in my mind is the more defensible position. An article like this has a high likelihood of ruffling feathers, so we wanted to have as many bases covered as possible.

So glad I got 12TB Toshiba N300s last year that are CMR.

I didn’t specifically check for it back then because, you know, N300 series.

Would be very unhappy if I had gotten SMR drives though.

Thanks, Will. Good analysis. I learned this lesson a few years ago with Seagate SMR drives and a 3ware 9650se. Impossible to replace a disk in a RAID5 array, the controller would eventually fail the rebuild.

I think you should explain how SMR works with a set of images or an animation. Most people do not understand how complex SMR is when data needs to be moved from a bottom shingled track. And really nobody (you included) mentions how inefficient this is in terms of power consumption: all the reading and writing involved in moving the data on a top shingle consumes energy while a CMR drive would be sleeping the whole time.

At least WD is now showing which model numbers are CMR or SMR on their spec. page https://www.westerndigital.com/products/internal-drives/wd-red-hdd

AFAIK, the SMR Reds support the TRIM command. I wonder to what extent can performance be regained with its use. Also, if you trim the entire disk (and maybe wait a little), does it return to initial performance? Has anyone tested this?

Not that I would use SMR for NAS. In my opinion, the SMR Reds are a case of fraudulent advertising. Friends don’t let friends use SMR drives for NAS.

Maybe I’m in the minority here. I’m fine with the drive makers selling SMR drives. I just want a clear demarcation. WD Red = CMR, WD Pink = SMR. Even down to external drives needing to be marked in this way.

Clearly the problem is with the label on the drive

WD Red tm

NAS Hard Drive

should read

WD Red tm

Not for NAS Hard Drive

If people can sue Apple for advertising that a phone has 16GB of storage when some of that is taken up by the operating system, those two missing words may make a huge difference in the legal circus.

Yes, there is an array running here, due to the brilliance of picking drives from different production runs and vendors, that has half SMR and half CMR. While it’s running well enough at the moment, does anyone know if a scrub is likely to cause problems with SMR drives?

Great article as always. :) Great to see some hard facts related to this after reading about it from others.

And it looks like WD got caught and now have a class-action law suit brewing:

https://arstechnica.com/gadgets/2020/05/western-digital-gets-sued-for-sneaking-smr-disks-into-its-nas-channel/

Form to join the class:

https://www.hattislaw.com/cases/investigations/western-digital-lawsuit-for-shipping-slower-smr-hard-drives-including-wd-red-nas/

I just ordered 3 WD 4TB Red for a new NAS and had no clue! Checked the invoice and they are marked as WD40EFRX (phew)…

Great article!

Such a shame. I was happy putting Red drives into client NASes; now I will be putting in IronWolf. What was Western Digital thinking? Was there nobody on the team who realised the consequences?

I have a problem with your RAIDZ test: normally I replace failed disks with brand new, just unpacked ones, not ones that were used to write a lot of data and were immediately disconnected. Why aren’t you showing how long it takes for an array to rebuild under those conditions? Granted, this is a good article that demonstrates what happens when the SMR cache is filled and disks don’t have enough idle time to recover, but I doubt this happens a lot in real life, and your advice to avoid SMR does not follow from the data you’ve obtained. All I can conclude is “don’t replace failed disks in RAIDZ arrays with SMR disks that just came out of heavy load and did not have time to flush their cache.”

How often do you force your marathon runners to run sprints just after they’ve finished the marathon?

This is a great article. Ektich, we load test every drive before we put it into customer systems as a replacement to ensure we aren’t using a faulty drive. You don’t test a drive before putting it into a rebuild scenario? That’s terrible practice. You don’t need to do it with CMR drives either. CMR was tested in the same way, so I don’t see how it’s a bad test. Even with a cache flush, they’re hitting steady state because of the rebuild. Their insight into the drive being used while doing the rebuild is great too.

More trolls on STH when you get to these mass audience articles. But great test methodology STH. It’s also a great job having the balls to publish something like this to help your readers instead of serving WD’s interests.

Thank you for the article and thank you in particular Will for the link to the WD Product brief. From the brief I now know the 3TB drives I bought for my Synology are CMR. I was under the misapprehension (along with that sinking feeling) from reporting from other sites that all 3TB WD Reds are SMR when in fact there are two models. I’m guessing the CMR model is an older one as I bought mine a few years ago now.

I second the motion to re-test with Linux MD-RAID. No LVM, just plain MD-RAID with a single file system. Plus, I’d like to see some stock hardware RAID devices tested along the same lines.

To keep the comparison fair, use the same disk configuration of 4 x 4TB CMR disks in RAID-5, replacing one with an SMR disk.

About 5 years ago I bought a Seagate 8TB Archive SMR disk for backing up my FreeNAS. To be crystal clear, I knew what SMR was, and that the drive used it. Since my source had 4 x 4TB WD Red CMRs, using a single 8TB drive for backups was perfect.

Initially it worked reasonably fast, but as time went on, it slowed down. I use ZFS on it, with snapshots, so it actually stores multiple backups. For my use (it was the only 8TB drive on the market at a reasonable price at that time), it works well. My backup window is not time-constrained; I simply let it run until it’s done.

But, selling SMR as a NAS drive, AND not clearly labeling it, (like Red Lite), that should be criminal.

https://www.youtube.com/watch?v=sjzoSwR6AYA

Here they compared a rebuild with mixed drives, and the results were not as severe?

Does it strongly depend on the type of RAID and file system?

It would be interesting to test on consumer devices such as Synology or QNAP.

I do get the unhappiness about the incorrect branding, but are the results really this severe, especially for consumer NAS?

Robert, that video is very hard to follow. They are using smaller capacity drives with different NAS systems. They are also not doing a realistic test since it seems they are not putting a workload on the NAS during rebuilds? The ability to keep systems running and maintaining operations is a key feature of NAS/ RAID systems. It is strange not to at least generate some workload during a rebuild.

People are seeing very poor performance with these SMR drives and Synology as well, even in normal operation. A great example is http://blog.robiii.nl/2020/04/wd-red-nas-drives-use-smr-and-im-not.html

The systems and capacities used will impact results in different ways. We wanted to present a real-world use case with ZFS so our readers have some sense of the impact. We use ZFS heavily and many of our readers do as well.

hey thanks for the quick reply!

yes indeed they only compare rebuilding while there is no other access.

But here is the question for me, as somebody who is about to buy a new NAS as a media hub for videos and photos: I still have two old st4000dm005 drives lying around and would use them, adding either two cheap 8TB drives (SMR – st8000dm004) or, given the whole SMR NAS drive debate, a very expensive CMR IronWolf or something like that?

My use case would just be me and my wife, and once the newborn is old enough, perhaps him?

But for a consumer use case, is the whole SMR debate a real problem? I get that it’s not OK to hide what the drive actually uses, but at a media server/backup level? Taking into account that you regularly make backups of the backups.

I truly would like to know in order to make a decision. Do I need an expensive CMR drive (IronWolf Helium), a “cheaper” SMR Red NAS drive, or will a standard 8TB Barracuda SMR “archive drive” suffice for media (Plex) and photos?

Robert – I generally look for low-cost CMR drives, and expect that they will fail on me. While I expect the drive failures, I also look for a predictable level of performance during operation and rebuilds. In both cases, the WD Red SMR drives would not work for me personally. I will also say that a likely part of the problem here is that these are DM-SMR drives that hide the fact they are SMR from the host. SMR drive support is getting better when hosts know they are using SMR drives.

I generally tell people RAID arrays tend to operate at the speed of their slowest part. If you mix drives, the slower ones tend to dictate performance more times than not.

On the WD Red drives, the 64MB cache CMR drives are still available and worked great in our testing.

Guess I should be happy all mine are EFRX as well…

Someone said this is part of a RACE for BIGGER capacities. It can be… BUT, before that happens, WD is probably using the most demanding customers / environments to TEST SMR tech so they can DEPLOY it in the bigger capacity DRIVES: 8, 10, 12, 14TB and beyond (which do not currently exist). I say this because WD sells the same capacities both as “infected SMR drives” and with the well-known PMR tech! https://documents.westerndigital.com/content/dam/doc-library/en_us/assets/public/western-digital/product/internal-drives/wd-red-hdd/data-sheet-western-digital-wd-red-hdd-2879-800002.pdf

Why is that? Why keep SMR and PMR drives with the SAME capacity in the same line and HIDING this info from customers? So they can target “specific” markets with the SMR drives? It seems like a marketing TEST!!! How BIG is it?

Note that currently, the MAX capacity drive using SMR is the 6TB WD60EFAX, with 3 platters / 6 heads… So… is that it?? Is WD USING RAID / more demanding users as “guinea pigs” to test SMR and then move on and use SMR on +14TB drives (that currently use HELIUM inside to bypass the theoretical limitation of 6 platters / 12 heads)??? Is that the next step? And after that, plague all the other lines (like the BLUE one, that already has 2 drives with SMR). I’m thinking YES!! And this is VERY BAD NEWS. I don’t want a mechanical disk that overlaps tracks and has to write adjacent tracks just to write a specific track!!!

Customers MUST be informed of this new tech, even those using EXTERNAL SINGLE DRIVES ENCLOSURES!!! I have many WD external drives, and i DON’T WANT any drive with SMR!!! Period!

Thankfully, I checked my WD ELEMENTS drives, and NONE of the internal drives is PLAGUED by SMR! (BTW, if you ask WD how to find out the DRIVE MODEL inside an external WD enclosure, they will tell you it’s impossible!!! WTF is that??? WD technicians don’t have a way to query the drive and ask for the model number?? Well, I’ve got news for you: CrystalDiskInfo CAN!!! How about that? Stupid WD support… )

So, if anyone needs to know WHAT INTERNAL DRIVE MODEL they have in their WD EXTERNAL ENCLOSURES, install https://crystalmark.info/en/software/crystaldiskinfo and COPY the info to the clipboard (EDIT -> COPY or CTRL-C)! Paste it into a text editor, and voila!!!

(1) WDC WD20EARX-00PASB0 : 2000,3 GB [1/0/0, sa1] – wd

(2) WDC WD40EFRX-68N32N0 : 4000,7 GB [2/0/0, sa1] – wd

Compare this with the “INFECTED” SMR drive list, and you’re good to go!
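The comparison step described here can also be scripted. The Python sketch below assumes the CrystalDiskInfo paste uses the line format shown in this comment, and it relies on an assumed list of SMR WD Red models (the 2-6TB EFAX parts from WD’s published WD Red product brief) that should be checked against the current brief. The function names are hypothetical.

```python
import re

# Assumption: SMR WD Red models per WD's published WD Red product brief
# (the 2-6TB "EFAX" parts); double-check against the current brief.
SMR_WD_RED_MODELS = {"WD20EFAX", "WD30EFAX", "WD40EFAX", "WD60EFAX"}

# Matches lines like "(2) WDC WD40EFRX-68N32N0 : 4000,7 GB [2/0/0, sa1] – wd"
# and captures the base model number ("WD40EFRX").
LINE_RE = re.compile(r"^\(\d+\)\s+(?:WDC\s+)?(WD\d+[A-Z]+)")


def models_from_crystaldiskinfo(text):
    """Return the base model numbers found in a CrystalDiskInfo drive-list paste."""
    models = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            models.append(match.group(1))
    return models


def smr_check(text):
    """Map each listed model to True if it appears in the assumed SMR list."""
    return {model: model in SMR_WD_RED_MODELS for model in models_from_crystaldiskinfo(text)}


paste = """(1) WDC WD20EARX-00PASB0 : 2000,3 GB [1/0/0, sa1] – wd
(2) WDC WD40EFRX-68N32N0 : 4000,7 GB [2/0/0, sa1] – wd"""
print(smr_check(paste))  # {'WD20EARX': False, 'WD40EFRX': False}
```

Matching on the base model number rather than the full string keeps the check independent of the serial-style suffix after the dash.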

P.S. I will NEVER buy another EXTERNAL WD drive again without being able to check the internal drive MODEL first!!!! That’s for sure!

Just read this bollocks: https://arstechnica.com/gadgets/2020/06/western-digitals-smr-disks-arent-great-but-theyre-not-garbage/2/

I saw that Ars piece. I thought it was good in explanation, but it’s odd.

They go way too in-depth on the technical side, but when you look at it, they ran a weaker experiment. They’re using different size drives and more drives, and they’re not putting on a workload, just letting it rebuild. They’re using the technical block size and command bits to hide that they’ve done a less thorough experiment. So they go way into the weeds on commands (that the average QNAP, Synology, or Drobo user has no clue about), then say it’s fine… oh, but for ZFS it still sucks. They wrote the article like someone who uses ZFS, though.

I’m thinking WD pays Ars a lot.

Ars articles always lack the depth of real reporting, but do provide an entertainment factor and many times the commenters have much more insight (which is what I love finding and reading).

STH articles have always had the feel of ‘real news’ to me–from the easystore article to this one, highlighting the true pros and cons.

Is this CMR or SMR??

https://www.cnet.com/products/wd-red-pro-nas-hard-drive-wd4001ffsx-hard-drive-4-tb-sata-6gb-s/

Just got off the phone with a Seagate rep. And I’m fuming right now.

Background:

I am running a 6×2.5″ 500GB RAID10 array, 3TB raw (1.5TB usable), for my Steam library. The drives are Seagate Barracuda ST500LM050 drives from the same or a similar batch. The drives have performed terribly since day 1, making the whole PC appear unresponsive for minutes the moment a single file in the Steam library is rewritten for a game update. Things get worse when Steam needs to preallocate storage space for new games; often I have to leave the machine alone for two to three hours. I already changed the motherboard once because I thought it was a motherboard issue. Even with a new motherboard, the problem persisted.

Then I found out about this lawsuit. And upon further investigation I found out that these disks are SMR. I filed a support request with Seagate.

I received a phone call from the rep this morning. They were apologetic, but then they dropped the bombshell: All Seagate 2.5″ drives are SMR, they no longer make 2.5″ PMR drives.

I’m really frustrated. There was no information on whether the drives are SMR or PMR, and there was NO indication whatsoever that they should not be used in RAID arrays.

Great article, thanks for the info. Using older WD Reds in a server with ZFS raid, and thinking about buying more on sale… big eye opener here. Had no idea this was a thing but glad I googled it now.

9 days to rebuild a RAID is just bonkers.

Very interesting, very disconcerting. Thanks for testing and reporting!

“An article like this has a high likelihood of ruffling feathers, so we wanted to have as many bases covered as possible.”

Bollocks to the feathers. Keep calm and ruffle on.

STH: do you have a more complete followup article? There are tons of loose ends in this old stuff. For instance, is this effect specific to ZFS’s resilver algorithm? Or has someone else done better followup? (Including raid6/raidz2, since plain old raid1 has been not-best practice for a long time…)

Yep. SMR drives actually created a new tier for my server… basically a read-only tier that I need to read from but rarely write to… they are indeed cheap, though.

Hi Will Taillac,

The RAID rebuild problem you refer to is a peculiarity of ZFS. It does not occur in Hardware Based RAID and it does not occur in Windows RAID or Storage Spaces. It does not occur in other Software Defined Storage solutions.

In fact, ZFS ‘resilvering/reslivering’ is the only known ‘workload’ that stumbles over SMR.

Isn’t it time to place the ‘blame’ where it properly belongs — on ZFS?

KD Mann you mean to blame the 15+ year old storage platform with millions of installations instead of the company that marketed those drives as suitable for ZFS? That’s a really weird position unless you work at WD.

I knew of the WD SMR scandal. Fortunately, I have four of the WD40EFRZ CMR models, so I breathed a sigh of relief. However, WD has gotten off incredibly lightly here. They marketed their Red range as NAS drives, all of them, not just those they knew were CMR. That’s completely unacceptable, and the fact that they weren’t forced to change those SMR Reds to Blue or Blue Plus or something is outrageous.

If you scour Amazon for WD Reds, you will rarely, if ever, see whether the drive is CMR or SMR. I have had to resort to a lengthy table of drives by model number to find out whether a WD drive, or any other for that matter, is CMR or SMR.

The last time I checked, WD’s 6TB Red was SMR and I couldn’t find any CMR counterpart!

WD, stop selling the drives as Red models and at higher prices than the desktop Blue range!!!