Zero Trust Security and Confidential Computing

Do you often hear these terms in network security discussions?

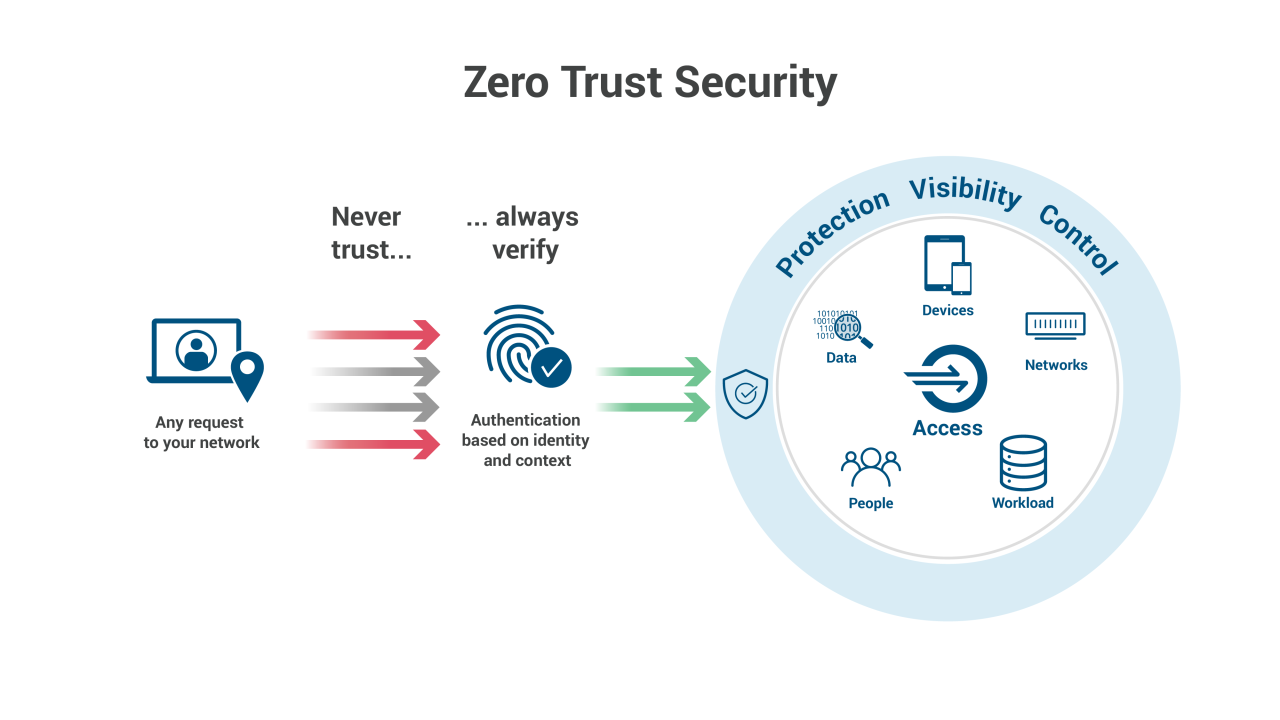

Zero Trust is a security concept that requires all users, even those inside the organization’s enterprise network, to be authenticated, authorized, and continuously validated for security configuration and posture before being granted, or allowed to keep, access to applications and data. This approach leverages technologies such as multifactor authentication (MFA), identity and access management (IAM), and next-generation endpoint security to verify the user’s identity and maintain system security.

A Zero Trust security environment focuses on earning trust across every endpoint, including desktops, mobile devices, servers, and IoT devices. It continuously validates that trust at every event or transaction, authenticating users to deliver a Zero Touch experience that improves security without interrupting the user. Zero Trust is a strategic initiative that helps prevent successful data breaches by eliminating the concept of implicit trust from an organization’s network architecture.

A traditional, perimeter-based approach to network security focuses on keeping attackers out of the network but leaves it vulnerable to users and devices already inside. Traditional network security architecture builds multiple layers of security at the perimeter, using firewalls, VPNs, access controls, IDS, IPS, SIEMs, and email gateways, and cyber attackers have learned to breach these layers. This “verify, then trust” model trusts users inside the network by default: someone with the correct user credentials could be admitted to the network’s complete array of sites, apps, or devices. Zero Trust, by contrast, assumes the network has already been compromised and challenges every user or device to prove that it is not an attacker.

Zero Trust requires strict identity verification for every user and device attempting to access resources on a network, even if they are already within the network perimeter. Zero Trust also limits a user’s access once inside the network, preventing an attacker who has gained access from moving laterally and freely across the network’s applications.
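To make the contrast concrete, here is a minimal Python sketch that assumes a hypothetical corporate range (10.0.0.0/8) and a hypothetical per-user entitlement table; `Request`, `ENTITLEMENTS`, `perimeter_allows`, and `zero_trust_allows` are illustrative names, not any product's API. The perimeter check admits an attacker on a compromised internal host, while the Zero Trust check ignores network location and asks for valid credentials, a compliant device, and an entitlement to the specific resource.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical corporate address range, for illustration only.
CORPORATE_NETWORK = ipaddress.ip_network("10.0.0.0/8")

# Hypothetical per-user entitlements: which resources each identity may reach.
ENTITLEMENTS = {
    "alice": {"payroll-app"},
    "bob": {"wiki"},
}


@dataclass(frozen=True)
class Request:
    user: str
    source_ip: str
    resource: str
    credentials_valid: bool
    device_compliant: bool


def perimeter_allows(req: Request) -> bool:
    """Perimeter model: anything inside the corporate network is trusted."""
    return ipaddress.ip_address(req.source_ip) in CORPORATE_NETWORK


def zero_trust_allows(req: Request) -> bool:
    """Zero Trust model: network location is ignored. Every request needs
    valid credentials, a compliant device, and an explicit entitlement
    to the specific resource being requested."""
    return (
        req.credentials_valid
        and req.device_compliant
        and req.resource in ENTITLEMENTS.get(req.user, set())
    )


# An attacker on a compromised internal host reuses Bob's stolen
# credentials and tries to move laterally to the payroll application.
lateral = Request("bob", "10.1.2.3", "payroll-app",
                  credentials_valid=True, device_compliant=False)
print(perimeter_allows(lateral))   # True: the request comes from inside
print(zero_trust_allows(lateral))  # False: non-compliant device, no entitlement
```

Note that Alice connecting from outside the corporate range with a compliant device would pass the Zero Trust check but fail the perimeter check: location stops being the deciding factor.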

Every remote endpoint is a potential attack target, and the number of endpoints keeps increasing as the number of remote workers and IoT devices grows rapidly. Zero Trust can only succeed if organizations continuously monitor and validate that a user and his or her device have the right privileges and attributes. One-time validation simply won’t suffice, because threats and user attributes are all subject to change. Advanced persistent threat (APT) actors continue to target VPNs to gain a foothold on the networks of targeted organizations. The unfortunate reality is that VPNs are not as secure as most organizations and individuals believe: a variety of studies report numerous security flaws in VPNs that leave an organization and its remote workforce vulnerable to cyberattacks.

The principles of Zero Trust architecture as established by the National Institute of Standards and Technology (NIST)

Source: NIST Special Publication 800-207

Policy Engine

The policy engine is the core of zero trust architecture: it decides whether to grant access to any resource within the network. It relies on policies defined by the enterprise’s security team, as well as data from external sources such as security information and event management (SIEM) systems or threat intelligence feeds, to verify each request and determine its context. Access is then granted, denied, or revoked based on the parameters defined by the enterprise. The policy engine communicates with a policy administrator component that executes the decision.
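A policy engine decision can be sketched as a function that combines enterprise policy with external signals. In this minimal sketch the policy format, the `flagged_users` set (standing in for SIEM alerts), and the `malicious_ips` set (standing in for a threat-intelligence feed) are hypothetical simplifications; real policy engines evaluate far richer context.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    GRANT = "grant"
    DENY = "deny"
    REVOKE = "revoke"


@dataclass(frozen=True)
class AccessContext:
    user: str
    resource: str
    source_ip: str
    session_active: bool  # True when re-evaluating an already-open session


@dataclass
class PolicyEngine:
    # Enterprise policy (hypothetical format): resource -> users allowed.
    policy: dict = field(default_factory=dict)
    # External signals, e.g. synced from a SIEM and a threat-intelligence feed.
    flagged_users: set = field(default_factory=set)
    malicious_ips: set = field(default_factory=set)

    def decide(self, ctx: AccessContext) -> Decision:
        allowed = ctx.user in self.policy.get(ctx.resource, set())
        risky = ctx.user in self.flagged_users or ctx.source_ip in self.malicious_ips
        if allowed and not risky:
            return Decision.GRANT
        # A session that no longer passes is revoked; a new request is denied.
        return Decision.REVOKE if ctx.session_active else Decision.DENY


engine = PolicyEngine(
    policy={"crm": {"alice", "bob"}},
    flagged_users={"bob"},           # e.g. an open SIEM alert for bob
    malicious_ips={"198.51.100.7"},  # e.g. listed by threat intelligence
)
print(engine.decide(AccessContext("alice", "crm", "192.0.2.10", False)))  # Decision.GRANT
print(engine.decide(AccessContext("bob", "crm", "192.0.2.11", True)))     # Decision.REVOKE
```

Bob is allowed by the enterprise policy, yet his open session is revoked because an external signal flags him: the policy alone is not enough to keep access.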

Policy Administrator

The policy administrator component is responsible for executing access decisions made by the policy engine. It can allow or deny the communication path between a subject and a resource. Once the policy engine makes an access decision, the policy administrator kicks in to allow or deny a session by communicating with a third logical component called the policy enforcement point.

Policy Enforcement Point

The policy enforcement point is responsible for enabling, monitoring, and terminating connections between a subject and an enterprise resource. In theory, this is treated as a single component of zero trust architecture. But in practice, the policy enforcement point has two sides: 1) the client side, which could be an agent on a laptop or a server; and 2) the resource side, which acts as a gateway to control access.
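The hand-off between the three components might look like the following minimal sketch, in which the policy engine is reduced to a plain callable. `PolicyAdministrator` and `PolicyEnforcementPoint` are hypothetical classes written for illustration; in practice the PEP would be an agent or gateway, and the PA would configure it over a control channel.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PolicyEnforcementPoint:
    """Gateway in front of resources: forwards traffic only for sessions
    the policy administrator has explicitly opened."""
    open_sessions: set = field(default_factory=set)

    def open(self, subject: str, resource: str) -> None:
        self.open_sessions.add((subject, resource))

    def close(self, subject: str, resource: str) -> None:
        self.open_sessions.discard((subject, resource))

    def forward(self, subject: str, resource: str) -> bool:
        return (subject, resource) in self.open_sessions


class PolicyAdministrator:
    """Executes policy-engine decisions by instructing the PEP."""

    def __init__(self, decide: Callable[[str, str], bool], pep: PolicyEnforcementPoint):
        self.decide = decide  # the policy engine, reduced to a callable
        self.pep = pep

    def handle(self, subject: str, resource: str) -> bool:
        if self.decide(subject, resource):
            self.pep.open(subject, resource)
            return True
        self.pep.close(subject, resource)  # also tears down an existing session
        return False


blocked = set()


def policy_engine(subject: str, resource: str) -> bool:
    # Stand-in decision: allow unless the subject has been blocked.
    return subject not in blocked


pep = PolicyEnforcementPoint()
pa = PolicyAdministrator(policy_engine, pep)
pa.handle("laptop-42", "hr-db")
print(pep.forward("laptop-42", "hr-db"))  # True
blocked.add("laptop-42")                  # e.g. a new detection on the device
pa.handle("laptop-42", "hr-db")
print(pep.forward("laptop-42", "hr-db"))  # False
```

Keeping the PEP "dumb" (it only knows which sessions are open) means a single decision change in the engine propagates to enforcement without touching the gateway logic.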

NIST SP 800-207 also describes the tenets of zero trust, which include:

- All data sources and computing services are considered resources.

- All communication is secured regardless of network location; network location does not imply trust.

- Access to individual enterprise resources is granted on a per-connection basis; trust in the requester is evaluated before the access is granted.

- Access to resources is determined by policy, including the observable state of user identity and the requesting system, and may include other behavioral attributes.

- The enterprise ensures all owned and associated systems are in the most secure state possible and monitors systems to ensure that they remain in the most secure state possible.

- All resource authentication and authorization are dynamic and strictly enforced before access is allowed; this is a constant cycle of obtaining access, scanning and assessing threats, adapting, and continually reevaluating trust.
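The per-connection and dynamic-authentication tenets can be illustrated with short-lived session grants. This is a minimal sketch that assumes a hypothetical `evaluate` callback and a 300-second default lifetime; the point is that renewing a grant re-runs the policy check instead of carrying trust forward. The injectable clock lets the example run without waiting.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class SessionGrant:
    subject: str
    resource: str
    expires_at: float


class SessionManager:
    """Per-session access with a short, hypothetical lifetime. A grant is
    never extended blindly: renewal re-runs the policy evaluation."""

    def __init__(self, evaluate: Callable[[str, str], bool],
                 ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self.evaluate = evaluate
        self.ttl = ttl_seconds
        self.clock = clock

    def request(self, subject: str, resource: str) -> Optional[SessionGrant]:
        if not self.evaluate(subject, resource):
            return None
        return SessionGrant(subject, resource, self.clock() + self.ttl)

    def is_valid(self, grant: Optional[SessionGrant]) -> bool:
        return grant is not None and self.clock() < grant.expires_at

    def renew(self, grant: SessionGrant) -> Optional[SessionGrant]:
        # Trust is re-evaluated on every renewal instead of carried forward.
        return self.request(grant.subject, grant.resource)


# Simulated clock and device state so the example runs instantly.
now = [0.0]
compliant = {"alice": True}
mgr = SessionManager(lambda s, r: compliant.get(s, False),
                     ttl_seconds=60, clock=lambda: now[0])

grant = mgr.request("alice", "reports")
now[0] = 59.0
print(mgr.is_valid(grant))  # True
now[0] = 61.0
print(mgr.is_valid(grant))  # False: expired, must be renewed
compliant["alice"] = False  # posture changed, e.g. a new vulnerability
print(mgr.renew(grant))     # None: re-evaluation denies the renewal
```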

Enterprises must ensure that all access requests are continuously vetted before allowing connections to any enterprise or cloud asset. That’s why enforcement of Zero Trust policies relies heavily on real-time visibility into user and device attributes such as:

- user identity

- endpoint hardware type

- firmware versions

- operating system versions

- patch levels

- vulnerabilities

- applications installed

- user logins

- security or incident detections
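As an illustration, a posture check over some of these attributes might look like the following minimal sketch. The `BASELINE` values, the version tuples, and the `(id, severity)` vulnerability format are hypothetical; a real deployment would pull these attributes from endpoint-management and vulnerability-scanning tools. Returning the list of reasons, rather than a bare boolean, makes a denial explainable to both the user and the security team.

```python
from dataclasses import dataclass, field


@dataclass
class DevicePosture:
    user: str
    hardware_type: str
    firmware_version: tuple
    os_version: tuple
    patch_level: int
    open_vulnerabilities: list = field(default_factory=list)  # (id, severity) pairs
    active_detections: int = 0


# Hypothetical baseline; real values would come from enterprise policy.
BASELINE = {
    "approved_hardware": {"corp-laptop", "corp-server"},
    "min_firmware": (1, 4),
    "min_os": (14, 2),
    "min_patch_level": 202405,
    "blocked_severities": {"critical", "high"},
}


def posture_violations(device: DevicePosture, baseline: dict = BASELINE) -> list:
    """Return the reasons a device fails the baseline (empty list = compliant)."""
    problems = []
    if device.hardware_type not in baseline["approved_hardware"]:
        problems.append("unapproved hardware")
    if device.firmware_version < baseline["min_firmware"]:
        problems.append("outdated firmware")
    if device.os_version < baseline["min_os"]:
        problems.append("outdated OS")
    if device.patch_level < baseline["min_patch_level"]:
        problems.append("missing patches")
    if any(sev in baseline["blocked_severities"] for _, sev in device.open_vulnerabilities):
        problems.append("unremediated high-severity vulnerability")
    if device.active_detections:
        problems.append("open security detections")
    return problems


laptop = DevicePosture("alice", "corp-laptop", (1, 5), (14, 1), 202406,
                       open_vulnerabilities=[("VULN-001", "high")])
print(posture_violations(laptop))
# ['outdated OS', 'unremediated high-severity vulnerability']
```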

Zero Trust Reference Architecture (Microsoft)

Instead of assuming everything behind the corporate firewall is safe, the Zero Trust model assumes a breach and verifies each request as though it originated from an open network. Regardless of where the request originates or what resource it accesses, Zero Trust teaches us to “never trust, always verify.” Every access request is fully authenticated, authorized, and encrypted before access is granted. Microsegmentation and least-privilege access principles are applied to minimize lateral movement. Rich intelligence and analytics are used to detect and respond to anomalies in real time.
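Microsegmentation with least privilege boils down to a default-deny list of allowed flows between segments. In this minimal sketch the segment names and ports are hypothetical; the helper `reachable_from` shows how small the blast radius of a compromised host stays when only explicitly listed flows are permitted.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_segment: str
    dst_segment: str
    port: int


# Hypothetical segments and flows. Anything not listed is denied by default,
# which is what limits lateral movement.
ALLOWED_FLOWS = {
    Flow("web", "app", 8443),
    Flow("app", "db", 5432),
}


def flow_permitted(flow: Flow) -> bool:
    return flow in ALLOWED_FLOWS


def reachable_from(segment: str) -> set:
    """Everything a compromised host in `segment` could still talk to."""
    return {(f.dst_segment, f.port) for f in ALLOWED_FLOWS if f.src_segment == segment}


print(flow_permitted(Flow("web", "app", 8443)))  # True
print(flow_permitted(Flow("web", "db", 5432)))   # False: web tier cannot reach the DB directly
print(reachable_from("web"))                     # {('app', 8443)}
```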

How to Achieve a Zero Trust Architecture

Use Zero Trust to gain visibility and context for all traffic (across user, device, location, and application), plus zoning capabilities for visibility into internal traffic. To gain that visibility and context, traffic needs to pass through a next-generation firewall with decryption capabilities. The next-generation firewall enables micro-segmentation of perimeters and acts as border control within your organization. While it’s necessary to secure the external perimeter, it’s even more crucial to gain the visibility to verify traffic as it crosses between the different functions within the network. Adding multi-factor authentication and other verification methods will increase your ability to verify users correctly. Use a Zero Trust approach to identify your business processes, users, data, data flows, and associated risks, and set policy rules that can be updated automatically, based on those risks, with every iteration.
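Risk-based policy with multi-factor step-up can be sketched as a score mapped to three outcomes. The signal names, weights, and thresholds below are hypothetical placeholders, and `tighten` is one simple way to model policy rules being "updated automatically": lowering the thresholds when risk rises makes the same request demand more verification.

```python
from dataclasses import dataclass

# Hypothetical risk signals and weights; real values would be tuned per business process.
RISK_WEIGHTS = {
    "new_device": 30,
    "unusual_location": 25,
    "sensitive_data": 20,
    "off_hours": 10,
}


@dataclass
class RiskPolicy:
    step_up_at: int = 30  # score at or above this requires multi-factor authentication
    deny_at: int = 70     # score at or above this is denied outright

    def evaluate(self, signals: set, mfa_passed: bool = False) -> str:
        score = sum(RISK_WEIGHTS.get(s, 0) for s in signals)
        if score >= self.deny_at:
            return "deny"
        if score >= self.step_up_at and not mfa_passed:
            return "require_mfa"
        return "allow"

    def tighten(self, amount: int) -> None:
        """Lower both thresholds, e.g. after a new threat is reported."""
        self.step_up_at = max(0, self.step_up_at - amount)
        self.deny_at = max(self.step_up_at, self.deny_at - amount)


policy = RiskPolicy()
print(policy.evaluate({"off_hours"}))                    # allow
print(policy.evaluate({"new_device"}))                   # require_mfa
print(policy.evaluate({"new_device"}, mfa_passed=True))  # allow
print(policy.evaluate({"new_device", "unusual_location", "sensitive_data"}))  # deny
policy.tighten(20)
print(policy.evaluate({"off_hours"}))                    # require_mfa
```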

Confidential Computing and Secure Enclaves

A new buzzword? No: confidential computing is a reality that is already coming into practice. I started reading about it recently, and it is an interesting topic because it plays a critical role in filling gaps in cybersecurity. Data protection is implemented at three levels: data at rest, data in transit, and data in use.

Data in use is the story behind confidential computing, because protections for the other two states (at rest and in transit) are widely adopted and have been in practical use for years. Data in use generally refers to data being processed by a computer’s central processing unit (CPU) or held in random access memory (RAM), where it is traditionally unencrypted. The Confidential Computing Consortium (https://confidentialcomputing.io/) is focused on helping define the standards for securing data in use. In practice, this means that if a hacker tries to dump data from RAM, the data will be encrypted: only the CPU hardware can decrypt it, and values are encrypted again before being written back to memory.

How do attackers dump data from memory? Hackers typically use debuggers and other advanced tools for this, sometimes with the help of administrators or insiders who can also remove audit trails and log files. At a basic level, if you are a Linux developer you are probably familiar with core files: you can run gcore to generate a core file for a running process whose runtime memory you want to dump, then load that core file in gdb and inspect the addresses, variables, and registers of interest to read their plaintext values.
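On Linux, `gcore <pid>` writes a core file (named `core.<pid>` by default) and `gdb <program> core.<pid>` opens it for inspection. The Python sketch below performs the equivalent raw read inside a single process using `ctypes`, to show that data in use sits in memory as plaintext. The card number is an illustrative test value, and `read_raw_memory` is a hypothetical helper; the zeroization at the end only shortens the exposure window, because other copies (such as the literal in the source) can still exist.

```python
import ctypes


def read_raw_memory(address: int, size: int) -> bytes:
    """Copy `size` bytes straight out of this process's memory, the same kind
    of raw read gdb's `x` (examine) command performs on a core file."""
    return ctypes.string_at(address, size)


# A secret the application is actively using ("data in use").
secret = bytearray(b"card=4111-1111-1111-1111")  # illustrative test value

# Locate the bytearray's backing buffer without copying it.
view = (ctypes.c_char * len(secret)).from_buffer(secret)
address = ctypes.addressof(view)

dumped = read_raw_memory(address, len(secret))
print(dumped)  # b'card=4111-1111-1111-1111': plaintext, no key needed

# Overwriting the buffer after use shortens (but does not remove) the exposure
# window; copies such as the bytes literal above may still live elsewhere.
ctypes.memset(address, 0, len(secret))
print(read_raw_memory(address, len(secret)) == bytes(len(secret)))  # True
```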

Intel processors with Software Guard Extensions (Intel® SGX), AMD EPYC processors, and Arm processors with TrustZone provide advanced hardware security features, including encryption, for granular data protection. Amazon already offers AWS Nitro Enclaves, and other cloud providers such as Azure, GCP, and IBM offer confidential VMs and trusted execution environments (TEEs).

Secure Enclave

A secure enclave provides CPU hardware-level isolation and memory encryption on the server, isolating application code and data from anyone with privileged access and encrypting its memory. With additional software, secure enclaves can also enable the encryption of both storage and network data for simple full-stack security. Secure enclave hardware support is built into many recent CPUs from Intel and AMD.
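To illustrate the idea (not how any particular CPU implements it), here is a toy model in which plaintext exists only inside the enclave object's methods, while the `dram` dictionary stands in for RAM that a privileged host could dump. `ToyEnclave` is a hypothetical class, and its cipher is a SHAKE-256 keystream chosen only because it is in Python's standard library; hardware such as Intel SGX uses dedicated encryption engines with additional protections that this sketch omits.

```python
import hashlib
import secrets


class ToyEnclave:
    """Toy model of enclave memory encryption. Plaintext exists only inside
    read()/write() (standing in for the CPU package); `dram` stands in for
    RAM that a privileged host could dump. Illustration only."""

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)  # never leaves the "CPU"
        self.dram = {}       # address -> (version, ciphertext), visible to the host
        self._versions = {}  # per-address write counter so a keystream is never reused

    def _keystream(self, address: int, version: int, length: int) -> bytes:
        seed = self._key + address.to_bytes(8, "big") + version.to_bytes(8, "big")
        return hashlib.shake_256(seed).digest(length)

    def write(self, address: int, plaintext: bytes) -> None:
        version = self._versions.get(address, 0) + 1
        self._versions[address] = version
        stream = self._keystream(address, version, len(plaintext))
        self.dram[address] = (version, bytes(p ^ k for p, k in zip(plaintext, stream)))

    def read(self, address: int) -> bytes:
        version, ciphertext = self.dram[address]
        stream = self._keystream(address, version, len(ciphertext))
        return bytes(c ^ k for c, k in zip(ciphertext, stream))


enclave = ToyEnclave()
enclave.write(0x1000, b"patient-id=12345")
host_dump = enclave.dram[0x1000][1]  # what a privileged memory dump would see
print(host_dump)                     # random-looking bytes, not the plaintext
print(enclave.read(0x1000))          # b'patient-id=12345'
```

Compared with the plaintext read in the core-dump discussion, the host now only ever sees ciphertext, while code running "inside" the enclave works with normal values.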

There are two ways to go. You can rebuild current applications to run in a TEE, either by integrating a secure enclave SDK such as Open Enclave (https://openenclave.io/sdk/) or by using another vendor-supported enclave framework (for example, a base OS image for containers) that is compatible with the hardware-encryption processor family. Alternatively, you can lift and shift enterprise applications onto an underlying secure enclave platform whose OS abstraction layers do all the necessary mappings, like AWS Nitro. I expect a lot of enterprise migration to cloud computing in the coming years to protect PII, financial, and healthcare information, closing gaps in cybersecurity as enterprise products scale.

There are a lot of opportunities to build security frameworks, container security, and cloud service integrations for data protection using confidential computing.
