Upgrading To Inform OS 3.1.2

Before beginning an upgrade of the 3PAR Inform OS to version 3.1.2, it is recommended to read through the following guides:

- HP 3PAR Upgrade Pre-Planning Guide

- HP 3PAR Service Processor Software Installation Instructions

When performing the upgrade, 3PAR Support will want to be either onsite or on the phone. They will ask for the following details:

- Host Platform e.g. StoreServ 7200

- Architecture e.g. SPARC/x86

- OS e.g. 3.1.1

- DMP Software

- HBA details

- Switch details

- 3PAR license details

A few useful commands here are:

- showsys

- showfirmwaredb

- showlicense

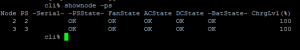

- shownode
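If you would rather collect all of this output in one go, the commands above can be wrapped in a small script. This is only a minimal sketch: it assumes the system `ssh` client is installed, that key-based login to the array is already set up, and that the array accepts a single CLI command per SSH call. The user `3paradm` and host `3par01.example.local` mentioned afterwards are placeholders, not values from this environment.

```python
import subprocess

# The CLI commands listed above.
PRE_UPGRADE_COMMANDS = ["showsys", "showfirmwaredb", "showlicense", "shownode"]


def build_ssh_command(user, host, cli_command):
    """Return the argv list that runs one 3PAR CLI command over ssh."""
    return ["ssh", f"{user}@{host}", cli_command]


def collect(user, host, commands=PRE_UPGRADE_COMMANDS, runner=subprocess.run):
    """Run each command in turn and return {command: captured output}."""
    results = {}
    for cli_command in commands:
        completed = runner(
            build_ssh_command(user, host, cli_command),
            capture_output=True,
            text=True,
            check=False,  # keep going even if one command fails
        )
        results[cli_command] = completed.stdout
    return results
```

Calling `collect("3paradm", "3par01.example.local")` would return a dictionary keyed by command name, which is handy to save to a file and send to 3PAR Support. The `runner` parameter is only there so the function can be exercised without a real array.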

Here is a quick run-through of the items that are recommended to be checked. The first item is your current Inform OS version, as this will determine how the upgrade has to be performed.

Log into your 3PAR via SSH and issue the command ‘showversion’. This will give you your release version and any patches which have been applied.

Here our 3PAR is on 3.1.1; however, the output doesn’t specify whether we have a direct upgrade path to 3.1.2 or not (see table below).

If you are going from 3.1.1 to 3.1.2, Remote Copy groups can be left replicating; if you are upgrading from 2.3.1, the Remote Copy groups must be stopped.
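The Remote Copy rule is easy to capture in a few lines. The sketch below encodes only the two cases stated above; any other version is deliberately left undecided rather than guessed. The function name and the wording of the returned strings are my own.

```python
def remote_copy_action(current_version):
    """Map the running Inform OS version to the Remote Copy step for a 3.1.2 upgrade."""
    # Only the two cases described in the text are encoded here.
    rules = {
        "3.1.1": "leave Remote Copy groups replicating",
        "2.3.1": "stop Remote Copy groups before upgrading",
    }
    parts = current_version.split()
    if not parts:
        return "unknown: check the upgrade pre-planning guide"
    # Compare on the first three numeric fields so longer version strings still match.
    base = ".".join(parts[0].split(".")[:3])
    return rules.get(base, "unknown: check the upgrade pre-planning guide")


print(remote_copy_action("3.1.1"))
print(remote_copy_action("2.3.1"))
```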

Any scripts you may have running against the 3PAR should be stopped, and the same goes for any environment changes (common sense really).

The 3PAR must be in a healthy state, each node should be multipathed, and a check should be undertaken to confirm that all paths have active I/O.

If the 3PAR is attached to a vSphere Cluster, then the path policy must be set to Round Robin.

Once you have verified these, you are good to go.
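The multipath and active I/O checks can be expressed as a small function if you have the path data to hand. This is a sketch under assumptions: the input is a hand-built list of `(host, port, io_per_sec)` tuples rather than parsed CLI output, the host names are made up, and "multipathed" is taken to mean that each host reaches at least two different nodes.

```python
def check_paths(paths):
    """Return a list of problems; an empty list means the checks pass.

    paths: list of (host, port, io_per_sec) tuples, port being an 'N:S:P' string.
    """
    problems = []
    nodes_per_host = {}
    for host, port, io_per_sec in paths:
        node = port.split(":")[0]
        nodes_per_host.setdefault(host, set()).add(node)
        # Every path should be carrying I/O before the upgrade starts.
        if io_per_sec <= 0:
            problems.append(f"{host}: no active I/O on path {port}")
    for host, nodes in sorted(nodes_per_host.items()):
        # Assumption: a multipathed host has paths to at least two nodes.
        if len(nodes) < 2:
            problems.append(f"{host}: paths reach only node(s) {sorted(nodes)}")
    return problems


sample = [("esx01", "0:0:1", 120), ("esx01", "1:0:1", 95), ("esx02", "0:0:2", 0)]
for problem in check_paths(sample):
    print(problem)
```

In the sample data, `esx02` would be flagged twice: once for the idle path and once for only reaching a single node.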

3PAR Virtual Ports – NPIV

NPIV allows an N_Port, in this case that of the 3PAR HBA, to assume the identity of another port without multipath dependency. Why is this important? Well, it means that if a storage controller is lost or rebooted, it is transparent to the host paths, meaning connectivity remains, albeit with fewer interconnects.

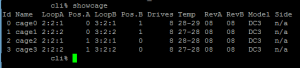

When learning any SAN, you need to get used to the naming conventions. For 3PAR, the convention is Node:Slot:Port, or N:S:P for short.
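If you end up scripting against port names, it helps to split an N:S:P value into its three parts. A minimal sketch follows; it accepts both the plain `0:0:1` form and the lettered `N0:S0:P1` form used in this post. The `PortPosition` type and `parse_nsp` name are just illustrative.

```python
import re
from typing import NamedTuple


class PortPosition(NamedTuple):
    node: int
    slot: int
    port: int


# Optional N/S/P letters in front of each number, upper or lower case.
_NSP = re.compile(r"^N?(\d+):S?(\d+):P?(\d+)$", re.IGNORECASE)


def parse_nsp(text):
    """Parse a Node:Slot:Port string into a PortPosition."""
    match = _NSP.match(text.strip())
    if match is None:
        raise ValueError(f"not a Node:Slot:Port value: {text!r}")
    return PortPosition(*(int(group) for group in match.groups()))


print(parse_nsp("0:0:1"))
print(parse_nsp("N1:S0:P2"))
```

A value with a missing number, such as `N:S0:P2`, raises `ValueError` instead of being silently accepted.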

Each host-facing port has a ‘Native’ identity (primary path) and a ‘Guest’ identity (backup path) on a different 3PAR node in case of node failure.

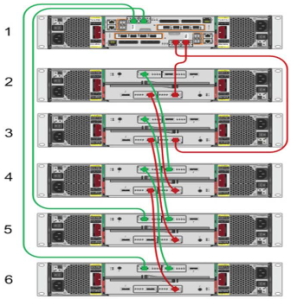

It is recommended to use Single Initiator Zones when working with 3PAR and to connect the Native and Guest (backup) ports to the same switch.

S1 – N0:S0:P1

S2 – N:S0:P2

S3 – N0:S0:P1

S4 – N0:S0:P2

For NPIV to work, you need to make sure that both the Fabric switches and the HBAs in the 3PAR support NPIV. Note that the HBAs in the Host do not need to support NPIV, as the change to the WWN will be transparent to the Host-facing HBAs.

How does it actually work? Well, if the Native port goes down, the Guest port takes over in two steps:

- Guest port logs into Fabric switch with the Guest identity

- Host path from Fabric switch to the 3PAR uses Guest path
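The two steps above can be pictured with a toy model of the fabric's login table. This is purely illustrative and not how the switch or the 3PAR implements it; the class, the function and the WWN value `wwn-a` are all made up for the sketch.

```python
class FabricSwitch:
    """Toy login table: which 3PAR port each WWN is currently presented from."""

    def __init__(self):
        self.logins = {}  # WWN -> N:S:P of the port presenting it

    def login(self, wwn, port):
        self.logins[wwn] = port

    def logout_port(self, port):
        # Drop every identity that was logged in from the given port.
        self.logins = {w: p for w, p in self.logins.items() if p != port}


def fail_over(switch, native_port, guest_port, wwn):
    """Move one identity from its Native port to its Guest port."""
    switch.logout_port(native_port)  # the Native port has gone down
    switch.login(wwn, guest_port)    # step 1: Guest port logs in with the Guest identity
    return switch.logins[wwn]        # step 2: the host path now lands on the Guest port


switch = FabricSwitch()
switch.login("wwn-a", "0:0:1")
print(fail_over(switch, "0:0:1", "1:0:1", "wwn-a"))
```

The point of the model is that the host keeps talking to the same WWN throughout; only the physical port behind it changes.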

The really cool thing is that, as part of the online upgrade, the software will:

- Validate Virtual Ports to ensure the same WWNs appear on the Native and Guest ports

- Validate that the Native and Guest ports are plugged into the same Fabric switch
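If you want to run the same two checks yourself before the upgrade window, the logic looks roughly like this. It is a sketch over hand-entered data, not a reproduction of what the upgrade software does internally; the dictionary keys, switch names and WWN values are hypothetical.

```python
def validate_port_pairs(pairs):
    """Return a list of problems found; an empty list means every pair passes.

    Each pair is a dict with the keys native_port, guest_port,
    wwns_on_native, wwns_on_guest, native_switch and guest_switch.
    """
    problems = []
    for pair in pairs:
        label = f"{pair['native_port']} / {pair['guest_port']}"
        # Check 1: the same WWNs must appear on the Native and Guest ports.
        if set(pair["wwns_on_native"]) != set(pair["wwns_on_guest"]):
            problems.append(f"{label}: WWNs differ between Native and Guest port")
        # Check 2: both ports must be plugged into the same Fabric switch.
        if pair["native_switch"] != pair["guest_switch"]:
            problems.append(f"{label}: ports are on different switches")
    return problems


example = [
    {
        "native_port": "0:0:1", "guest_port": "1:0:1",
        "wwns_on_native": ["wwn-a"], "wwns_on_guest": ["wwn-a"],
        "native_switch": "fabric-a", "guest_switch": "fabric-a",
    },
    {
        "native_port": "0:0:2", "guest_port": "1:0:2",
        "wwns_on_native": ["wwn-b"], "wwns_on_guest": ["wwn-b"],
        "native_switch": "fabric-a", "guest_switch": "fabric-b",
    },
]
for problem in validate_port_pairs(example):
    print(problem)
```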

If everything is ‘tickety boo’ then Node 0 will be shut down and transparently failed over to Node 1. After the reboot, Node 0 will be running the new 3PAR OS 3.1.2; Node 1 is then failed over to the Node 0 Guest ports and Node 1 is upgraded. This continues until all Nodes are upgraded.
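To make the ordering concrete, here is a small sketch that prints the sequence for a given number of nodes. It assumes nodes are partnered as 0 with 1, 2 with 3 and so on, and that nodes are upgraded in numeric order; both are assumptions for illustration, and the step wording is mine.

```python
def rolling_upgrade_plan(node_count, target="3.1.2"):
    """Return the ordered list of steps for a node-by-node online upgrade."""
    if node_count < 2 or node_count % 2:
        raise ValueError("this sketch expects an even number of nodes (pairs)")
    steps = []
    for node in range(node_count):
        partner = node ^ 1  # assumed pairing: 0<->1, 2<->3, ...
        steps.append(f"fail over Node {node} to Guest ports on Node {partner}")
        steps.append(f"reboot Node {node} on {target}")
    return steps


for step in rolling_upgrade_plan(2):
    print(step)
```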

An upgrade shouldn’t require any user interaction; however, as we all know, things can go wrong. A few useful commands to have in your toolbox are:

- showport

- showportdev

- statport/histport

- showvlun/statvlun