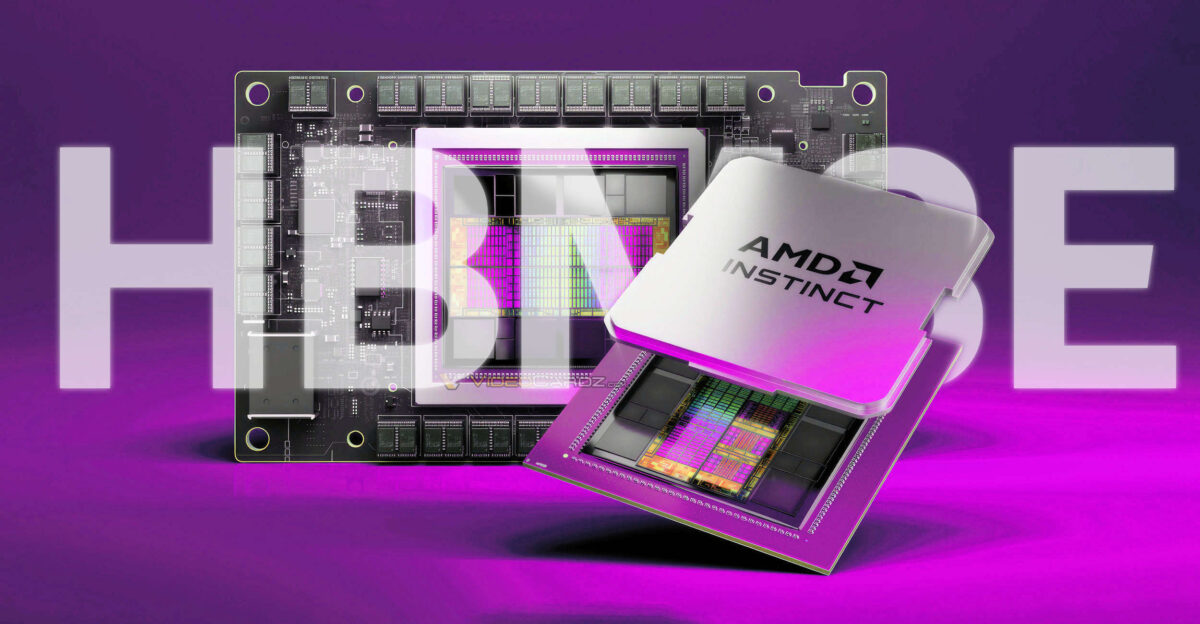

AMD MI300 expected to get HBM3E update

Mark Papermaster (Chief Technology Officer and Executive Vice President) confirms the AMD MI300 was architected to support HBM3E.

Micron and Samsung have recently reported important updates to High Bandwidth Memory (HBM), specifically the HBM3E variant, targeting next-gen and refreshed current-gen data-center accelerators. NVIDIA’s H200 is already confirmed to incorporate the advanced HBM3E. However, it seems AMD plans to upgrade its Instinct series from HBM3 as well.

Many enterprise customers are exploring alternatives, considering factors like cost and availability. AMD has positioned its Instinct MI300 series as formidable contenders against NVIDIA’s Hopper and Ampere processors. The MI300 series has several advantages, such as larger memory capacity.

The MI300A from AMD is equipped with 228 CDNA 3 Compute Units, accompanied by 24 x86 Zen 4 cores. This SKU is outfitted with 128 GB of HBM3 memory. For those with compute-intensive requirements, the MI300X steps up to 304 CDNA 3 Compute Units and 192 GB of HBM3 memory. However, this product lacks Zen 4 cores. The MI300 lineup competes with the NVIDIA H200 and H200 Super Chip, both based on the Hopper architecture, with the Super Chip also pairing the GPU with Arm Neoverse V2 cores.

HPC Accelerators

| Accelerator | Architecture | GPU CU/SM | CPU Cores | Memory |
|---|---|---|---|---|
| AMD Instinct MI300A | AMD CDNA 3 | 228 | 24 “Zen 4” | 128 GB HBM3 |
| AMD Instinct MI300X | AMD CDNA 3 | 304 | – | 192 GB HBM3 |
| NVIDIA H100 | NVIDIA Hopper | 132 | – | 64 GB / 80 GB / 96 GB HBM3 |
| NVIDIA H200 | NVIDIA Hopper | ~132 | – | 141 GB HBM3E |
| NVIDIA H200 Super Chip | NVIDIA Grace Hopper | 132 | 72 Arm | 96 GB HBM3 / 141 GB HBM3E |

AMD has now confirmed that the company will upgrade its Instinct products to HBM3E technology. HBM3E not only has lower power consumption, it also enables higher bandwidth and more capacity by moving from 8-Hi to 12-Hi stacks, which is 50% more layers per stack. A 12-Hi stack can enable up to 36 GB of capacity per module, as shared by Samsung today. Theoretically, this would enable 8 HBM3E modules to support 288 GB of capacity.
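The capacity arithmetic above can be checked with a short sketch. It assumes the figures quoted in this article (8 modules, 36 GB per 12-Hi HBM3E module, 192 GB on the current MI300X); the function and constant names are illustrative only and do not describe any announced AMD configuration.

```python
def total_capacity_gb(modules: int, gb_per_module: int) -> int:
    """Total memory capacity for a package with identical HBM modules."""
    return modules * gb_per_module


# Figures taken from the article; the pairing is theoretical, not a confirmed SKU.
MODULES = 8                # assumed module count, as in the article's estimate
HBM3E_12HI_GB = 36         # per-module capacity quoted for a 12-Hi HBM3E stack
MI300X_HBM3_GB = 192       # current MI300X capacity with HBM3

hbm3e_total = total_capacity_gb(MODULES, HBM3E_12HI_GB)
uplift = hbm3e_total / MI300X_HBM3_GB

print(f"Theoretical HBM3E capacity: {hbm3e_total} GB")
print(f"Increase over MI300X HBM3: {uplift:.2f}x")
```

Under these assumptions the theoretical total is 288 GB, or 1.5 times the 192 GB the MI300X ships with today.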

We are not standing still. We made adjustments to accelerate our roadmap with both memory configurations around the MI300 family, derivatives of MI300, the generation next. […] So, we have 8-Hi stacks. We architected for 12-Hi stacks. We are shipping with MI300 HBM3. We have architected for HBM3E.

Mark Papermaster (AMD CTO) via Seeking Alpha

With MI300 receiving an update to HBM3E this year, one should also look forward to MI400, which is expected to hit the server racks in 2025. Meanwhile, NVIDIA is now expected to tease its B100 Blackwell HPC accelerator at the Spring GTC 2024 keynote in March.

Source: Wccftech